
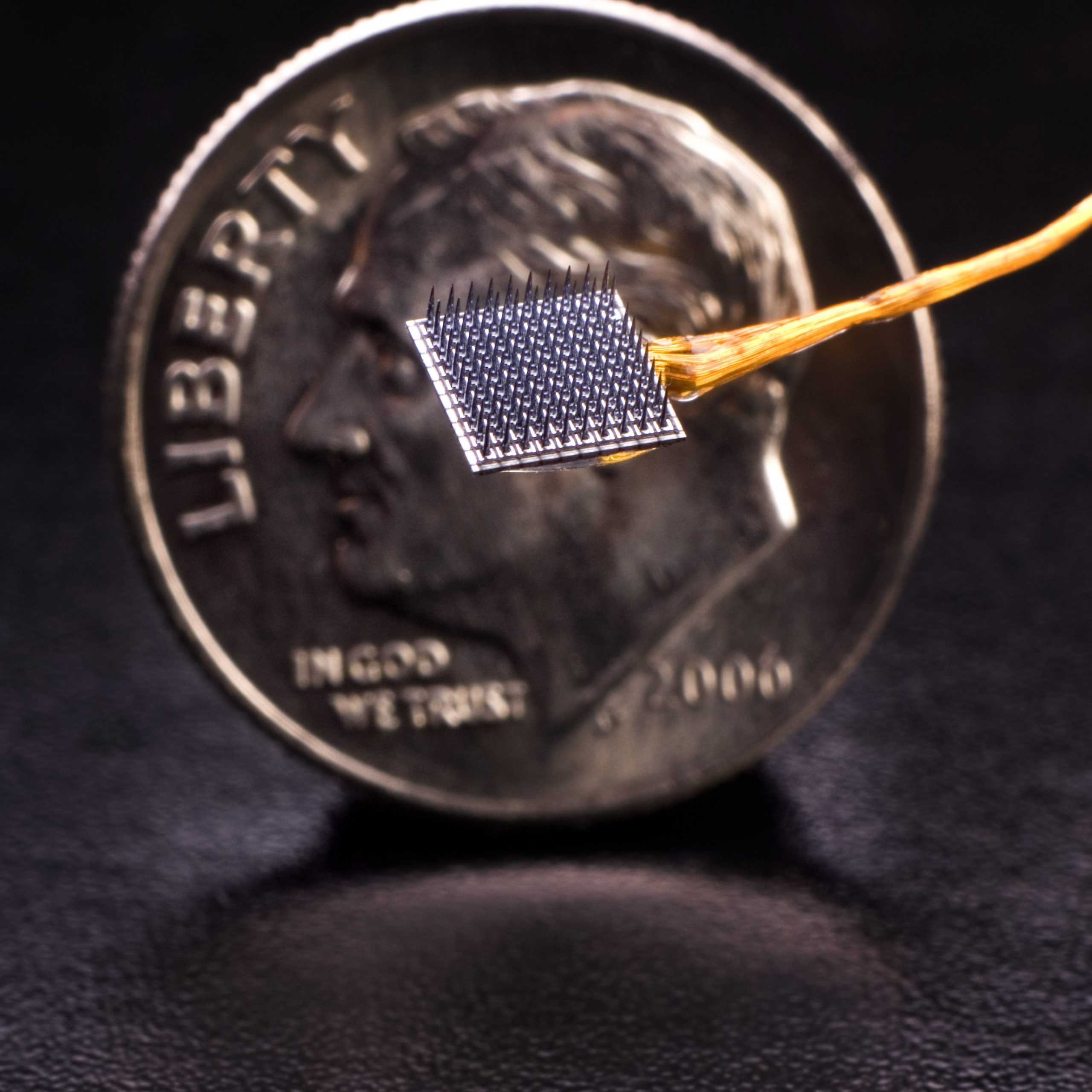

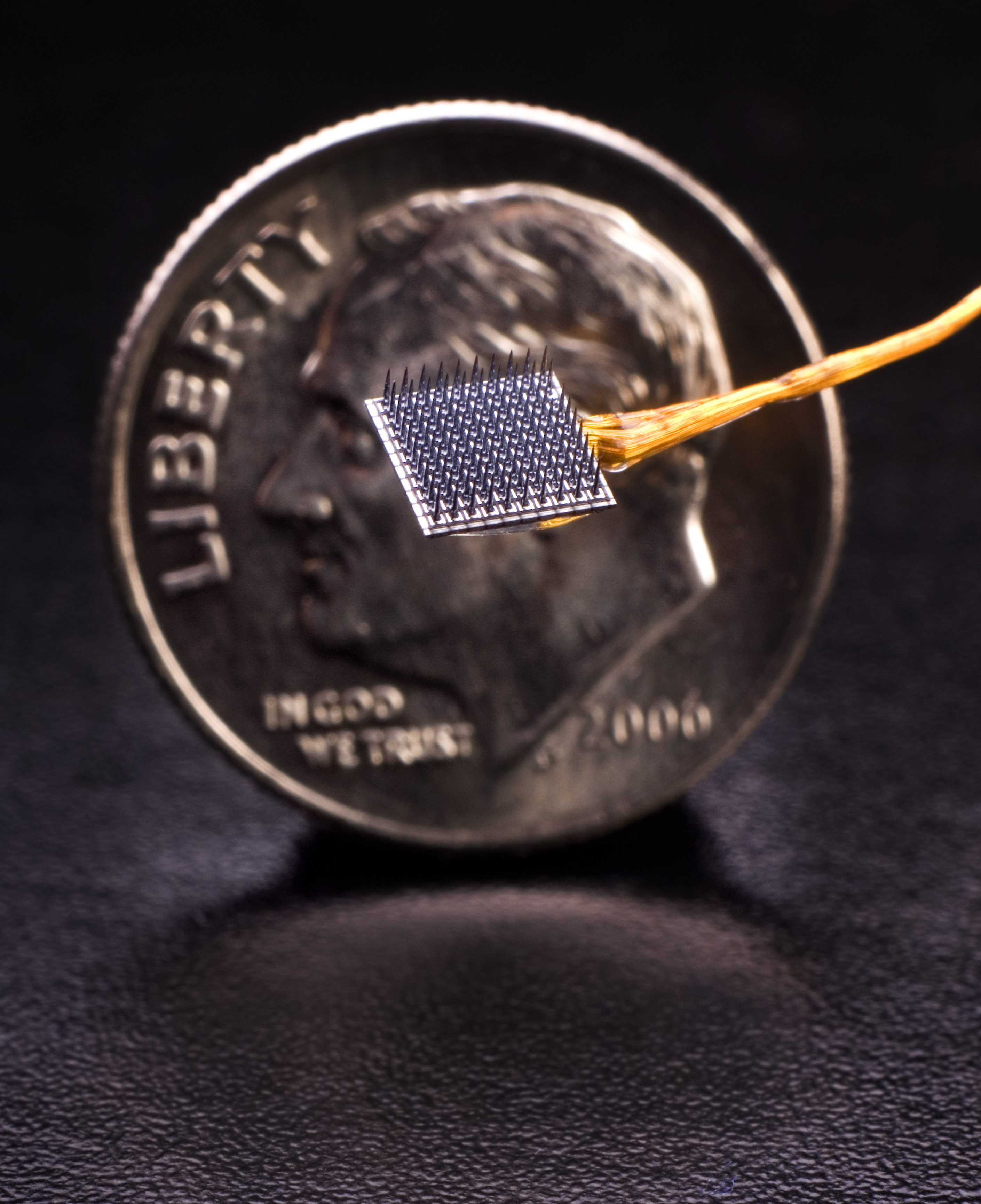

Illustration of an electrode array similar to those implanted in the brains of study participants

Image courtesy of Daniel Rubin

For years, people with paralysis have used brain-computer interfaces to translate neural signals into actions simply by thinking about what they want to do: typing text, moving robotic arms, producing speech. New research suggests that these interfaces can decode not only intended speech but also inner speech. Kind of.

It is a significant advance for people who have difficulty communicating, said Daniel Rubin, one of the authors of the study, which was published in the journal Cell.

“Communication is fundamentally a key part of what makes us human,” said Rubin, an instructor at Harvard Medical School and a neurologist at Mass General. “So any way we can help restore communication is a way to improve quality of life.”

The research builds on decades of work by BrainGate, a long-running, multi-institution clinical trial. Earlier trials allowed people with paralysis to perform a range of tasks (typing characters, moving a computer cursor, operating a robotic arm) through an implanted brain-computer interface (BCI). Participants imagined moving their hands up, down, left, or right while tiny sensors in the motor cortex decoded those intentions. More recently, researchers wondered whether, instead of decoding the intended movement of the hand, wrist, and arm, they could decode the intended movement of the muscles we use for speech: the face, mouth, jaw, and tongue.

The answer is yes, but only because of advances in artificial intelligence.

“Decoding speech is a very different computational problem from decoding hand movements,” Rubin said.

To simplify the task, participants attempted to speak predetermined sentences. Electrode arrays implanted in their motor cortex detected signals corresponding to 39 English phonemes, the basic sounds of speech. Machine learning models then assembled those sounds into the most probable words and phrases.

“It’s doing this somewhat probabilistically,” explained study co-author Ziv Williams, an associate professor at Harvard Medical School and a neurosurgeon at MGH. “For instance, if recordings from neurons in the brain show both a ‘D’ sound and a ‘G’ sound, the person is likely trying to say ‘dog.’”
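To make the probabilistic idea in Williams’s “dog” example concrete, here is a minimal Python sketch. It is not the study’s decoder: the word list, the ARPAbet-style phoneme spellings, the word priors, and the assumption of exactly one decoded frame per phoneme are all hypothetical simplifications chosen for illustration. Each candidate word is scored by combining (in log space) the decoder’s probability for each of its phonemes with a prior for the word, and the highest-scoring word wins.

```python
import math

# Hypothetical toy vocabulary: each word spelled as a sequence of
# ARPAbet-style phoneme symbols (illustrative only, not the study's set).
VOCAB = {
    "dog": ["D", "AO", "G"],
    "dig": ["D", "IH", "G"],
    "log": ["L", "AO", "G"],
    "cat": ["K", "AE", "T"],
}

# Hypothetical prior over words, standing in for a language model.
WORD_PRIOR = {"dog": 0.4, "dig": 0.2, "log": 0.2, "cat": 0.2}


def word_log_score(frames, phonemes, prior, floor=1e-6):
    """Log-probability of one word given per-frame phoneme probabilities.

    Assumes exactly one decoded frame per phoneme, so frame i is compared
    with the word's i-th phoneme. Phonemes missing from a frame get `floor`.
    """
    if len(frames) != len(phonemes):
        return float("-inf")
    score = math.log(prior)
    for frame, ph in zip(frames, phonemes):
        score += math.log(frame.get(ph, floor))
    return score


def decode_word(frames, vocab=VOCAB, prior=WORD_PRIOR):
    """Return the vocabulary word with the highest combined score."""
    return max(vocab, key=lambda w: word_log_score(frames, vocab[w], prior[w]))


# Simulated decoder output: clear 'D' and 'G' sounds, ambiguous vowel.
frames = [
    {"D": 0.8, "L": 0.1, "K": 0.1},
    {"AO": 0.5, "IH": 0.4, "AE": 0.1},
    {"G": 0.9, "T": 0.1},
]
print(decode_word(frames))  # dog
```

Here “dog” scores 0.4 × 0.8 × 0.5 × 0.9 = 0.144 against 0.0576 for “dig”, so a clear “D” and “G” plus a modest preference for the more likely word is enough to settle an uncertain vowel.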

Once they had confirmed that algorithms could decode intended speech, the researchers turned to inner speech. The work was led by the BrainGate team at Stanford University, alongside Williams, Rubin, and their Harvard/MGH colleague Leigh Hochberg. Earlier studies had indicated that silently rehearsing words engages some of the same regions of the motor cortex as intended speech, albeit with weaker signals.

In one of several experiments probing inner speech, researchers showed participants a grid of colored shapes: green circles, green rectangles, pink circles, and pink rectangles. The team hypothesized that when asked to count only the pink rectangles, participants would silently say the numbers as they scanned the grid. Over many trials, a decoder learned to pick out number words from those unspoken counts.

But problems arose when researchers tried to decode unstructured inner thought. When participants were asked open-ended autobiographical questions, for instance, “Reflect on a memorable vacation you’ve enjoyed,” the decoders mostly produced noise.

For Rubin, this gets at the nature of thought itself and the limits of probing another person’s mind. “When I’m thinking, I hear my own voice saying words; I always have an internal monologue,” he said. “But that isn’t necessarily a universal experience.” Many people don’t hear words when they think, he noted; people who communicate primarily through sign language may experience their thoughts as images of hand movements.

“It touches on an aspect of neuroscience that we are just beginning to truly understand,” Rubin said. “This idea that a representation of speech is likely distinct from a representation of language.”

Future implants are expected to pack 10 times as many electrodes into the same area, greatly expanding the neural signals researchers can record, Rubin said. He expects this to bring substantial benefits for future users.

“What we explain is that, because of the nature of this research, we can’t guarantee that everything will work perfectly,” he said. “All our participants understand this, and they say, ‘It would be great if this turns into something that helps me.’ But they are genuinely committed to making sure that people with paralysis years from now will have a better experience and a better quality of life.”

For some participants, it is already making a difference: two of the four people featured in the study use their BCI as their primary means of communication.
