
Detecting AI-written content is a difficult balancing act: a detector must reliably recognize AI-generated text while avoiding unwarranted accusations against human authors. Few tools strike the right balance.

A team of researchers at the University of Michigan says it has developed a new way to tell whether a piece of text was written by AI that meets both requirements. The method could be especially useful in academia and public policy as AI-generated content becomes increasingly hard to distinguish from human writing.

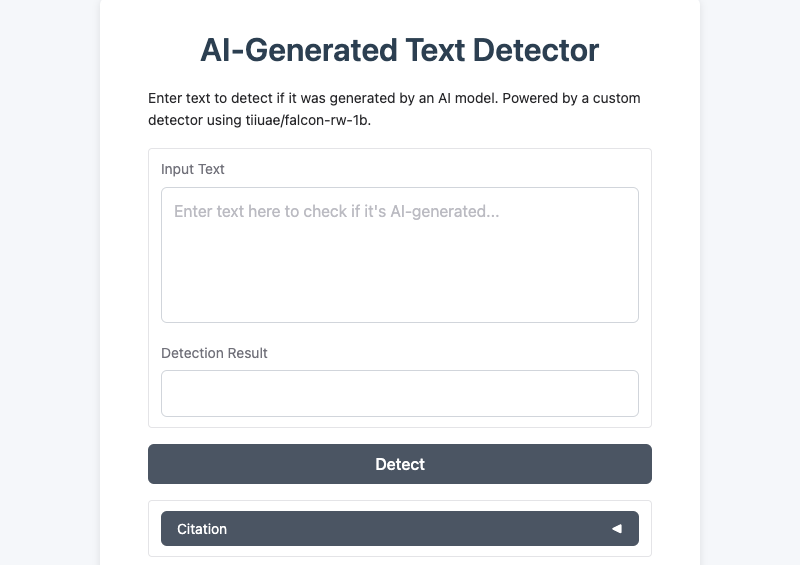

The researchers call their tool “Liketropy,” a name drawn from the theory behind the method: it combines likelihood and entropy, the two statistical concepts that underpin its test.

They developed “zero-shot statistical assessments,” which can determine whether a text was written by a person or a large language model (LLM) without prior training on examples from either source.

The current tool focuses on LLMs, a particular type of AI that generates text. It uses statistical properties of the text itself, such as how predictable or surprising the words are, to decide whether the text looks more human-written or machine-generated.
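
The article does not spell out the test itself, so the following is only a minimal sketch of the general idea of comparing likelihood with entropy, not the Liketropy procedure. It assumes a hypothetical model that exposes a next-token probability distribution at each position (`step_distributions`); the function names are illustrative. When text is sampled from the model itself, its average surprisal (negative log-likelihood) is expected to match the model's average entropy, so a gap near zero is consistent with that model as the author, while a larger gap hints at a different source.

```python
import math


def surprisal_and_entropy(step_distributions, observed_tokens):
    """Return (mean surprisal, mean entropy) in nats.

    step_distributions[i] is a dict mapping each candidate token to the
    probability a (hypothetical) model assigns it at position i.
    observed_tokens[i] is the token that actually appears in the text.
    Assumes every observed token has nonzero probability under the model.
    """
    total_surprisal = 0.0
    total_entropy = 0.0
    for dist, token in zip(step_distributions, observed_tokens):
        # Surprisal: how unexpected the written token was for the model.
        total_surprisal += -math.log(dist[token])
        # Entropy: how much surprise the model expected at this position.
        total_entropy += -sum(p * math.log(p) for p in dist.values() if p > 0)
    n = len(observed_tokens)
    return total_surprisal / n, total_entropy / n


def likelihood_entropy_gap(step_distributions, observed_tokens):
    """Mean surprisal minus mean entropy; near zero for model-like text."""
    surprisal, entropy = surprisal_and_entropy(step_distributions, observed_tokens)
    return surprisal - entropy


# Toy example: the same three-token distribution at every position.
dist = {"the": 0.5, "a": 0.25, "cat": 0.25}
model_like = ["the", "cat", "a", "the"]   # token frequencies match the model
unexpected = ["cat", "cat", "a", "a"]     # only low-probability tokens

gap_model_like = likelihood_entropy_gap([dist] * 4, model_like)
gap_unexpected = likelihood_entropy_gap([dist] * 4, unexpected)
print(gap_model_like, gap_unexpected)
```

In this toy case the first text has a gap of essentially zero and the second a clearly positive one. A real detector would still need a principled way to decide how large a gap counts as evidence.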

In tests on large datasets, including some where the models were hidden from the public or designed to evade detectors, the researchers say their tool performed well. When the test is tailored to specific LLMs as candidate authors of the text, it achieves an average accuracy above 96% and a false accusation rate as low as 1%.
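
The article does not say exactly how these two figures were computed. A minimal sketch, assuming the standard definitions (accuracy as the share of all texts classified correctly, and the false accusation rate as the share of human-written texts wrongly flagged as AI), could look like this; the function name and sample data are hypothetical.

```python
def detector_metrics(labels, predictions):
    """Return (accuracy, false_accusation_rate).

    labels and predictions are sequences of booleans:
    True means AI-written, False means human-written.
    """
    pairs = list(zip(labels, predictions))
    correct = sum(1 for truth, guess in pairs if truth == guess)
    # Predictions made on texts that were actually written by humans.
    guesses_on_human = [guess for truth, guess in pairs if not truth]
    false_accusations = sum(1 for guess in guesses_on_human if guess)
    accuracy = correct / len(pairs)
    false_accusation_rate = (
        false_accusations / len(guesses_on_human) if guesses_on_human else 0.0
    )
    return accuracy, false_accusation_rate


# Made-up data: four AI texts followed by four human texts.
labels = [True, True, True, True, False, False, False, False]
predictions = [True, True, True, False, False, False, False, True]
accuracy, false_accusation_rate = detector_metrics(labels, predictions)
print(accuracy, false_accusation_rate)
```

Reporting the two numbers separately matters because a detector can score high on accuracy while still accusing an unacceptable share of human writers.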

“We were very purposeful in not creating a detector that merely assigns blame. AI detectors can exhibit overconfidence, which is perilous—particularly in education and policymaking,” remarked Tara Radvand, a doctoral candidate at U-M’s Ross School of Business and co-author of the study. “Our objective was to remain vigilant against false allegations while still identifying AI-generated material with statistical assurance.”

One of the researchers’ surprising findings was how little they needed to know about a language model to detect it. The test worked well, challenging the assumption that detection must rely on access, training, or cooperation, Radvand noted.

The team was motivated by fairness, particularly for international students and non-native English speakers. Emerging research indicates that students who learned English as a second language may be unfairly flagged for “AI-like” writing because of their tone or sentence structure.

“Our tool can assist these students in self-assessing their writing in a low-stakes, transparent manner prior to submission,” Radvand explained.

Looking ahead, she and her collaborators plan to expand their demo into a tool that can be adapted to different domains. They have found that fields such as law and science, as well as applications like college admissions, call for different thresholds in the “cautious-effective” trade-off.
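
One way to picture a domain-specific threshold, offered as a sketch under stated assumptions rather than the team's approach: suppose a hypothetical detector gives each text a score where higher means more AI-like, and a text is flagged when its score exceeds the threshold. Given scores for texts known to be human-written, a domain can choose the threshold that keeps false accusations at or below its own tolerance. The function name and data below are illustrative.

```python
def threshold_for_false_accusation_rate(human_scores, max_rate):
    """Pick a threshold so that at most max_rate of human texts are flagged.

    A text is flagged when its score is strictly greater than the threshold.
    human_scores are detector scores for texts known to be human-written.
    """
    if not human_scores:
        raise ValueError("need at least one human-written score")
    ranked = sorted(human_scores, reverse=True)
    # Number of human texts this domain tolerates flagging by mistake.
    allowed = int(max_rate * len(ranked))
    if allowed >= len(ranked):
        return float("-inf")  # every text may be flagged
    # Only the `allowed` highest scores can lie strictly above this value.
    return ranked[allowed]


human_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
lenient = threshold_for_false_accusation_rate(human_scores, 0.2)
strict = threshold_for_false_accusation_rate(human_scores, 0.0)
print(lenient, strict)
```

A more cautious setting, such as one suited to admissions decisions, corresponds to a smaller `max_rate` and therefore a higher threshold, at the cost of missing more AI-generated text.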

An important use of AI detectors is curbing the spread of misinformation on social media. Some tools deliberately prompt LLMs to adopt extreme viewpoints and spread misinformation on these platforms to sway public opinion.

Because these systems can generate vast quantities of false information, the researchers say it is vital to build dependable detection tools that can flag such content and comments. Early detection helps platforms limit the spread of harmful narratives and preserve the integrity of public discourse.

They also plan to talk with U-M business and university leaders about the possibility of adopting their tool as a complement to U-M GPT and the Maizey AI assistant, to check whether a text was produced by these tools or by an external AI model such as ChatGPT.

Liketropy garnered a Best Presentation Award at the Michigan Student Symposium for Interdisciplinary Statistical Sciences, an annual event organized by graduate students. It was also highlighted by Paris Women in Machine Learning and Data Science, a France-based community of women interested in machine learning and data science that hosts various events.

Radvand’s co-authors included Mojtaba Abdolmaleki, also a doctoral student at the Ross School of Business; Mohamed Mostagir, associate professor of technology and operations at Ross; and Ambuj Tewari, a professor in U-M’s Department of Statistics.
