Imagine a radiologist examining a chest X-ray from a new patient. She notices that the patient has tissue inflammation but does not have an enlarged heart. To speed up the diagnosis, she might use a vision-language machine-learning model to search for reports from similar patients.

However, if the model mistakenly identifies reports containing both conditions, the most likely diagnosis could be quite different: If a patient has tissue inflammation and an enlarged heart, the condition is very likely cardiac-related, but without an enlarged heart there could be several underlying causes.

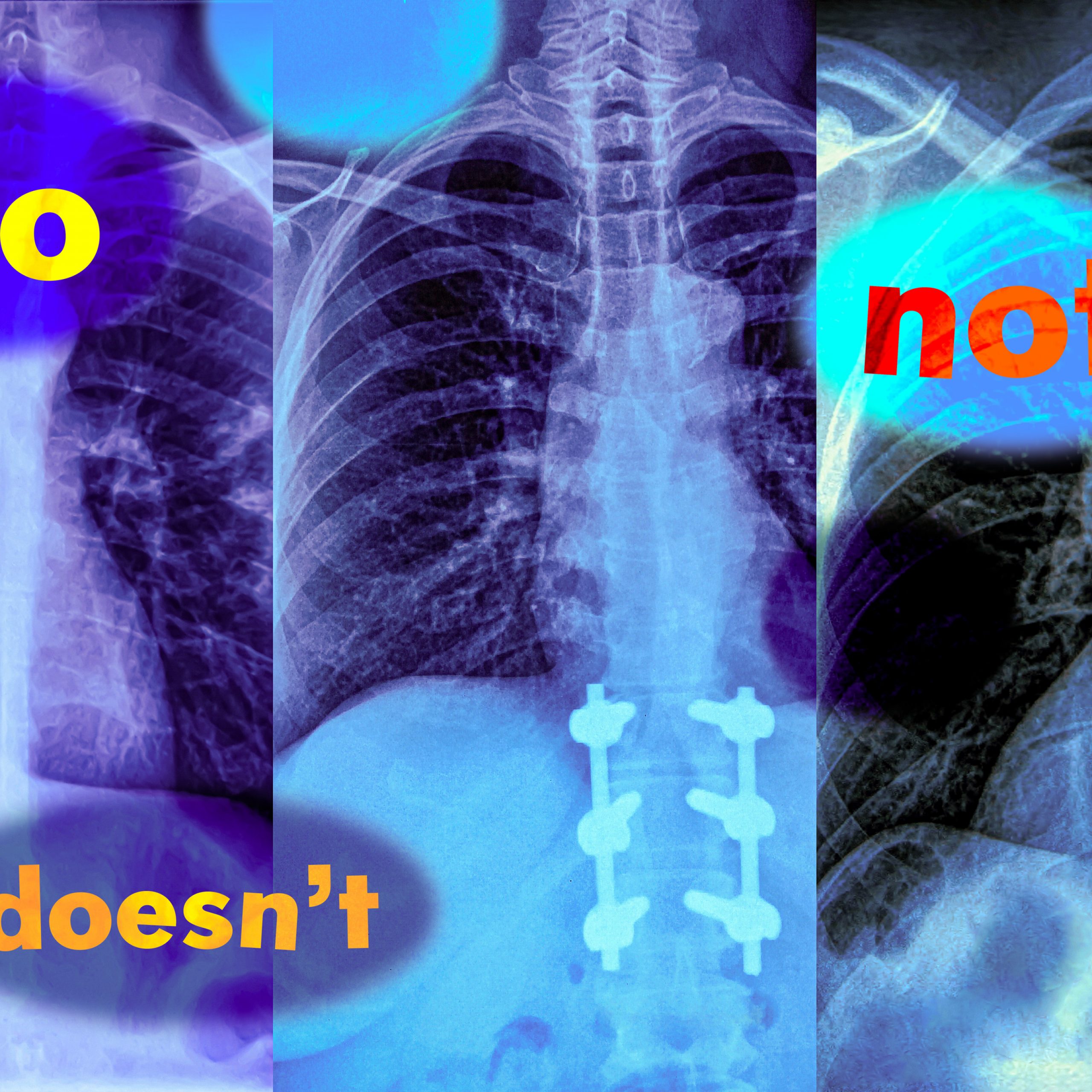

In a new study, MIT researchers have found that vision-language models are highly prone to this kind of error in real-world scenarios because they do not understand negation: words like “no” and “doesn’t” that specify what is false or absent.

“These negation terms can have a substantial influence, and if we rely on these models indiscriminately, we might encounter severe repercussions,” says Kumail Alhamoud, an MIT graduate student and lead author of this study.

The researchers tested the ability of vision-language models to identify negation in image captions. The models often performed no better than random guessing. Building on these findings, the team created a dataset of images paired with captions that include negation words describing missing objects.

They show that retraining a vision-language model with this dataset improves its performance when it is asked to retrieve images that do not contain certain objects. It also boosts accuracy on multiple-choice question answering with negated captions.

Nonetheless, the researchers caution that more work is needed to address the root causes of this problem. They hope their findings alert potential users to a previously unnoticed shortcoming that could have serious implications in the high-stakes settings where these models are currently used, from deciding which patients receive certain treatments to identifying product defects in manufacturing plants.

“While this is a technical paper, there are broader concerns to contemplate. If something as fundamental as negation is flawed, we should refrain from employing large vision/language models in many current applications without thorough evaluation,” says senior author Marzyeh Ghassemi, an associate professor in the Department of Electrical Engineering and Computer Science (EECS) and a member of the Institute for Medical Engineering and Science and the Laboratory for Information and Decision Systems.

Ghassemi and Alhamoud are joined on the paper by Shaden Alshammari, an MIT graduate student; Yonglong Tian of OpenAI; Guohao Li, a former postdoctoral researcher at Oxford University; Philip H.S. Torr, a professor at Oxford; and Yoon Kim, an assistant professor of EECS and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT. The research will be presented at the Conference on Computer Vision and Pattern Recognition.

Overlooking negation

Vision-language models (VLMs) are trained on huge collections of images and corresponding captions, which they learn to encode as sets of numbers called vector representations. The models use these vectors to distinguish between different images.

A VLM uses two separate encoders, one for text and one for images, and the encoders learn to output similar vectors for an image and its corresponding text caption.
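To make the idea of "similar vectors" concrete, here is a minimal sketch of how a caption vector could be matched against image vectors using cosine similarity. The three-dimensional vectors are hand-written placeholders rather than outputs of any real encoder, and cosine similarity is an assumption for illustration; the article does not say which similarity measure the studied models use.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings standing in for the image encoder's outputs.
image_vectors = {
    "dog_photo": [0.9, 0.1, 0.0],
    "cat_photo": [0.1, 0.9, 0.0],
}

# Hypothetical embedding standing in for the text encoder's output for "a dog".
caption_vector = [0.8, 0.2, 0.1]

def best_match(caption_vec, images):
    """Return the name of the image whose vector is most similar to the caption."""
    return max(images, key=lambda name: cosine_similarity(caption_vec, images[name]))

print(best_match(caption_vector, image_vectors))  # dog_photo
```

In a trained model the two encoders would produce these vectors; the matching step itself stays this simple.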

“The captions convey what exists within the images — they are a positive label. And that is exactly the core issue. No one gazes at an image of a dog leaping over a fence and captions it as ‘a dog leaping over a fence, without helicopters,’” explains Ghassemi.

Because the image-caption datasets contain no examples of negation, VLMs never learn to identify it.

To investigate this issue further, the researchers devised two benchmark tasks to evaluate VLMs’ capability to comprehend negation.

In the first, they employed a large language model (LLM) to re-caption images from an existing dataset by instructing the LLM to consider related objects not present in an image and include them in the caption. They then assessed the models by prompting them with negation words to retrieve images containing certain objects, but not others.
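A retrieval test of this kind could be scored roughly as follows. This is a sketch under stated assumptions: the image annotations and the two rankings are made up, and recall@k is used as the metric only because it is a common choice for retrieval; the article does not name the metric the researchers used.

```python
def is_relevant(image_objects, required, excluded):
    """An image matches if it has every required object and no excluded one."""
    return required <= image_objects and not (excluded & image_objects)

def recall_at_k(ranking, relevant, k):
    """Fraction of the relevant images that appear in the top k of a ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for name in ranking[:k] if name in relevant)
    return hits / len(relevant)

# Hypothetical annotations: the objects each image contains.
images = {
    "img_a": {"dog", "fence"},
    "img_b": {"dog", "fence", "cat"},
    "img_c": {"cat"},
    "img_d": {"dog"},
}

# Query: "a dog, but no cat"
relevant = {
    name for name, objects in images.items()
    if is_relevant(objects, required={"dog"}, excluded={"cat"})
}

# Made-up rankings: one ignores "no cat", the other respects it.
ranking_ignoring_negation = ["img_b", "img_a", "img_c", "img_d"]
ranking_respecting_negation = ["img_a", "img_d", "img_b", "img_c"]

print(recall_at_k(ranking_ignoring_negation, relevant, k=2))    # 0.5
print(recall_at_k(ranking_respecting_negation, relevant, k=2))  # 1.0
```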

For the second task, they designed multiple-choice questions that ask a VLM to select the most appropriate caption from a list of closely related options. The captions differ only by adding a reference to an object that does not appear in the image or by negating an object that does appear in the image.
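The multiple-choice setup can be sketched as a small evaluation harness. Everything below is illustrative: the questions, the object vocabulary, and the `negation_blind_score` function are hypothetical stand-ins, not the benchmark or any model from the study. The stand-in scorer deliberately ignores negation words so the harness has something to measure.

```python
import re

OBJECT_VOCAB = {"dog", "cat", "fence", "sofa"}  # hypothetical object vocabulary

def negation_blind_score(image_objects, caption):
    """Stand-in scorer: +1 for each mentioned object that is in the image,
    -1 for each mentioned object that is not. Negation words are ignored."""
    mentioned = set(re.findall(r"[a-z]+", caption.lower())) & OBJECT_VOCAB
    return len(mentioned & image_objects) - len(mentioned - image_objects)

def pick_caption(score, image_objects, options):
    """Index of the option the scoring function rates highest."""
    return max(range(len(options)), key=lambda i: score(image_objects, options[i]))

def accuracy(score, questions):
    """Share of questions where the top-scored option is the correct one."""
    correct = sum(
        pick_caption(score, q["image"], q["options"]) == q["answer"]
        for q in questions
    )
    return correct / len(questions)

questions = [
    {
        "image": {"dog", "fence"},
        "options": [
            "a dog and a fence, but no cat",  # correct
            "a dog, a fence and a cat",       # mentions an absent object
            "a fence, but no dog",            # negates a present object
        ],
        "answer": 0,
    },
    {
        "image": {"cat", "sofa"},
        "options": [
            "a dog, but no cat",
            "a cat and a sofa, but no dog",   # correct
            "a dog and a sofa",
        ],
        "answer": 1,
    },
]

print(accuracy(negation_blind_score, questions))  # 0.5
```

On the first question the stand-in scorer prefers "a fence, but no dog" because it only sees two objects that are in the image, which is the kind of mistake this task is designed to expose.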

The models frequently struggled with both tasks, with image retrieval performance dropping by nearly 25 percent with negated captions. On the multiple-choice questions, even the best models achieved only about 39 percent accuracy, with several models performing at or below random chance.

One reason for this failure is a shortcut the researchers call affirmation bias: VLMs ignore negation words and focus on the objects in the images instead.
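A toy example shows why such a shortcut is harmful. The encoder below is a simple bag-of-words counter, not a real VLM text encoder, and the word lists are assumptions chosen for illustration. When it discards negation words, an affirmative caption and its negated counterpart become indistinguishable.

```python
from collections import Counter

NEGATION_WORDS = {"no", "not", "without"}  # illustrative list, not from the study
STOP_WORDS = {"a", "an", "the", "and", "but", "with"}

def toy_encode(caption, drop_negation=True):
    """Bag-of-words 'embedding': word counts with function words removed.
    With drop_negation=True, negation words are discarded as well, which
    mimics the shortcut described above."""
    words = caption.lower().replace(",", " ").split()
    ignored = STOP_WORDS | (NEGATION_WORDS if drop_negation else set())
    return Counter(word for word in words if word not in ignored)

affirmative = "a dog and a cat"
negated = "a dog but no cat"

# Dropping negation words makes the two captions identical.
print(toy_encode(affirmative) == toy_encode(negated))  # True

# Keeping them preserves the difference.
print(toy_encode(affirmative, drop_negation=False)
      == toy_encode(negated, drop_negation=False))  # False
```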

“This issue isn’t restricted to terms like ‘no’ and ‘not.’ Regardless of how negation or exclusion is expressed, the models will simply disregard it,” Alhamoud states.

This trend was consistent across every VLM they examined.

“A solvable challenge”

Since VLMs are generally not trained on image captions featuring negation, the researchers generated datasets with negation terms as an initial step toward addressing the issue.

Using a dataset of 10 million image-text caption pairs, they prompted an LLM to propose related captions that specify what is absent from the images, yielding new captions with negation words.
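The recaptioning step can be approximated with a small sketch. Note the substitution: the researchers prompted an LLM, whereas the code below uses fixed string templates so that it runs without any model. The templates, the `add_negation` helper, and the candidate object list are all hypothetical.

```python
import random

# Hypothetical templates standing in for LLM-written negated captions.
TEMPLATES = [
    "{caption}, with no {absent}",
    "{caption}, without any {absent}",
    "{caption}; there is no {absent} in the image",
]

def add_negation(caption, present_objects, candidate_objects, rng):
    """Append a clause naming one candidate object that is absent from the image.
    Returns the caption unchanged if every candidate is already present."""
    absent = sorted(set(candidate_objects) - set(present_objects))
    if not absent:
        return caption
    template = rng.choice(TEMPLATES)
    return template.format(caption=caption, absent=rng.choice(absent))

rng = random.Random(0)  # fixed seed so repeated runs give the same captions

# Hypothetical (caption, objects in the image) pairs.
pairs = [
    ("a dog leaping over a fence", {"dog", "fence"}),
    ("a cat sleeping on a sofa", {"cat", "sofa"}),
]
related_objects = ["dog", "cat", "ball", "leash"]

for caption, objects in pairs:
    print(add_negation(caption, objects, related_objects, rng))
```

Fixed templates like these are exactly the kind of stilted phrasing a real pipeline would want to avoid, which is one reason an LLM is a better fit for this step than string formatting.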

They had to take particular care that these synthetic captions still read naturally; otherwise, a VLM trained on them could fail in the real world when faced with more complex captions written by humans.

They found that fine-tuning VLMs with their dataset led to performance gains across the board. It improved the models’ image retrieval abilities by about 10 percent, while also boosting performance on the multiple-choice question answering task by about 30 percent.

“However, our solution is not flawless. We are merely recaptioning datasets, a form of data augmentation. We haven’t even modified how these models function, yet we hope this serves as a signal that this is a solvable issue and others can take our solution and refine it,” Alhamoud remarks.

At the same time, he hopes their work encourages more users to think about the problem they want to use a VLM to solve and to design some examples to test it before deployment.

In the future, the researchers could expand on this work by teaching VLMs to process text and images separately, which may improve their ability to understand negation. They could also develop additional datasets of image-caption pairs tailored to specific applications, such as health care.