
When ChatGPT or Gemini gives what seems to be an authoritative answer to your pressing questions, you may not realize how much data it draws on to produce that response. Like other widely used generative artificial intelligence (AI) systems, these chatbots rely on backbone systems called foundation models, which train on billions, or even trillions, of data points.

In a similar vein, engineers hope to build foundation models that teach a range of robots new skills such as picking up, moving, and putting down objects in places like homes and factories. The problem is that instructional data is difficult to collect and to transfer across robotic systems. You could teach a system by operating the hardware manually, step by step, with technology like virtual reality (VR), but that can be time-consuming. Training on videos from the internet is less instructive, because the clips do not provide a step-by-step, specialized task walk-through for particular robots.

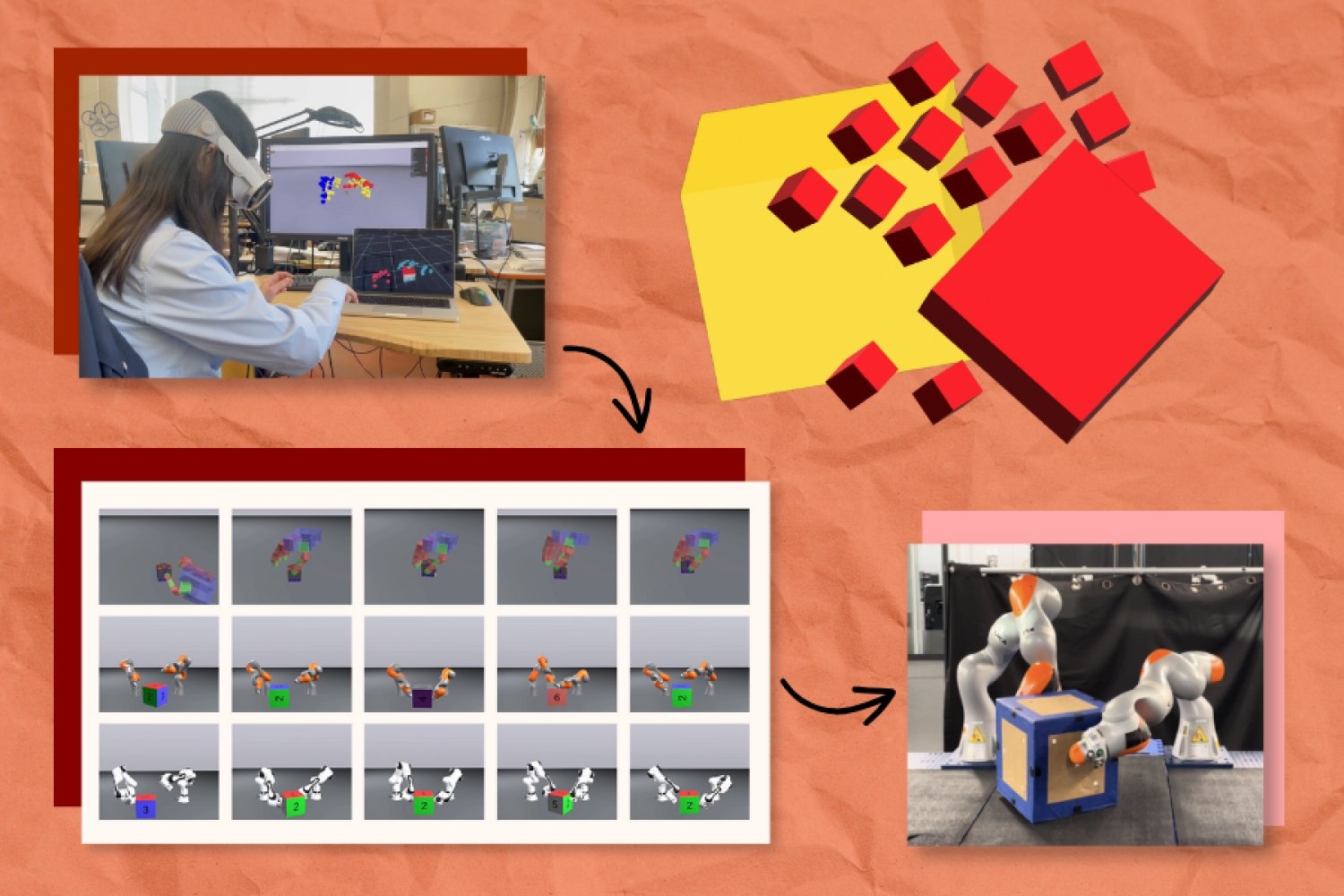

A simulation-based method called “PhysicsGen,” from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Robotics and AI Institute, tailors robot training data to help robots find the most effective movements for a particular task. The system can turn a few dozen VR demonstrations into nearly 3,000 simulations per machine. These high-quality instructions are then mapped to the precise configurations of machines such as robotic arms and hands.

PhysicsGen generates data that generalizes to specific robots and conditions through a three-step process. First, a VR headset tracks how humans manipulate objects like blocks with their hands. These interactions are mapped in a 3D physics simulator at the same time, with the key points of the hands shown as small spheres that mirror the person's gestures. For instance, if you flipped a toy over, you’d see 3D shapes representing different parts of your hands rotating a virtual version of that object.

The pipeline then remaps these points to a 3D model of a specific machine (such as a robotic arm), moving them to the precise “joints” where that system twists and turns. Finally, PhysicsGen uses trajectory optimization (essentially simulating the most efficient motions to complete a task) so the robot knows the best ways to do things like repositioning a box.
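To make the idea of trajectory optimization concrete, here is a minimal sketch in Python. It is not PhysicsGen's optimizer, which works with full robot dynamics and contact; it is a toy, one-dimensional stand-in under stated assumptions. The function names (`smoothness_cost`, `optimize_trajectory`), the cost (sum of squared steps between waypoints), and the plain gradient-descent solver are all hypothetical choices made for illustration.

```python
def smoothness_cost(traj):
    """Sum of squared steps between consecutive waypoints (lower is smoother)."""
    return sum((b - a) ** 2 for a, b in zip(traj, traj[1:]))


def optimize_trajectory(start, goal, n_steps=10, iters=2000, lr=0.2):
    """Toy trajectory optimization by gradient descent (illustrative assumption).

    The endpoints stay fixed at `start` and `goal`; only the interior
    waypoints are adjusted to reduce `smoothness_cost`.
    """
    # Deliberately poor initial guess: sit at the start, then jump to the goal.
    traj = [float(start)] * n_steps + [float(goal)]
    for _ in range(iters):
        updated = traj[:]
        for i in range(1, n_steps):
            # Gradient of the cost with respect to waypoint i.
            grad = 2 * (traj[i] - traj[i - 1]) - 2 * (traj[i + 1] - traj[i])
            updated[i] = traj[i] - lr * grad
        traj = updated
    return traj


if __name__ == "__main__":
    initial = [0.0] * 10 + [1.0]
    optimized = optimize_trajectory(0.0, 1.0)
    print("initial cost:  ", smoothness_cost(initial))
    print("optimized cost:", smoothness_cost(optimized))
    print("waypoints:     ", [round(x, 3) for x in optimized])
```

With this cost, the waypoints settle toward an evenly spaced path between the start and the goal. A real pipeline would replace the cost with terms for the robot's dynamics, contacts, and task goal, but the structure (start from a rough guess, then refine it against an objective) is the same.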

Each simulation is a detailed training data point that walks a robot through potential ways to handle objects. When built into a policy (the action plan the robot follows), this data gives the machine several ways to approach a task, so it can try a different motion if one does not work.

“We’re generating robot-specific data without requiring humans to re-record tailored demonstrations for each device,” says Lujie Yang, an MIT PhD candidate in electrical engineering and computer science and CSAIL affiliate who is the lead author of a new paper describing the project. “We’re scaling the data in an autonomous and efficient manner, making task instructions applicable to a broader array of machines.”

Generating so many instructional trajectories could eventually help engineers build a massive dataset to guide machines like robotic arms and dexterous hands. For example, the pipeline might help two robotic arms collaborate on picking up warehouse items and placing them in the right boxes for delivery. The system could also guide two robots working together in a household on tasks such as putting away cups.

PhysicsGen can also convert data designed for older robots or different environments into useful instructions for new machines. “Although this data was gathered for a specific type of robot, we can rejuvenate these earlier datasets to enhance their general utility,” adds Yang.

Addition by multiplication

PhysicsGen transformed just 24 human demonstrations into thousands of simulated instances, assisting both digital and physical robots in reorienting objects.

Yang and her colleagues first tested their pipeline in a virtual experiment in which a floating robotic hand had to rotate a block into a target position. The digital robot completed the task with 81 percent accuracy after training on PhysicsGen’s large dataset, a 60 percent improvement over a baseline that learned only from human demonstrations.

The researchers also found that PhysicsGen could improve how virtual robotic arms collaborate to manipulate objects. Their system generated additional training data that helped two pairs of robots complete tasks as much as 30 percent more often than a purely human-taught baseline.

In an experiment with two real-world robotic arms, the researchers observed similar improvements as the machines worked together to flip a large box into its designated position. When the robots deviated from the intended trajectory or mishandled the object, they were able to recover mid-task by referencing alternative trajectories from their library of instructional data.
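One simple way to picture this kind of recovery is a nearest-neighbor lookup over stored trajectories. The sketch below is only an illustration under stated assumptions: in the work described here the robots follow a learned policy trained on the generated data, not an explicit lookup table. The function names (`nearest_recovery`, `remaining_plan`), the two-number state (box position and tilt), and the sample `library` are all hypothetical.

```python
import math


def nearest_recovery(state, library):
    """Return (trajectory index, waypoint index) of the stored waypoint
    closest to the robot's current state, using Euclidean distance."""
    best = None
    best_dist = math.inf
    for t_idx, traj in enumerate(library):
        for w_idx, waypoint in enumerate(traj):
            dist = math.dist(state, waypoint)
            if dist < best_dist:
                best_dist = dist
                best = (t_idx, w_idx)
    return best


def remaining_plan(state, library):
    """Continue along the closest stored trajectory from its nearest waypoint."""
    t_idx, w_idx = nearest_recovery(state, library)
    return library[t_idx][w_idx:]


# Hypothetical library: each waypoint is (box position, box tilt).
library = [
    [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)],  # slide the box without tilting
    [(0.0, 0.0), (0.5, 0.5), (1.0, 0.0)],  # tilt the box on the way over
]

if __name__ == "__main__":
    # The robot meant to slide the box but has knocked it into a tilt.
    disturbed_state = (0.4, 0.45)
    print(remaining_plan(disturbed_state, library))
```

Here the disturbed state sits closest to the middle waypoint of the second trajectory, so the robot would switch to the "tilt" plan and finish from there. The more varied the library, the more likely that some stored trajectory passes near wherever the robot ends up after a mistake.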

Senior author Russ Tedrake, who is the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering at MIT, says that this imitation-based data generation technique combines the strengths of human demonstration with the power of robot motion planning algorithms.

“Even a single human demonstration can significantly ease the motion planning challenge,” says Tedrake, who is also a senior vice president at the Toyota Research Institute and a CSAIL principal investigator. “In the future, perhaps the foundation models will be able to supply this information, and this form of data generation technique will create a post-training framework for that model.”

The future of PhysicsGen

Soon, PhysicsGen may be extended to a new domain: broadening the tasks a machine can perform.

“We would like to employ PhysicsGen to instruct a robot to pour water when it has only been trained to organize dishes, for instance,” explains Yang. “Our pipeline not only generates dynamically feasible motions for known tasks; it also holds the potential to create a diverse collection of physical interactions that we believe can serve as foundational elements for executing entirely new tasks a human hasn’t demonstrated.”

Generating large amounts of widely applicable training data could eventually help build a foundation model for robots, though the MIT researchers caution that this is a somewhat distant goal. The CSAIL-led team is exploring how PhysicsGen can draw on vast, unstructured resources, such as internet videos, as sources for simulation. The goal is to convert everyday visual content into rich, robot-ready data that could teach machines to perform tasks no one has explicitly demonstrated to them.

Yang and her colleagues also aim to make PhysicsGen more useful for robots with diverse shapes and configurations. To do that, they plan to use datasets containing demonstrations by real robots, which capture how robotic joints move instead of human ones.

The researchers also plan to incorporate reinforcement learning, in which an AI system learns through trial and error, so that PhysicsGen can expand its dataset beyond human-provided examples. They may also augment their pipeline with advanced perception techniques that help a robot see and interpret its environment, allowing the machine to analyze and adapt to the complexities of the physical world.

For now, PhysicsGen shows how AI can help teach different robots to manipulate objects within the same category, particularly rigid ones. The pipeline may soon help robots find the best ways to handle soft items (like fruit) and deformable ones (like clay), though those interactions are not yet easy to simulate.

Yang and Tedrake co-authored the paper with two CSAIL colleagues: co-lead author and MIT PhD student Hyung Ju “Terry” Suh SM ’22 and MIT PhD student Bernhard Paus Græsdal. Researchers from the Robotics and AI Institute, including Tong Zhao ’22, MEng ’23, Tarik Kelestemur, Jiuguang Wang, and Tao Pang PhD ’23, also contributed as authors. Their work received support from the Robotics and AI Institute and Amazon.

The researchers recently showcased their findings at the Robotics: Science and Systems conference.
