For a robot, the real world is a lot to take in. Making sense of every data point in a scene can take a great deal of computational effort and time. Using that information to then decide how best to help a human is an even harder problem.

Now, MIT roboticists have found a way to cut through the data noise, helping robots focus on the features in a scene that are most relevant for assisting humans.

Their approach, aptly named “Relevance,” enables a robot to use cues in a scene, such as audio and visual information, to determine a human’s objective and then quickly identify the objects most likely to be relevant to fulfilling that objective. The robot then carries out a set of maneuvers to safely offer the relevant objects or actions to the human.

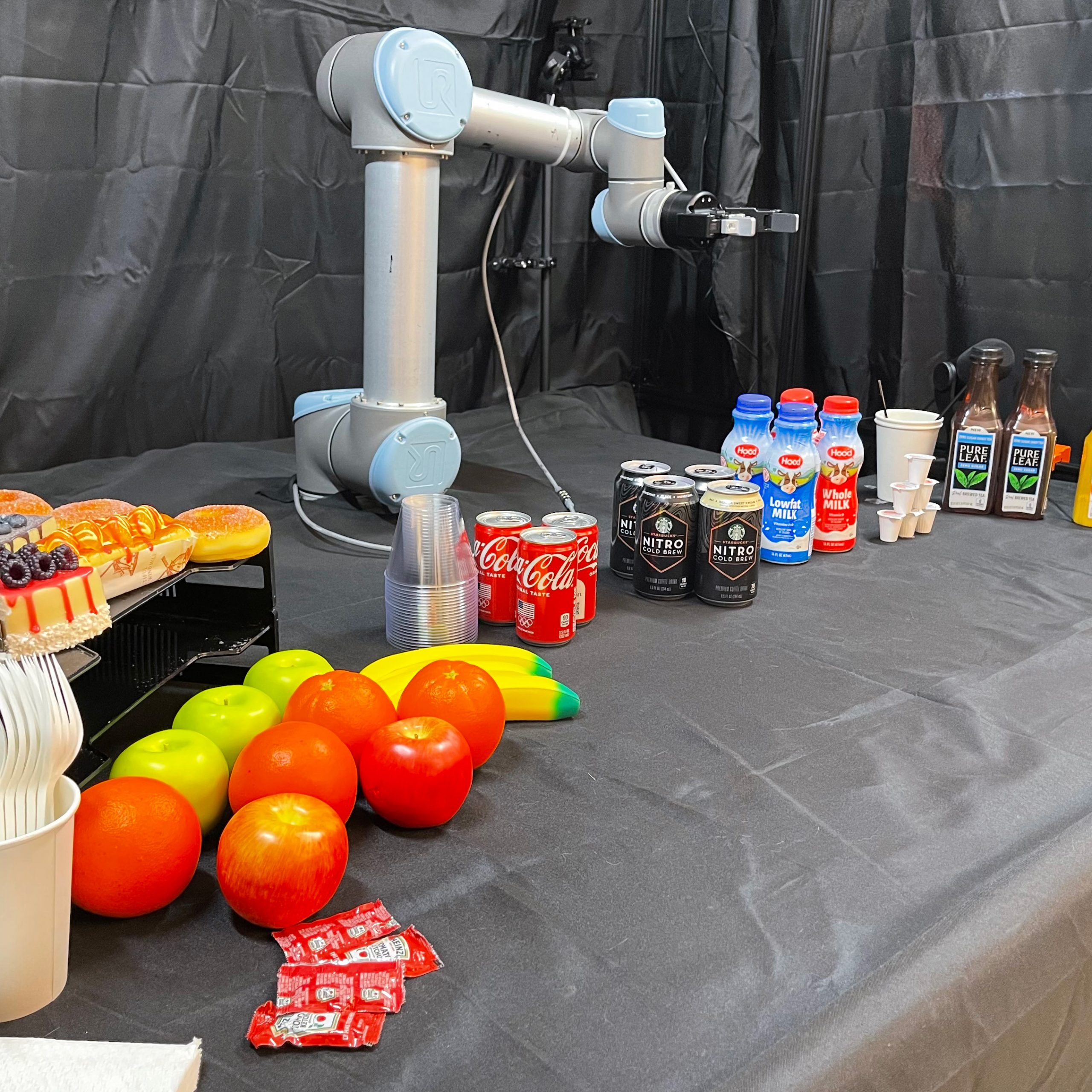

The researchers demonstrated the approach in an experiment that simulated a conference breakfast buffet. They set up a table with assorted fruits, drinks, snacks, and tableware, along with a robotic arm fitted with a microphone and camera. Applying the Relevance approach, they showed that the robot could correctly identify a human’s objective and appropriately assist them in different scenarios.

In one case, the robot picked up on visual cues of a human reaching for a can of prepared coffee and quickly handed the person milk and a stir stick. In another, the robot picked up on a conversation between two people talking about coffee and offered them a can of coffee and creamer.

Overall, the robot predicted a human’s objective with 90 percent accuracy and identified relevant objects with 96 percent accuracy. The method also improved the robot’s safety, reducing the number of collisions by more than 60 percent compared with carrying out the same tasks without it.

“This method of enabling relevance could greatly facilitate a robot’s interaction with humans,” says Kamal Youcef-Toumi, a mechanical engineering professor at MIT. “A robot wouldn’t need to inquire with a human about what they require. It would simply absorb information from the environment to determine how to assist.”

Youcef-Toumi’s team is exploring how robots programmed with Relevance can help in smart manufacturing and warehouse settings, where they envision robots working alongside and intuitively assisting humans.

Youcef-Toumi, along with graduate students Xiaotong Zhang and Dingcheng Huang, will present the new method at the IEEE International Conference on Robotics and Automation (ICRA) this May. The work builds on another paper presented at ICRA the previous year.

Establishing focus

The team’s approach is inspired by our own ability to gauge what is relevant in daily life. Humans can filter out distractions and focus on what matters thanks to a region of the brain known as the Reticular Activating System (RAS). The RAS is a bundle of neurons in the brainstem that subconsciously prunes away irrelevant stimuli so that a person can consciously perceive the relevant ones. It helps prevent sensory overload, keeping us from fixating on every single item on a kitchen counter and instead helping us focus on pouring a cup of coffee.

“The remarkable aspect is that these neuron clusters filter out everything unnecessary, directing the brain to concentrate on what is significant at that moment,” Youcef-Toumi explains. “That’s essentially the core of our proposal.”

He and his team developed a robotic system that broadly mimics the RAS’s ability to selectively process and filter information. The approach consists of four main phases. The first is a watch-and-learn “perception” stage, during which a robot takes in audio and visual cues, for instance from a microphone and camera, that are continuously fed into an AI “toolkit.” This toolkit can include a large language model (LLM) that processes audio conversations to identify keywords and phrases, along with various algorithms that detect and classify objects, humans, physical actions, and task objectives. The AI toolkit is designed to run continuously in the background, much like the subconscious filtering performed by the brain’s RAS.

The second phase is a “trigger check,” a periodic check that the system performs to assess whether anything important is happening, such as a human being present. If a human has entered the environment, the system’s third phase kicks in. This phase is the heart of the team’s system: it determines which features in the environment are most likely relevant to assisting the human.

To establish relevance, the researchers developed an algorithm that takes in the real-time predictions made by the AI toolkit. For instance, if the LLM picks up the keyword “coffee,” an action-classifying algorithm may label a person reaching for a cup as having the objective of “brewing coffee.” The Relevance method would use this information to first determine the “class” of objects most likely to be relevant to the objective of “brewing coffee.” This might automatically filter out classes such as “fruits” and “snacks” in favor of “cups” and “creamers.” The algorithm would then narrow down further, within the relevant classes, to the most relevant “elements.” For example, based on visual cues from the environment, the system may label the cup closest to the person as more relevant, and more helpful, than a cup that is farther away.
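The two-level narrowing described above, first by class and then by element, can be illustrated with a short Python sketch. This is not the researchers’ algorithm: the relevance scores, the 0.5 threshold, the `Item` type, and the use of distance to the person as the element-level criterion are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    name: str
    cls: str                       # object class, e.g. "cups"
    position: tuple[float, float]  # hypothetical table coordinates, in meters

# Hypothetical goal -> class relevance scores. In the article, such
# predictions would come from the AI toolkit; these numbers are made up.
CLASS_RELEVANCE = {
    "brewing coffee": {"cups": 0.9, "creamers": 0.8, "fruits": 0.1, "snacks": 0.2},
}

def relevant_classes(goal, threshold=0.5):
    """Class level: keep only classes scoring at or above the threshold."""
    scores = CLASS_RELEVANCE.get(goal, {})
    return {cls for cls, score in scores.items() if score >= threshold}

def rank_elements(items, goal, human_position, threshold=0.5):
    """Element level: within the relevant classes, rank nearer items first."""
    classes = relevant_classes(goal, threshold)
    candidates = [item for item in items if item.cls in classes]
    return sorted(candidates, key=lambda item: math.dist(item.position, human_position))

table = [
    Item("near cup", "cups", (0.2, 0.1)),
    Item("far cup", "cups", (1.5, 0.9)),
    Item("apple", "fruits", (0.1, 0.1)),
    Item("creamer", "creamers", (0.5, 0.2)),
]
ranked = rank_elements(table, "brewing coffee", human_position=(0.0, 0.0))
print([item.name for item in ranked])
```

In this toy setup the apple is dropped at the class level even though it sits closest to the person, and the nearer cup outranks the farther one at the element level.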

In the fourth and final phase, the robot takes the objects identified as relevant and plans a path to physically reach them and offer them to the human.
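As a rough picture of how the four phases might fit together, the following Python sketch strings them into a single loop. Everything here is hypothetical: the `Observation` type stands in for already-labeled output of the perception phase, `check_every` is an invented parameter for how often the trigger check runs, and the handover "plan" is a list of strings rather than real motion planning.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Observation:
    """Stand-in for one step of labeled perception output (phase 1)."""
    human_present: bool
    goal: Optional[str]
    items: list[str]

def trigger_check(obs: Observation) -> bool:
    """Phase 2: is something important happening, such as a human present?"""
    return obs.human_present

def select_relevant(obs: Observation, goal_items: dict[str, set[str]]) -> list[str]:
    """Phase 3: keep only the items associated with the inferred goal."""
    wanted = goal_items.get(obs.goal, set())
    return [item for item in obs.items if item in wanted]

def plan_handover(items: list[str]) -> list[str]:
    """Phase 4 placeholder: a real system would plan a safe path here."""
    return [f"hand over {item}" for item in items]

def run(observations, goal_items, check_every=2):
    actions = []
    for step, obs in enumerate(observations):
        # Perception is assumed to run on every step; the trigger check
        # runs only on every `check_every`-th step.
        if step % check_every != 0 or not trigger_check(obs):
            continue
        actions.extend(plan_handover(select_relevant(obs, goal_items)))
    return actions

goal_items = {"brewing coffee": {"cup", "creamer"}}  # made-up association
stream = [
    Observation(False, None, ["cup", "apple"]),
    Observation(True, "brewing coffee", ["cup", "apple"]),
    Observation(True, "brewing coffee", ["cup", "creamer", "apple"]),
]
print(run(stream, goal_items))
```

The point of the structure is that the more expensive relevance and planning steps run only after a trigger check succeeds, while perception keeps running in the background.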

Assistance mode

The researchers tested the new system in experiments that simulate a conference breakfast buffet. They based this scenario on the publicly available Breakfast Actions Dataset, which comprises videos and images of typical activities that people perform at breakfast time, such as brewing coffee, cooking pancakes, making cereal, and frying eggs. The actions in each video and image are labeled, along with the overall objective (frying eggs versus brewing coffee).

Using this dataset, the team tested various algorithms in their AI toolkit so that, when given a person’s actions in a new scene, the algorithms could accurately label and classify the human’s tasks and objectives, along with the associated relevant objects.

During their evaluations, they set up a robotic arm and gripper and directed the system to aid humans as they approached a table laden with diverse drinks, snacks, and dining ware. They observed that when no humans were in sight, the robot’s AI toolkit operated continuously in the background, labeling and categorizing the items on the table.

When the robot detected a human during a trigger check, it snapped to attention, switching on its Relevance phase and quickly identifying the objects in the scene most likely to be relevant to the human’s objective, as determined by the AI toolkit.

“Relevance can navigate the robot to generate seamless, intelligent, safe, and effective assistance in a highly dynamic setting,” remarks co-author Zhang.

Looking ahead, the team hopes to apply the system to scenarios that resemble workplace and warehouse environments, as well as to other tasks and objectives typically carried out in household settings.

“I would like to test this system in my home to see, for example, if I’m reading the newspaper, it could bring me coffee. If I’m doing laundry, it could bring me a detergent pod. If I’m engaged in repairs, it could hand me a screwdriver,” Zhang says. “Our vision is to enable human-robot interactions that can be significantly more natural and fluid.”

This research was made possible by the support and partnership of King Abdulaziz City for Science and Technology (KACST) through the Center for Complex Engineering Systems at MIT and KACST.