
In an office at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), a soft robotic hand carefully curls its fingers to grasp a small object. The intriguing part isn’t the mechanical design or embedded sensors; in fact, the hand contains none. Instead, the entire system relies on a single camera that watches the robot’s movements and uses that visual data to control it.

This capability comes from a new system developed by CSAIL scientists that offers a different perspective on robotic control. Rather than relying on hand-designed models or complex sensor arrays, it allows robots to learn how their bodies respond to control commands solely through vision. The approach, called Neural Jacobian Fields (NJF), gives robots a kind of bodily self-awareness. An open-access paper about the work was published in Nature on June 25.

“This work points to a shift from programming robots to teaching them,” says Sizhe Lester Li, a PhD student in electrical engineering and computer science at MIT, a CSAIL affiliate, and the lead researcher on the project. “Today, many robotics tasks require extensive engineering and coding. In the future, we envision showing a robot what to do and letting it learn how to achieve the goal autonomously.”

The motivation stems from a simple but powerful reframing: the main barrier to affordable, flexible robotics isn’t hardware; it’s the ability to control what that hardware can do, which could be achieved in multiple ways. Traditional robots are built to be rigid and sensor-rich, which makes it easier to construct a digital twin, a precise mathematical replica used for control. But when a robot is soft, deformable, or irregularly shaped, those assumptions break down. Rather than forcing robots to match our models, NJF flips the script: it gives robots the ability to learn their own internal model from observation.

Observe and learn

This decoupling of modeling from hardware design could greatly expand the design space for robotics. In soft and bio-inspired robots, designers often embed sensors or reinforce parts of the structure just to make modeling feasible. NJF lifts that constraint: the system needs no onboard sensors or design changes to enable control. Designers are freer to explore unconventional, unconstrained morphologies without worrying about whether they will be able to model or control them later.

“Think about how you learn to control your fingers: you wiggle, you observe, you adapt,” says Li. “That’s what our system does. It experiments with random actions and figures out which controls move which parts of the robot.”

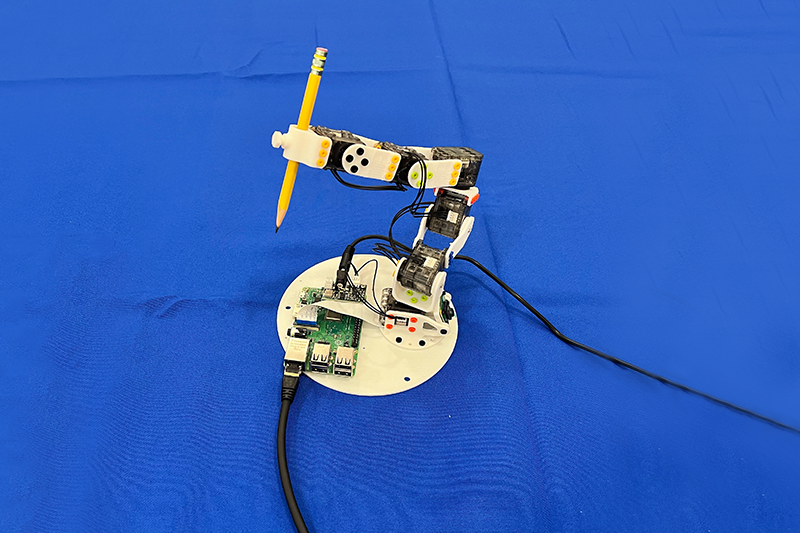

The system has proven robust across a range of robot types. The team tested NJF on a pneumatic soft robotic hand capable of pinching and grasping, a rigid Allegro hand, a 3D-printed robotic arm, and even a rotating platform with no embedded sensors. In every case, the system learned both the robot’s shape and how it responded to control signals, purely from vision and random motion.

The researchers see potential far beyond the lab. Robots equipped with NJF could one day perform agricultural tasks with centimeter-level localization accuracy, operate on construction sites without elaborate sensor arrays, or navigate dynamic environments where traditional methods break down.

At the core of NJF is a neural network that captures two intertwined aspects of a robot’s embodiment: its three-dimensional geometry and its sensitivity to control inputs. The system builds on neural radiance fields (NeRF), a technique that reconstructs 3D scenes from images by mapping spatial coordinates to color and density values. NJF extends this approach by learning not only the robot’s shape but also a Jacobian field, a function that predicts how any point on the robot’s body moves in response to motor commands.
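To make the idea of a Jacobian field concrete: for a 3D point x on the robot and a small change in motor commands Δu, the field returns a matrix J(x) whose product with Δu is the point’s predicted displacement. The sketch below illustrates only that query. It assumes a robot with two motors and replaces NJF’s trained neural network with a hand-written function, `toy_jacobian_field`, which is hypothetical: motor 0 acts like a hinge rotating points about the y axis, and motor 1 acts like a slider along y.

```python
from typing import List, Sequence

Matrix = List[List[float]]  # row-major


def toy_jacobian_field(point: Sequence[float]) -> Matrix:
    """Hypothetical stand-in for a learned Jacobian field (not NJF's network).

    Returns a 3 x 2 matrix. Column j gives how the 3D point moves per unit
    change in motor command j.
    """
    x, _y, z = point
    return [
        [z, 0.0],    # motor 0: a small rotation about the y axis moves x by +z ...
        [0.0, 1.0],  # motor 1: pure translation along y
        [-x, 0.0],   # ... and moves z by -x
    ]


def predict_displacement(point: Sequence[float], delta_u: Sequence[float]) -> List[float]:
    """Predicted motion of `point` for the command change `delta_u`: J(point) @ delta_u."""
    jac = toy_jacobian_field(point)
    return [sum(row[c] * delta_u[c] for c in range(len(delta_u))) for row in jac]


if __name__ == "__main__":
    print(predict_displacement([1.0, 0.0, 2.0], [0.1, 0.5]))  # prints [0.2, 0.5, -0.1]
```

Because the field is queried point by point, the same call can in principle be evaluated over every point of the reconstructed body, which is what lets a single model describe how the whole robot moves.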

To train the model, the robot performs random motions while multiple cameras record the outcomes. No human supervision or prior knowledge of the robot’s structure is required; the system simply infers the relationship between control signals and motion by watching.
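The self-supervised idea in this paragraph can be illustrated in a stripped-down form. The sketch below assumes that the 3D displacement of a single tracked point has already been measured after each random command; NJF itself learns from multi-view camera images through its NeRF-style reconstruction, not from tracked points. Given those (command change, displacement) pairs, an ordinary least-squares fit recovers the point’s 3 x 2 Jacobian without any model of the robot’s structure. The helper names and the “hidden” Jacobian used to simulate data are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], List[float]]  # (delta_u, observed delta_p)


def collect_random_motions(true_jac: Sequence[Sequence[float]], n: int,
                           noise: float, seed: int = 0) -> List[Sample]:
    """Simulate random two-motor commands and noisy observed displacements.

    `true_jac` is a hypothetical ground truth used only to generate data;
    the fitting step never sees it.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        du = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
        dp = [sum(row[c] * du[c] for c in range(2)) + rng.gauss(0.0, noise)
              for row in true_jac]
        samples.append((du, dp))
    return samples


def fit_point_jacobian(samples: List[Sample]) -> List[List[float]]:
    """Least-squares estimate of J (3 x 2) minimizing sum ||J du - dp||^2."""
    # Normal equations (A^T A) X = A^T B, with rows of A = du and rows of B = dp.
    ata = [[0.0, 0.0], [0.0, 0.0]]
    atb = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    for du, dp in samples:
        for i in range(2):
            for j in range(2):
                ata[i][j] += du[i] * du[j]
            for k in range(3):
                atb[i][k] += du[i] * dp[k]
    det = ata[0][0] * ata[1][1] - ata[0][1] * ata[1][0]
    if abs(det) < 1e-12:
        raise ValueError("the random actions did not exercise both motors")
    inv = [[ata[1][1] / det, -ata[0][1] / det],
           [-ata[1][0] / det, ata[0][0] / det]]
    # X = inv @ atb is 2 x 3 and equals J transposed.
    x = [[sum(inv[i][m] * atb[m][k] for m in range(2)) for k in range(3)]
         for i in range(2)]
    return [[x[0][k], x[1][k]] for k in range(3)]


if __name__ == "__main__":
    hidden = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    data = collect_random_motions(hidden, n=200, noise=0.01)
    print(fit_point_jacobian(data))  # close to `hidden`
```

With noise-free data, a handful of random motions already pins down this linear map; with noisy observations, adding more motions averages the noise out.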

Once training is complete, the robot needs only a single monocular camera for real-time closed-loop control, running at about 12 hertz. This lets it continuously observe itself, plan, and act responsively. That speed makes NJF more practical than many physics-based simulators for soft robots, which are often too computationally intensive for real-time use.
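Once a Jacobian is available, closing the loop amounts to repeatedly comparing where a point is with where it should be and solving for a command change that shrinks the gap. The sketch below uses damped least squares, a common generic choice; it is an assumption for illustration, not necessarily the planner described in the paper. It also assumes a constant Jacobian and a simulated plant whose state is read directly, whereas NJF estimates the robot’s state from the monocular camera at each step. At roughly 12 Hz, each observe-plan-act cycle has about 83 milliseconds to complete.

```python
from typing import List, Sequence

CONTROL_RATE_HZ = 12.0                  # approximate rate reported for NJF
CYCLE_BUDGET_S = 1.0 / CONTROL_RATE_HZ  # about 0.083 s per control cycle


def dls_command(jac: Sequence[Sequence[float]], error: Sequence[float],
                damping: float = 1e-3) -> List[float]:
    """Damped least squares for 2 motors: du = (J^T J + damping*I)^-1 J^T error."""
    rows = range(len(jac))
    a = sum(jac[r][0] * jac[r][0] for r in rows) + damping
    b = sum(jac[r][0] * jac[r][1] for r in rows)
    d = sum(jac[r][1] * jac[r][1] for r in rows) + damping
    g0 = sum(jac[r][0] * error[r] for r in rows)
    g1 = sum(jac[r][1] * error[r] for r in rows)
    det = a * d - b * b  # J^T J is symmetric, so both off-diagonal terms equal b
    return [(d * g0 - b * g1) / det, (a * g1 - b * g0) / det]


def run_closed_loop(jac, start, target, steps=20, gain=0.5):
    """Observe, plan, act; the 'robot' here is a hypothetical linear simulation."""
    position = list(start)
    for _ in range(steps):
        # Observe: in NJF this estimate would come from the camera image.
        error = [t - p for t, p in zip(target, position)]
        # Plan: a command change that reduces the error, scaled by `gain`.
        du = [gain * v for v in dls_command(jac, error)]
        # Act: the simulated point moves by J @ du.
        position = [p + sum(row[c] * du[c] for c in range(2))
                    for p, row in zip(position, jac)]
    return position


if __name__ == "__main__":
    J = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    print(run_closed_loop(J, start=[1.0, 0.0, 2.0], target=[1.6, -0.4, 1.7]))
```

The small `damping` term keeps the solve well-behaved when the Jacobian is nearly singular, for instance when a motor barely moves the tracked point, and a `gain` below 1 trades convergence speed for robustness to model error.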

In early simulations, even simple 2D fingers and sliders were able to learn this mapping from just a few examples. By modeling how specific points deform or shift in response to an action, NJF builds a comprehensive map of controllability. That internal model allows it to generalize motion across the robot’s body, even when the data are noisy or incomplete.

“What’s really interesting is that the system figures out on its own which motors control which parts of the robot,” says Li. “This isn’t programmed in; it emerges naturally through learning, much like a person discovering the buttons on a new device.”
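Li describes the system discovering which motors control which parts of the robot. Given any Jacobian field, one simple way to read that assignment back out is to measure, at each point, how strongly each motor moves it (the norm of the corresponding Jacobian column) and label the point with the dominant motor. The two-finger field below, `toy_hand_jacobian`, is a hypothetical stand-in for a learned field, and this column-norm readout is an illustrative assumption rather than a procedure taken from the paper.

```python
import math
from typing import Callable, List, Optional, Sequence

Matrix = List[List[float]]


def toy_hand_jacobian(point: Sequence[float]) -> Matrix:
    """Hypothetical field for a two-finger gripper with two motors.

    Motor 0 drives the left finger (x < 0) toward +x, motor 1 drives the right
    finger (x >= 0) toward -x, so both close toward x = 0. Points farther from
    the base (larger z) move more.
    """
    x, _y, z = point
    left = 1.0 if x < 0 else 0.0
    right = 1.0 - left
    return [[left * z, -right * z], [0.0, 0.0], [0.0, 0.0]]


def motor_influence(jac: Matrix) -> List[float]:
    """Euclidean norm of each Jacobian column: how far the point moves per unit of each motor."""
    return [math.sqrt(sum(row[c] ** 2 for row in jac)) for c in range(len(jac[0]))]


def assign_parts(points: Sequence[Sequence[float]],
                 field: Callable[[Sequence[float]], Matrix],
                 threshold: float = 1e-6) -> List[Optional[int]]:
    """Label each point with its dominant motor, or None if no motor moves it."""
    labels: List[Optional[int]] = []
    for p in points:
        influence = motor_influence(field(p))
        best = max(range(len(influence)), key=lambda c: influence[c])
        labels.append(best if influence[best] > threshold else None)
    return labels


if __name__ == "__main__":
    pts = [(-1.0, 0.0, 0.5), (1.0, 0.0, 0.5), (0.0, 0.0, 0.0)]
    print(assign_parts(pts, toy_hand_jacobian))  # prints [0, 1, None]
```

The third point sits on the fixed base (z = 0), so neither motor moves it and it receives no label.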

The future is soft

For decades, robotics has favored rigid, easily modeled machines, like the industrial arms found in factories, because their properties simplify control. But the field has been moving toward soft, bio-inspired robots that can adapt to the real world more fluidly. The trade-off? These robots are harder to model.

“Robotics today often feels out of reach because of costly sensors and complex programming. Our goal with Neural Jacobian Fields is to lower the barrier, making robotics affordable, adaptable, and accessible to more people. Vision is a resilient, reliable sensor,” says senior author and MIT Assistant Professor Vincent Sitzmann, who leads the Scene Representation group. “It opens the door to robots that can operate in messy, unstructured environments, from farms to construction sites, without expensive infrastructure.”

“Vision alone can provide the cues needed for localization and control, eliminating the need for GPS, external tracking systems, or complex onboard sensors. This opens the door to robust, adaptive behavior in unstructured environments, from drones navigating indoors or underground without maps to mobile manipulators working in cluttered homes or warehouses, and even legged robots traversing uneven terrain,” says co-author Daniela Rus, MIT professor of electrical engineering and computer science and director of CSAIL. “By learning from visual feedback, these systems develop internal models of their own motion and dynamics, enabling flexible, self-supervised operation where traditional localization methods would fail.”

For now, training NJF requires multiple cameras and must be repeated for each robot, but the researchers are already imagining a more accessible version. In the future, hobbyists could record a robot’s random movements with their phone, much like filming a rental car before driving off, and use that footage to build a control model, with no prior knowledge or special equipment required.

The system does not yet generalize across different robots, and it lacks force or tactile sensing, which limits its effectiveness on contact-rich tasks. But the team is exploring new ways to address these limitations: improving generalization, handling occlusions, and extending the model’s ability to reason over longer spatial and temporal horizons.

“Just as humans develop an intuitive understanding of how their bodies move and respond to commands, NJF gives robots that kind of embodied self-awareness through vision alone,” says Li. “This understanding is a foundation for flexible manipulation and control in real-world environments. Our work, essentially, reflects a broader trend in robotics: moving away from manually programming detailed models toward teaching robots through observation and interaction.”

This paper brought together the computer vision and self-supervised learning expertise of the Sitzmann lab and the soft-robotics expertise of the Rus lab. Li, Sitzmann, and Rus co-authored the paper with CSAIL affiliates Annan Zhang SM ’22, a PhD student in electrical engineering and computer science (EECS); Boyuan Chen, a PhD student in EECS; Hanna Matusik, an undergraduate researcher in mechanical engineering; and Chao Liu, a postdoc in the Senseable City Lab at MIT.

The research received support from the Solomon Buchsbaum Research Fund through MIT’s Research Support Committee, an MIT Presidential Fellowship, the National Science Foundation, and the Gwangju Institute of Science and Technology.
