Researchers at MIT have developed a periodic table that shows how more than 20 classical machine-learning algorithms are connected. The framework sheds light on how scientists could combine strategies from different methods to improve existing AI models or devise new ones.

For example, the researchers employed their framework to merge aspects of two distinct algorithms, resulting in a new image-classification algorithm that outperformed existing leading methods by 8 percent.

The periodic table stems from one key idea: All these algorithms learn a specific kind of relationship between data points. Although each algorithm may do so in a slightly different way, the core mathematics behind each approach is the same.

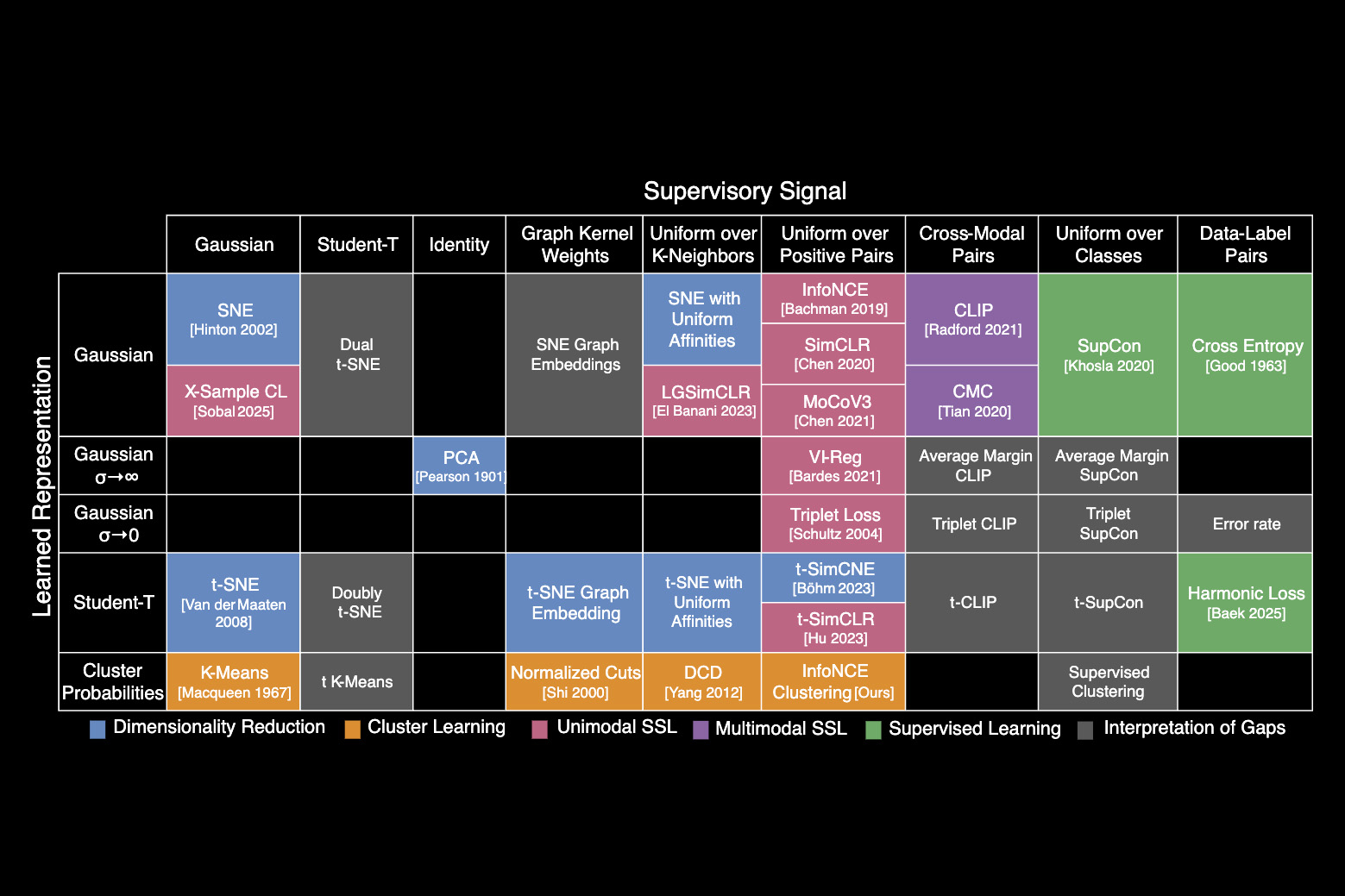

Building on these insights, the researchers identified a unifying equation that underlies many classical AI algorithms. They used this equation to reframe well-known methods and arranged them into a table, categorizing each according to the kinds of relationships it learns.

Just like the periodic table of chemical elements, which originally contained empty squares that scientists later filled, the periodic table of machine learning also has empty spaces. These spaces predict where algorithms should exist but have not yet been discovered.

The table gives researchers a toolkit to design new algorithms without having to rediscover ideas from earlier approaches, says Shaden Alshammari, an MIT graduate student and lead author of a paper on the new framework.

“It’s not merely a metaphor,” Alshammari emphasizes. “We are starting to perceive machine learning as a structured system that represents a domain we can explore rather than just navigating haphazardly.”

She is joined on the paper by John Hershey, a researcher at Google AI Perception; Axel Feldmann, an MIT graduate student; William Freeman, the Thomas and Gerd Perkins Professor of Electrical Engineering and Computer Science and a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Mark Hamilton, also an MIT graduate student and senior engineering manager at Microsoft. The research will be presented at the International Conference on Learning Representations.

An unintentional equation

The researchers did not aim to establish a periodic table of machine learning.

After joining the Freeman Lab, Alshammari began studying clustering, a machine-learning technique that classifies images by learning to group similar images into nearby clusters.

She recognized that the clustering algorithm she analyzed bore similarities to another established machine-learning technique, known as contrastive learning, and started exploring the mathematics in greater depth. Alshammari discovered that these two seemingly unrelated algorithms could be reframed using the same underlying equation.

“We stumbled upon this unifying equation almost by chance. Once Shaden uncovered that it linked two methods, we began envisioning new techniques to incorporate into this framework. Practically every method we considered could be integrated,” Hamilton remarks.

The framework they devised, known as information contrastive learning (I-Con), shows how a wide variety of algorithms can be viewed through the lens of this unifying equation. It covers everything from classification algorithms that can detect spam to the deep learning algorithms that power LLMs.

The equation describes how these algorithms find connections between real data points and then approximate those connections internally.

Each algorithm aims to minimize the discrepancy between the connections it learns to approximate and the real connections in its training data.
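
As a rough illustration of that idea, the sketch below scores a set of learned connections against the real ones. It assumes the discrepancy is measured with an average Kullback-Leibler divergence between row-normalized connection matrices; that choice, the function name `average_kl`, and the toy numbers are assumptions made for illustration, since the article does not state the equation itself.

```python
import numpy as np

def average_kl(p, q, eps=1e-12):
    """Mean KL divergence between matching rows of p and q.

    Row i of each matrix is a probability distribution over the other
    data points: p holds the real connections, q the learned ones.
    KL divergence is an assumed choice of discrepancy measure.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    # eps keeps the logarithm finite where an entry is exactly zero.
    log_ratio = np.log((p + eps) / (q + eps))
    return float(np.mean(np.sum(p * log_ratio, axis=1)))

# Hypothetical connections among three data points.
true_links = np.array([[0.0, 0.9, 0.1],
                       [0.9, 0.0, 0.1],
                       [0.5, 0.5, 0.0]])
good_guess = np.array([[0.0, 0.8, 0.2],
                       [0.8, 0.0, 0.2],
                       [0.5, 0.5, 0.0]])
bad_guess = np.array([[0.0, 0.1, 0.9],
                      [0.1, 0.0, 0.9],
                      [0.5, 0.5, 0.0]])

loss_good = average_kl(true_links, good_guess)
loss_bad = average_kl(true_links, bad_guess)
```

Under these assumptions, the guess that stays closer to the real connections gets the smaller loss, and a guess identical to the real connections scores essentially zero.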

The researchers arranged I-Con as a periodic table that categorizes algorithms by how data points are connected in real datasets and by the primary ways algorithms can approximate those connections.

“The progress was gradual, but once we pinpointed the general structure of this equation, it became easier to incorporate additional methods into our framework,” Alshammari explains.

A tool for exploration

As they assembled the table, the researchers began to see gaps where algorithms could exist but had not yet been invented.

To fill one such gap, the researchers borrowed ideas from contrastive learning and applied them to image clustering. The result was a new algorithm that classified unlabeled images with 8 percent greater accuracy than another state-of-the-art approach.
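
To make this kind of combination more concrete, here is a minimal sketch, not the researchers' actual algorithm. It assumes the real connections come from known neighbor pairs, as in contrastive learning, while the learned connections are derived from soft cluster assignments. The helper names, the KL-based loss, and the toy data are all hypothetical.

```python
import numpy as np

def cluster_connections(assign, eps=1e-12):
    """Turn soft cluster assignments (points x clusters) into connections.

    Two points are strongly connected when they are likely to share a
    cluster. This is an illustrative choice, not taken from the article.
    """
    sim = assign @ assign.T        # large when two points share a cluster
    np.fill_diagonal(sim, 0.0)     # a point is not its own neighbor
    return sim / (sim.sum(axis=1, keepdims=True) + eps)

def average_kl(p, q, eps=1e-12):
    """Mean KL divergence between matching rows of p and q (assumed loss)."""
    return float(np.mean(np.sum(p * np.log((p + eps) / (q + eps)), axis=1)))

# Contrastive-style targets: points 0 and 1 are a pair, as are 2 and 3.
pairs = np.array([[0.0, 1.0, 0.0, 0.0],
                  [1.0, 0.0, 0.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0],
                  [0.0, 0.0, 1.0, 0.0]])

# Two candidate clusterings: one keeps pairs together, one splits them.
together = np.array([[0.9, 0.1], [0.9, 0.1], [0.1, 0.9], [0.1, 0.9]])
split = np.array([[0.9, 0.1], [0.1, 0.9], [0.9, 0.1], [0.1, 0.9]])

loss_together = average_kl(pairs, cluster_connections(together))
loss_split = average_kl(pairs, cluster_connections(split))
```

In this toy setup, the clustering that keeps paired points in the same cluster receives the lower loss, so minimizing the loss over the assignments would push paired images into shared clusters.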

They also used I-Con to show how a data debiasing technique developed for contrastive learning could be used to boost the accuracy of clustering algorithms.
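
The article does not spell out how the debiasing technique works. One simple form, assumed here purely for illustration, blends the target connections with a uniform distribution so that no pair of points is treated as definitely unconnected. The function name `debias_connections` and the parameter `alpha` are hypothetical.

```python
import numpy as np

def debias_connections(p, alpha=0.2):
    """Blend each row of p with a uniform distribution over all points.

    alpha is the assumed fraction of probability mass spread evenly;
    alpha=0 leaves the original connections unchanged.
    """
    p = np.asarray(p, dtype=float)
    n_points = p.shape[1]
    uniform = np.full_like(p, 1.0 / n_points)
    return (1.0 - alpha) * p + alpha * uniform

# Hard targets: each point is connected to exactly one other point.
hard_targets = np.array([[0.0, 1.0, 0.0],
                         [1.0, 0.0, 0.0],
                         [0.0, 1.0, 0.0]])

softened = debias_connections(hard_targets, alpha=0.2)
```

Each row of `softened` still sums to one, but pairs that the hard targets marked as unconnected now keep a small share of probability. This hedges against connections that are real but missing from the labels.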

Moreover, the flexible periodic table lets researchers add new rows and columns to represent additional types of connections between data points.

Ultimately, having I-Con as a guide could help machine learning scientists think outside the box, encouraging them to combine ideas in ways they might not have otherwise considered, Hamilton says.

“We’ve illustrated that just one elegantly crafted equation, grounded in the science of information, yields rich algorithms spanning a century of machine learning research. This paves the way for numerous new avenues of exploration,” he adds.

“Perhaps the most daunting aspect of being a machine-learning researcher today is the seemingly limitless influx of papers published annually. In this landscape, papers that unify and connect existing algorithms hold significant importance, yet they are exceedingly rare. I-Con serves as a prime example of such a cohesive approach and hopefully will motivate others to apply a similar methodology in other realms of machine learning,” comments Yair Weiss, a professor in the School of Computer Science and Engineering at the Hebrew University of Jerusalem, who did not participate in this research.

This research received partial funding from the Air Force Artificial Intelligence Accelerator, the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, and Quanta Computer.