
People who are blind or have low vision have long been shut out of 3D modeling software, which relies on sight to manipulate, rotate, and examine shapes onscreen.

Now a research team spanning several universities has developed A11yShape, a tool that lets blind and low-vision programmers independently create, inspect, and refine 3D models.

The team includes Anhong Guo, an assistant professor of electrical engineering and computer science at the University of Michigan, along with researchers from the University of Texas at Dallas, the University of Washington, Purdue University, and other partner institutions. Among them is Gene S-H Kim of Stanford University, who is a member of the blind and low-vision community.

A11yShape combines OpenSCAD, a code-based 3D modeling editor, with the large language model GPT-4o. In OpenSCAD, users build 3D shapes by writing code instead of dragging objects with a mouse. For instance, `cylinder(h=20, d=5);` instantly generates a cylinder 20 units tall and 5 units in diameter, which can then be rotated onscreen and prepared for 3D printing.

For a blind user, however, that rendered model is just a silent image on the screen. They can write the code but have no way to tell whether the shape is tall or short, where its parts sit, or whether something is misaligned.
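Some of that spatial information is, in principle, recoverable from the code alone. The sketch below is a hypothetical illustration of that idea, not how A11yShape works (A11yShape relies on GPT-4o and rendered views). It computes axis-aligned bounding boxes for OpenSCAD's `cube` and `cylinder` primitives under their default `center=false` placement, applies a `translate` offset, and reports a vertical gap between two parts. The `Box` type, the helper names, and the "tall, narrow" threshold are all invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box: per-axis (min, max), in OpenSCAD units."""
    lo: tuple[float, float, float]
    hi: tuple[float, float, float]

    def size(self) -> tuple[float, float, float]:
        return tuple(h - l for l, h in zip(self.lo, self.hi))

    def translate(self, v: tuple[float, float, float]) -> "Box":
        # Mirrors OpenSCAD's translate([x, y, z]) applied to the whole shape.
        return Box(tuple(a + b for a, b in zip(self.lo, v)),
                   tuple(a + b for a, b in zip(self.hi, v)))


def cube_box(size: tuple[float, float, float]) -> Box:
    # cube([x, y, z]) with the default center=false spans [0, x] x [0, y] x [0, z].
    return Box((0.0, 0.0, 0.0), tuple(float(s) for s in size))


def cylinder_box(h: float, d: float) -> Box:
    # cylinder(h=h, d=d) with center=false: a circle of diameter d centred
    # on the z-axis, extruded from z=0 up to z=h.
    r = d / 2
    return Box((-r, -r, 0.0), (r, r, float(h)))


def describe(name: str, box: Box) -> str:
    """Turn a bounding box into a short plain-language summary (arbitrary threshold)."""
    w, dpt, h = box.size()
    shape = "tall, narrow" if h > 2 * max(w, dpt) else "compact"
    return f"{name}: {shape}, {w:g} wide, {dpt:g} deep, {h:g} high, base at z={box.lo[2]:g}"


def vertical_gap(lower: Box, upper: Box) -> float:
    # Positive result = empty space between the top of `lower` and the bottom
    # of `upper`, e.g. a part floating above the body it should rest on.
    return upper.lo[2] - lower.hi[2]


if __name__ == "__main__":
    base = cube_box((10, 10, 2))
    post = cylinder_box(h=20, d=5).translate((5, 5, 3))  # placed 1 unit too high
    print(describe("post", post))
    print("gap between base and post:", vertical_gap(base, post))
```

This only works for simple, unrotated primitives; anything involving rotations, booleans, or modules quickly outgrows hand-written rules, which is part of why a system would turn to rendered views and a multimodal model instead.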

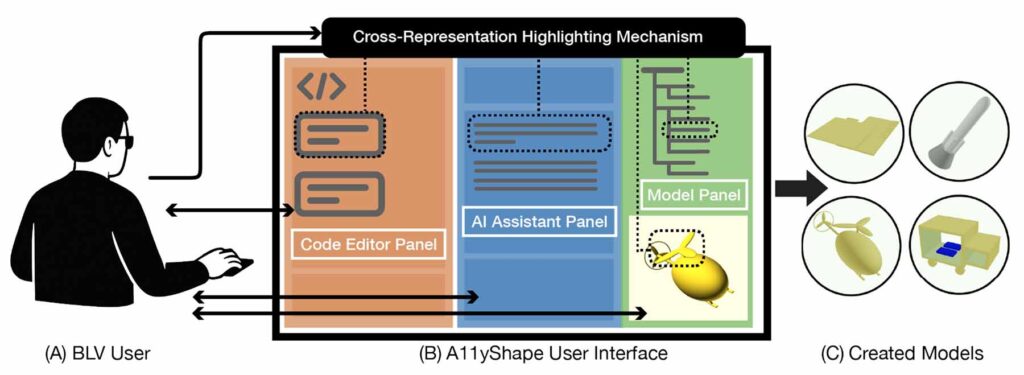

A11yShape bridges that gap by serving as the user’s “vision.” Each time a blind or low-vision user writes OpenSCAD code, A11yShape:

- Renders the 3D model from six viewpoints (top, bottom, left, right, front, and back) to give a complete visual overview of the object.

- Sends both the code and these rendered views to GPT-4o. Because GPT-4o can process text and images together, it produces plain-language descriptions of each component's size, shape, and position (“A tall narrow cylinder stands upright in the center attached to a cube at its base”), answers questions (“What is the width of the base?”), and suggests code changes.

- Keeps every representation in sync. With a technique called “cross-representation highlighting,” when a user selects a part such as “wing” in the outline (using a keyboard or screen reader), A11yShape simultaneously highlights that part's code, its description, and its location in the rendering. The system also logs every change, both user edits and AI-suggested modifications, in separate records so users can revert to or compare earlier versions.
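The rendering and prompting steps in the list above could look roughly like the following sketch. It is an assumption, not A11yShape's published code: it builds OpenSCAD command lines that render a `.scad` file to PNG from six camera directions (using the CLI's `-o`, `--camera`, `--viewall`, `--autocenter`, and `--imgsize` options), then packages the code and images into one multimodal user message in the shape used by OpenAI's Chat Completions API. The file names, camera distance, image size, and prompt wording are hypothetical, and the code only builds the commands and payload; it neither runs OpenSCAD nor calls the API.

```python
import base64

# Hypothetical choice: unit direction from the model's centre towards the camera.
VIEWS = {
    "top": (0, 0, 1),
    "bottom": (0, 0, -1),
    "left": (-1, 0, 0),
    "right": (1, 0, 0),
    "front": (0, -1, 0),
    "back": (0, 1, 0),
}


def render_commands(scad_path: str, distance: float = 100.0) -> dict[str, list[str]]:
    """Build (but do not execute) one OpenSCAD CLI invocation per view."""
    cmds = {}
    for name, (x, y, z) in VIEWS.items():
        eye = ",".join(f"{c * distance:g}" for c in (x, y, z))
        cmds[name] = [
            "openscad", "-o", f"view_{name}.png",
            f"--camera={eye},0,0,0",  # eye x,y,z followed by look-at centre x,y,z
            "--viewall", "--autocenter", "--imgsize=800,600",
            scad_path,
        ]
    return cmds


def build_message(code: str, images: dict[str, bytes], question: str) -> dict:
    """Pack the code, the rendered views, and a question into one request body."""
    content = [{"type": "text", "text": f"OpenSCAD code:\n{code}\n\n{question}"}]
    for name, png in images.items():
        content.append({"type": "text", "text": f"{name} view:"})
        content.append({
            "type": "image_url",
            "image_url": {"url": "data:image/png;base64," + base64.b64encode(png).decode("ascii")},
        })
    return {"model": "gpt-4o", "messages": [{"role": "user", "content": content}]}


if __name__ == "__main__":
    print(" ".join(render_commands("model.scad")["top"]))
```

Labelling each image with its view name in a text part just before it is one simple way to let the model tie its description back to a specific viewpoint; the actual prompt design A11yShape uses may differ.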

Together, these features give blind and low-vision programmers four linked ways to understand a model: its code, an AI-generated description, a semantic hierarchy, and the visual rendering. With them, users can build and modify designs on their own.
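One way to picture cross-representation highlighting is as a shared index keyed by part. The sketch below is hypothetical: the `Part` fields, the `find` and `select` helpers, and the example plane model are invented for illustration and are not taken from A11yShape. Each node in the semantic hierarchy stores the line range of its code, its description, and its location in a rendered view, so selecting a node by name yields every linked representation at once.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Part:
    """One node in the semantic hierarchy, linked to its other representations."""
    name: str
    code_lines: tuple[int, int]            # first/last line in the .scad source, 1-based, inclusive
    description: str                       # plain-language description of the part
    screen_box: tuple[int, int, int, int]  # x, y, width, height in a rendered view
    children: list["Part"] = field(default_factory=list)


def find(node: Part, name: str) -> Optional[Part]:
    """Depth-first search of the hierarchy for a part by name."""
    if node.name == name:
        return node
    for child in node.children:
        hit = find(child, name)
        if hit is not None:
            return hit
    return None


def select(root: Part, source: list[str], name: str) -> dict:
    """Collect everything to highlight when `name` is selected in the outline."""
    part = find(root, name)
    if part is None:
        raise KeyError(name)
    first, last = part.code_lines
    return {
        "code": source[first - 1:last],  # slice the part's lines out of the source
        "description": part.description,
        "render_box": part.screen_box,
    }


# Invented example model: a plane body with one wing.
SOURCE = [
    "// body",
    "cube([40, 10, 10]);",
    "// wing",
    "translate([15, -30, 8]) cube([10, 30, 2]);",
]
PLANE = Part("plane", (1, 4), "A small plane.", (0, 0, 400, 200), [
    Part("body", (1, 2), "A long box lying along the x-axis.", (0, 80, 400, 40)),
    Part("wing", (3, 4), "A thin flat plate extending from the side of the body.", (150, 20, 100, 60)),
])

if __name__ == "__main__":
    print(select(PLANE, SOURCE, "wing"))
```

A screen reader front end would then read the description, move the editor cursor to the selected code lines, and mark the render region, all from a single lookup.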

To evaluate A11yShape, the team ran a multi-session study with four blind or low-vision programmers, none of whom had prior 3D modeling experience. After an introductory tutorial, each participant used the system over three sessions to create 12 models, including a Tanghulu skewer, robots, a rocket, and a helicopter.

All four participants completed both guided and independent 3D modeling tasks with A11yShape. They gave the system an average System Usability Scale (SUS) score of 80.6, well above the commonly cited benchmark average of 68. One participant stated, “I had never modeled before and didn’t think I could. … It offered us (the BLV community) a new viewpoint on 3D modeling, illustrating that we can indeed construct relatively simple structures.”
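For readers unfamiliar with the System Usability Scale: it is a standard ten-item questionnaire answered on a 1 to 5 scale and scored with a fixed formula. Odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the 0 to 40 sum is multiplied by 2.5 to give a 0 to 100 score. The sketch below implements that standard formula; the function names are illustrative, and any example responses are invented rather than data from the study.

```python
def sus_score(responses: list[int]) -> float:
    """Standard SUS scoring for one respondent's ten answers (each 1 to 5)."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each between 1 and 5")
    total = 0
    for i, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered negatively.
        total += (r - 1) if i % 2 == 1 else (5 - r)
    return total * 2.5  # scale the 0-40 raw sum to 0-100


def mean_sus(all_responses: list[list[int]]) -> float:
    """Group-level score: the mean of the per-respondent scores."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Invented responses: one enthusiastic and one neutral respondent.
    print(mean_sus([[5, 1] * 5, [3] * 10]))
```

Note that a SUS score is not a percentage: a neutral answer to every item yields 50, which is why a mean around 80 is read as good usability.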

The study revealed varied workflows. Some participants wrote most of their code themselves and used the AI mainly for descriptions. Others relied on the AI to generate an initial model and then refined it by hand. All four used the version history and hierarchical navigation to fix errors and locate parts of their models.
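The version history participants relied on can be sketched as two append-only logs, one for the user's own edits and one for AI-suggested changes, with revert and compare operations on top. This is a hypothetical design: the `Version` and `History` classes and their methods are invented for illustration and do not describe A11yShape's implementation. The comparison uses Python's standard `difflib.unified_diff`.

```python
import difflib
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    seq: int     # global order across both logs
    author: str  # "user" or "ai"
    code: str


class History:
    """Separate logs for user edits and AI edits, sharing one sequence counter."""

    def __init__(self, initial_code: str = ""):
        self.current = initial_code
        self.logs: dict[str, list[Version]] = {"user": [], "ai": []}
        self._next = 1

    def commit(self, author: str, code: str) -> Version:
        """Record a new version in the author's own log and make it current."""
        v = Version(self._next, author, code)
        self._next += 1
        self.logs[author].append(v)  # KeyError for an unknown author
        self.current = code
        return v

    def get(self, seq: int) -> Version:
        for log in self.logs.values():
            for v in log:
                if v.seq == seq:
                    return v
        raise KeyError(seq)

    def revert(self, seq: int) -> Version:
        """Restore an earlier version; the revert itself is logged as a user edit."""
        return self.commit("user", self.get(seq).code)

    def compare(self, a: int, b: int) -> str:
        """Unified diff between two versions, labelled with who made each one."""
        old, new = self.get(a), self.get(b)
        return "".join(difflib.unified_diff(
            old.code.splitlines(keepends=True),
            new.code.splitlines(keepends=True),
            fromfile=f"v{a} ({old.author})",
            tofile=f"v{b} ({new.author})",
        ))


if __name__ == "__main__":
    h = History()
    v1 = h.commit("user", "cube(10);\n")
    v2 = h.commit("ai", "cube(10);\ntranslate([0, 0, 10]) cylinder(h=20, d=5);\n")
    print(h.compare(v1.seq, v2.seq))
    h.revert(v1.seq)
```

Logging a revert as a new entry, rather than deleting later versions, keeps the AI's suggestion available for comparison even after the user rejects it.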

Challenges remained, however. Long textual descriptions sometimes caused cognitive overload. Without tactile feedback, participants also struggled to judge precise spatial relationships, which led to occasional misalignments such as propellers floating above a helicopter’s body.

Despite these limitations, the researchers say A11yShape is a significant step forward for accessible creative tools. By linking code, descriptions, structure, and rendering, the system lets blind and low-vision users independently create and modify artifacts that previously required sighted assistance.

Future versions may offer more concise AI descriptions, code autocompletion, and integration with tactile displays or 3D printing to provide physical feedback.

“Our ambition for A11yShape is to unlock a pathway for blind and low-vision creators to venture into a realm of creative pursuits, such as 3D modeling, and to transform what once appeared unattainable into reality,” explained Liang He, a researcher at the University of Texas at Dallas.

“We’re merely at the outset,” Guo stated. “Our aspiration is that this method will not only render 3D modeling more accessible but also ignite similar innovations across other creative fields.”
