Drone shows are an increasingly popular way to stage large-scale light displays. These shows feature hundreds to thousands of airborne robots, each programmed to fly a specific path that, together with the others, traces out intricate shapes and patterns in the sky. When everything goes to plan, drone shows can be spectacular. But when one or more drones malfunction, as has happened recently in Florida, New York, and elsewhere, they can pose a serious hazard to spectators on the ground.

Drone show accidents highlight the challenge of maintaining safety in what engineers call “multiagent systems”: systems of many coordinated, collaborative, computer-controlled agents, such as robots, drones, and self-driving cars.

Now, a team of MIT engineers has developed a training method for multiagent systems that can guarantee their safe operation in crowded environments. The researchers found that once the method is used to train a small number of agents, the safety margins and controls those agents learn can automatically scale to any larger number of agents, in a way that keeps the system as a whole safe.

In real-world demonstrations, the team trained a small number of palm-sized drones to safely carry out different tasks, from switching positions midflight to landing on designated moving vehicles on the ground. In simulations, the researchers showed that the same programs, trained on just a few drones, could be copied and scaled up to thousands of drones, enabling a large system of agents to safely accomplish the same tasks.

“This could be a standard for any application that requires a team of agents, such as warehouse robots, search-and-rescue drones, and self-driving cars,” says Chuchu Fan, associate professor of aeronautics and astronautics at MIT. “It acts as a safety shield: each agent can carry on with its mission, and we tell it how to stay safe.”

Fan and her colleagues report on their new method in a study appearing this month in the journal IEEE Transactions on Robotics. The study’s co-authors are MIT graduate students Songyuan Zhang and Oswin So, as well as former MIT postdoc Kunal Garg, who is now an assistant professor at Arizona State University.

Safety Margins

When engineers design for safety in any multiagent system, they typically have to consider the potential path of every single agent with respect to every other agent in the system. This pairwise path planning is time-consuming and computationally expensive, and even then, safety is not guaranteed.
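To make the scaling concrete: a planner that checks every agent against every other agent must consider n(n-1)/2 pairs, so the work grows roughly with the square of the fleet size. This short Python sketch simply counts and enumerates those pairs; `pair_count` and `pairs` are illustrative helper names, not part of the researchers’ code.

```python
from itertools import combinations


def pair_count(n: int) -> int:
    """Number of unordered agent pairs a pairwise planner must check."""
    return n * (n - 1) // 2


def pairs(agent_ids):
    """List every unordered pair of agent IDs, as a pairwise planner would."""
    return list(combinations(agent_ids, 2))


for n in (8, 100, 1000):
    print(f"{n} agents -> {pair_count(n)} pairs")
```

For 8 drones that is 28 pairs; for 1,000 drones it is 499,500.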

“During a drone show, each drone is given a specific path, a set of waypoints and corresponding times, and then they essentially close their eyes and follow the plan,” explains Zhang, the study’s lead author. “Because they only know where they need to be and at what time, they can’t adapt if something unexpected happens.”

The MIT team instead set out to develop a way to train a small number of agents to maneuver safely, in a manner that could efficiently scale to any number of agents in the system. Rather than planning specific paths for individual agents, the method would enable agents to continually map their safety margins, or the boundaries beyond which they might be unsafe. An agent could then take any number of routes to complete its task, as long as it stays within its safety margins.

In some respects, the team suggests this technique resembles how humans instinctively navigate their environment.

“Imagine being in a crowded shopping center,” says So. “You don’t focus on people beyond those in your immediate vicinity, like the 5 meters around you, in terms of moving about safely and avoiding collisions. Our approach adopts a similar localized perspective.”

Safety Barrier

In their new study, the team presents their method, GCBF+, which stands for “graph control barrier function.” A barrier function is a mathematical tool used in robotics that defines a sort of safety barrier: a boundary beyond which an agent has a high probability of being unsafe. For any given agent, this safety zone can change from moment to moment as the agent moves through an environment filled with other moving agents.
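For reference, the standard single-system control barrier function (CBF) condition from the control literature is shown next. Here x is the state, u the control input, the dynamics are assumed to be control-affine (dx/dt = f(x) + g(x)u), h is the barrier function, and α is an extended class-K function (for example α(h) = c·h with c > 0). The first line says h may decrease, but never faster than α(h), so an agent that starts in the safe set C stays in it. The second line is our own illustrative notation, not the paper’s, for the graph idea: agent i’s barrier depends only on its own state and the states of its neighbors N_i within a sensing radius R.

$$
\mathcal{C} = \{\, x \mid h(x) \ge 0 \,\}, \qquad
\sup_{u \in \mathcal{U}} \; \nabla h(x)^{\top} \big( f(x) + g(x)\,u \big) \;\ge\; -\alpha\big(h(x)\big)
$$

$$
\mathcal{N}_i = \{\, j \ne i \mid \lVert p_j - p_i \rVert \le R \,\}, \qquad
h_i = h\big(x_i,\ \{x_j\}_{j \in \mathcal{N}_i}\big)
$$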

When designers calculate barrier functions for any one agent in a multiagent system, they typically have to account for the potential paths of, and interactions with, every other agent in the system. Instead, the MIT team’s method calculates the safety zones of just a handful of agents, in a way that is accurate enough to represent the dynamics of many more agents in the system.

“Then we can essentially duplicate this barrier function for every single agent, resulting in a network of safety zones that is applicable for any number of agents in the system,” So remarks.
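A minimal sketch of the “duplicate it for every agent” idea, assuming point agents in the plane, a hypothetical minimum separation `SAFE_RADIUS`, a hypothetical `SENSING_RADIUS`, and a hand-written distance-based barrier. GCBF+ obtains its barrier function through training, so this stand-in only illustrates the structure: because the function looks only at an agent’s own position and the neighbors it senses, the same code serves 3 agents or 3,000.

```python
import math

SAFE_RADIUS = 0.2     # hypothetical minimum allowed separation (m)
SENSING_RADIUS = 1.0  # hypothetical sensing range (m)


def local_barrier(own, neighbors):
    """Barrier shared by every agent: min over sensed neighbors of dist^2 - SAFE_RADIUS^2.

    Positive means every sensed neighbor is farther away than SAFE_RADIUS.
    With no neighbor in range, nothing constrains the agent, so return +inf.
    """
    return min(
        (math.dist(own, p) ** 2 - SAFE_RADIUS ** 2 for p in neighbors),
        default=math.inf,
    )


def barrier_for_team(positions):
    """Evaluate the same local_barrier for each agent, using only agents it can sense.

    The brute-force neighbor search is only for brevity; the barrier itself never
    looks beyond SENSING_RADIUS, which is what lets it be reused for any team size.
    """
    values = []
    for i, p in enumerate(positions):
        sensed = [q for j, q in enumerate(positions)
                  if j != i and math.dist(p, q) <= SENSING_RADIUS]
        values.append(local_barrier(p, sensed))
    return values


print(barrier_for_team([(0.0, 0.0), (0.5, 0.0), (5.0, 5.0)]))
```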

To compute an agent’s barrier function, the team’s method first takes into account the agent’s “sensing radius,” or how much of its surroundings it can observe, depending on its sensor capabilities. Just as in the shopping-center analogy, the researchers assume that each agent only needs to account for the agents within its own sensing radius when it comes to staying safe and avoiding collisions.
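The sensing-radius idea amounts to building a neighbor graph: agents are nodes, and an edge joins two agents whenever they are within range of each other. This sketch assumes 2D positions and uses a 5 m radius to echo So’s shopping-center example. It buckets agents into a grid of square cells so that each agent is compared only with agents in its own and adjacent cells, which is one standard way to avoid checking all pairs. `sensing_graph` is a hypothetical helper, not the team’s code.

```python
import math
from collections import defaultdict


def sensing_graph(positions, radius):
    """Return {agent index: sorted neighbor indices within `radius`}.

    Agents are bucketed into square cells of side `radius`. Any two agents
    within `radius` of each other land in the same or adjacent cells, so each
    agent only needs to check its own cell and the 8 cells around it.
    """
    def cell(p):
        return (math.floor(p[0] / radius), math.floor(p[1] / radius))

    cells = defaultdict(list)
    for i, p in enumerate(positions):
        cells[cell(p)].append(i)

    graph = {}
    for i, p in enumerate(positions):
        cx, cy = cell(p)
        nbrs = []
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for j in cells.get((cx + dx, cy + dy), []):
                    if j != i and math.dist(p, positions[j]) <= radius:
                        nbrs.append(j)
        graph[i] = sorted(nbrs)
    return graph


print(sensing_graph([(0.0, 0.0), (3.0, 0.0), (0.0, 4.0), (10.0, 10.0)], radius=5.0))
```

The barrier evaluation for agent i then needs only the agents listed in `graph[i]`, however large the full team is.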

Next, using computer models that capture an agent’s particular mechanical capabilities and limits, the team simulates a “controller,” or a set of instructions for how the agent and a handful of similar agents should move around. They then run simulations of multiple agents moving along certain trajectories and record whether and how they collide or otherwise interact.
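The rollout-and-record step might look like this toy, which assumes single-integrator point agents (the velocity command directly sets each agent’s motion) and a naive straight-to-goal controller. The helpers `go_to_goal` and `rollout` and all constants are hypothetical. Two agents swapping places head-on under this controller fly straight through each other, and the rollout logs every step at which a pair came closer than the safe radius.

```python
import math


def go_to_goal(pos, goal, max_speed=1.0):
    """Hypothetical nominal controller: head straight for the goal, speed-capped."""
    dx, dy = goal[0] - pos[0], goal[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        return (0.0, 0.0)
    speed = min(max_speed, dist)  # slow down when close to the goal
    return (speed * dx / dist, speed * dy / dist)


def rollout(starts, goals, controller, steps=100, dt=0.05, safe_radius=0.2):
    """Simulate the agents and log (step, i, j) whenever agents i and j get too close."""
    positions = [tuple(p) for p in starts]
    violations = []
    for k in range(steps):
        velocities = [controller(p, g) for p, g in zip(positions, goals)]
        positions = [(p[0] + dt * v[0], p[1] + dt * v[1])
                     for p, v in zip(positions, velocities)]
        for i in range(len(positions)):
            for j in range(i + 1, len(positions)):
                if math.dist(positions[i], positions[j]) < safe_radius:
                    violations.append((k, i, j))
    return positions, violations


final, violations = rollout([(-1.0, 0.0), (1.0, 0.0)], [(1.0, 0.0), (-1.0, 0.0)], go_to_goal)
print(f"{len(violations)} unsafe steps logged")
```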

“Once we have these trajectories, we can compute some losses we want to minimize, such as how many safety violations the current controller produces,” says Zhang. “Then we update the controller to be safer.”
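Zhang’s description of computing losses from recorded trajectories can be sketched as follows. A raw count of safety violations is not differentiable, so learned-barrier methods commonly train on hinge-style surrogates that penalize (1) a negative barrier value at a known-safe state, (2) a non-negative value at a known-unsafe state, and (3) a barrier that decays faster than allowed along a trajectory. The function names, the margin, and the sample numbers are illustrative assumptions, not the paper’s exact loss.

```python
def hinge(x: float) -> float:
    """max(0, x): zero when a condition holds with margin, growing linearly otherwise."""
    return max(0.0, x)


def barrier_losses(h_safe, h_unsafe, decay_terms, margin=0.01):
    """Illustrative hinge-style training losses for a learned barrier function.

    h_safe:      barrier values at states known to be safe (want h >= margin)
    h_unsafe:    barrier values at known-unsafe states, e.g. collisions (want h <= -margin)
    decay_terms: values of dh/dt + alpha * h along rollouts (want >= margin)
    Returns (mean hinge loss, count of samples that outright violate their condition).
    """
    terms = ([hinge(margin - h) for h in h_safe]
             + [hinge(margin + h) for h in h_unsafe]
             + [hinge(margin - c) for c in decay_terms])
    violations = (sum(h < 0 for h in h_safe)
                  + sum(h >= 0 for h in h_unsafe)
                  + sum(c < 0 for c in decay_terms))
    return sum(terms) / max(len(terms), 1), violations


loss, violations = barrier_losses([0.5, -0.1], [-0.3, 0.2], [0.4, -0.05])
print(loss, violations)
```

In a full training loop the barrier and controller would be learned models, and an automatic-differentiation framework would push these penalties back into their parameters; plain numbers are used here so the bookkeeping stays visible.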

In this way, a controller can be loaded onto actual agents, enabling them to continually map their safety zones based on any other agents they can sense in their immediate surroundings, and then move within that safe zone to accomplish their task.

“Our controller is responsive,” Fan explains. “We don’t plan a path ahead of time. Our controller is always collecting information about where the agent is going, how fast it is moving, and how fast the other drones are moving. It uses all this information to generate a plan on the fly and keeps replanning as it goes. So if conditions change, it can adapt to stay safe.”
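The responsive behavior Fan describes can be illustrated with a classic, hand-written CBF safety filter for two point agents that are given only their destinations. This is a toy stand-in rather than the trained GCBF+ controller: the single-integrator dynamics, the constants, and the names `nominal`, `filter_velocity`, and `simulate` are all assumptions for illustration. At every step, each agent re-reads the other’s current position and minimally corrects its goal-seeking velocity so that the barrier h = |p - q|^2 - r^2 cannot shrink faster than ALPHA * h. With ALPHA * DT < 1, this keeps h positive even in discrete time.

```python
import math

SAFE_RADIUS = 0.3  # hypothetical minimum separation (m)
ALPHA = 2.0        # hypothetical barrier decay rate; ALPHA * DT < 1 keeps discrete steps safe
DT = 0.05          # control period (s)


def nominal(pos, goal, max_speed=1.0):
    """Goal-seeking velocity: the agent is given only its destination, not a path."""
    dx, dy = goal[0] - pos[0], goal[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        return (0.0, 0.0)
    speed = min(max_speed, dist)
    return (speed * dx / dist, speed * dy / dist)


def filter_velocity(pos, other, u_nom):
    """Smallest change to u_nom that satisfies this agent's half of the barrier condition.

    Barrier h = |p - q|^2 - SAFE_RADIUS^2. Each agent enforces
        2 (p - q) . u >= -ALPHA * h / 2,
    so the two agents together satisfy dh/dt >= -ALPHA * h.
    The closed form is the Euclidean projection of u_nom onto that half-plane.
    """
    d = (pos[0] - other[0], pos[1] - other[1])
    h = d[0] ** 2 + d[1] ** 2 - SAFE_RADIUS ** 2
    a = (2.0 * d[0], 2.0 * d[1])
    b = -ALPHA * h / 2.0
    slack = a[0] * u_nom[0] + a[1] * u_nom[1] - b
    if slack >= 0:
        return u_nom  # nominal command already satisfies the constraint
    norm_sq = a[0] ** 2 + a[1] ** 2
    if norm_sq == 0.0:
        return (0.0, 0.0)  # agents coincide; no usable direction
    lam = -slack / norm_sq
    return (u_nom[0] + lam * a[0], u_nom[1] + lam * a[1])


def simulate(starts, goals, steps=300):
    """Each step, both agents re-sense each other and re-filter; nothing is preplanned."""
    pa, pb = starts
    min_sep = math.dist(pa, pb)
    for _ in range(steps):
        # Both commands are computed from the same snapshot before anyone moves.
        ua = filter_velocity(pa, pb, nominal(pa, goals[0]))
        ub = filter_velocity(pb, pa, nominal(pb, goals[1]))
        pa = (pa[0] + DT * ua[0], pa[1] + DT * ua[1])
        pb = (pb[0] + DT * ub[0], pb[1] + DT * ub[1])
        min_sep = min(min_sep, math.dist(pa, pb))
    return pa, pb, min_sep


pa, pb, min_sep = simulate(((-1.0, 0.05), (1.0, -0.05)), ((1.0, 0.05), (-1.0, -0.05)))
print(f"closest approach: {min_sep:.3f} m (limit {SAFE_RADIUS} m)")
```

Splitting each pairwise constraint evenly between the two agents is one common convention. In a perfectly head-on swap this simple filter keeps the agents apart but leaves them stalled facing each other, a known weakness of basic CBF filters. With many neighbors, each agent would carry one constraint per sensed neighbor and would typically solve a small quadratic program instead of using this single-constraint closed form.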

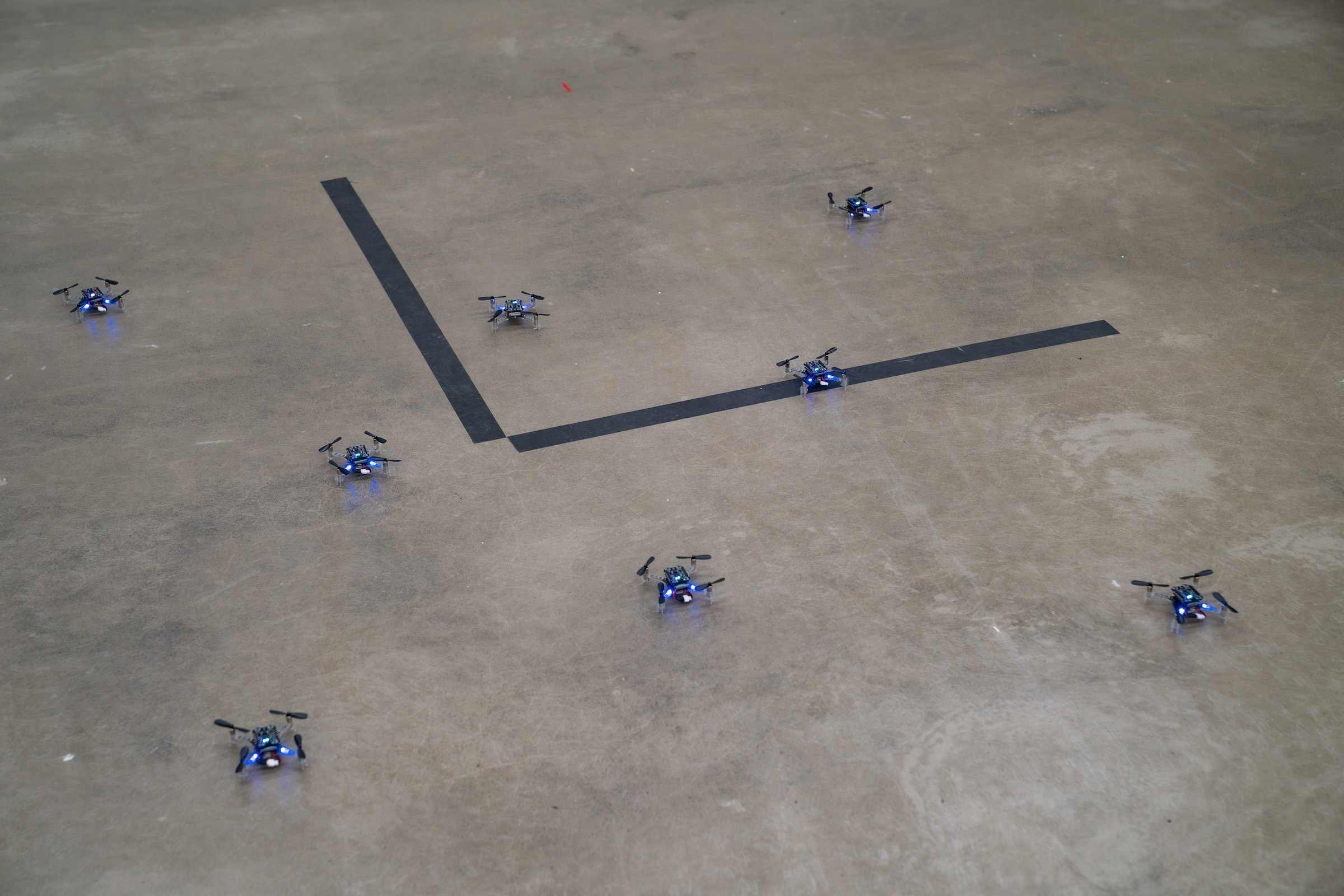

The team demonstrated GCBF+ on a system of eight Crazyflies (lightweight, palm-sized quadrotor drones) that they tasked with flying and switching positions in midair. If the drones had taken the most direct routes, they would inevitably have collided. After training with the team’s method, however, the drones made real-time adjustments to maneuver around one another, staying within their respective safety zones, and successfully switched positions in flight.

In similar fashion, the team tasked the drones with flying around and then landing on specific Turtlebots, wheeled robots with dome-like tops. The Turtlebots drove continuously around a large circle, and the Crazyflies avoided colliding with one another as they made their landings.

“Using our framework, we only need to give the drones their destinations instead of the whole collision-free path, and the drones can figure out for themselves how to reach their destinations without colliding,” says Fan, who envisions that the method could be applied to any multiagent system to ensure its safety, including collision-avoidance systems in drone shows, warehouse automation, autonomous driving, and drone delivery services.

This research was partially financed by the U.S. National Science Foundation, MIT Lincoln Laboratory through the Safety in Aerobatic Flight Regimes (SAFR) initiative, and the Defence Science and Technology Agency of Singapore.