“`html

Imagine an individual taking their French Bulldog, Bowser, to the canine park. Recognizing Bowser while he frolics with the other dogs is straightforward for the pet owner during their visit.

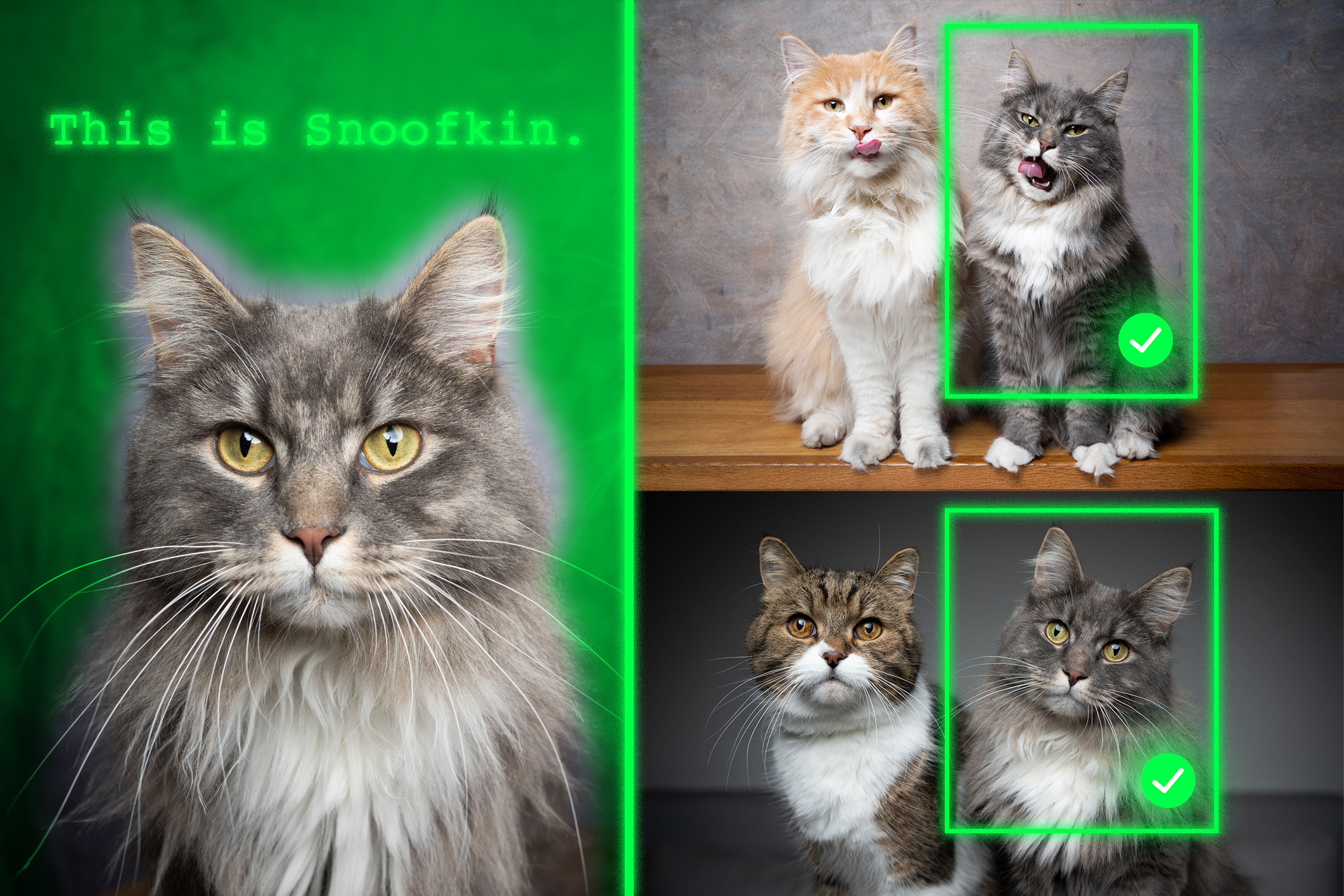

However, if a person wishes to utilize a generative AI model like GPT-5 to supervise their animal while they are at work, the model might struggle with this fundamental task. Vision-language models such as GPT-5 can often excel at identifying general items like a dog, but they tend to falter in pinpointing specific entities, such as Bowser the French Bulldog.

To overcome this limitation, researchers from MIT, the MIT-IBM Watson AI Lab, the Weizmann Institute of Science, and other institutions have developed a new training approach that instructs vision-language models to locate specific objects within a scene.

Their technique employs meticulously organized video-tracking data where the same object is followed across several frames. They constructed the dataset so the model must concentrate on contextual hints to recognize the specific object instead of depending solely on previously learned information.

When presented with several example images portraying a distinct object, like someone’s pet, the re-trained model is more proficient at pinpointing the location of that same pet in a fresh image.

Models re-trained using their approach surpassed leading systems in this task. Significantly, their method preserves the broader capabilities of the model.

This innovative strategy could assist future AI systems in tracking particular objects over time, like a child’s backpack, or in identifying items of interest, such as a specific animal species in ecological assessments. It could also support the creation of AI-driven assistive technologies that aid visually impaired individuals in locating certain objects in a space.

“Ultimately, our goal is for these models to learn from context, akin to human beings. If a model can achieve this effectively, instead of retraining it for each new assignment, we can simply provide a few examples and it will deduce how to accomplish the task based on that context. This is an exceptionally powerful capability,” states Jehanzeb Mirza, an MIT postdoctoral researcher and the lead author of a publication on this methodology.

Mirza is joined by co-lead authors Sivan Doveh, a postdoc at Stanford University who was a graduate student at the Weizmann Institute of Science during this research; Nimrod Shabtay, a researcher at IBM Research; James Glass, a senior research scientist and head of the Spoken Language Systems Group in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL); and others. This work will be showcased at the International Conference on Computer Vision.

An unforeseen limitation

Researchers have discovered that large language models (LLMs) can thrive at contextual learning. If they provide an LLM with a few examples of a task, such as addition problems, it can learn to resolve new addition problems based on the context given.

A vision-language model (VLM) is essentially an LLM with an integrated visual component, so the MIT researchers believed it would inherit the LLM’s contextual learning abilities. However, this is not the reality.

“The research community has yet to find a clear-cut answer to this specific dilemma. The bottleneck may stem from the fact that some visual data is lost during the integration of the two components, but we simply do not know,” Mirza explains.

The investigators aimed to enhance VLMs’ capacity for in-context localization, which involves identifying a particular object in a new image. They concentrated on the data used to refine existing VLMs for new tasks, a method known as fine-tuning.

Standard fine-tuning data are collected from diverse sources and depict assortments of everyday objects. One image may display cars parked along a street, while another features a bouquet of flowers.

“There is no real consistency in this data, hence the model never learns to identify the same object across various images,” he mentions.

To rectify this issue, the researchers created a novel dataset by curating samples from existing video-tracking data. This data comprises video clips showcasing the same object moving through a scene, like a tiger traversing a grassland.

They extracted frames from these videos and organized the dataset so each input consists of multiple images displaying the same object in various contexts, accompanied by example questions and answers concerning its position.

“By utilizing several images of the same object in different contexts, we prompt the model to consistently localize that object of interest by concentrating on the context,” Mirza clarifies.

Concentrating the attention

However, the researchers discovered that VLMs have a tendency to circumvent the issue. Rather than responding based on contextual hints, they often identify the object using information acquired during pretraining.

For example, since the model has already learned that an image of a tiger and the label “tiger” are associated, it could identify the tiger crossing the grassland based on this pre-trained knowledge, rather than deducing from context.

To address this challenge, the researchers used pseudo-names instead of actual category names for objects in the dataset. In this instance, they altered the name of the tiger to “Charlie.”

“It took us some time to determine how to prevent the model from circumventing the issue. However, we changed the game for the model. The model does not realize that ‘Charlie’ could refer to a tiger, so it is compelled to focus on the context,” he notes.

The researchers also encountered obstacles in discovering the optimal method for preparing the data. If the frames are too proximate, the background might not change sufficiently to offer data variety.

Ultimately, fine-tuning VLMs with this new dataset enhanced accuracy for personalized localization by approximately 12 percent on average. When they incorporated the dataset with pseudo-names, the performance improvements reached 21 percent.

As model size increases, their approach yields even greater performance enhancements.

In the future, the researchers aim to investigate potential reasons why VLMs do not inherit in-context learning capabilities from their foundational LLMs. Additionally, they plan to explore further mechanisms to bolster the performance of a VLM without needing to retrain it with new information.

“This research redefines few-shot personalized object localization — adjusting on demand to the same object across new scenes — as an instruction-tuning challenge and employs video-tracking sequences to educate VLMs to localize based on visual context rather than class priors. It also introduces the first benchmark for this setting with significant gains across both open and proprietary VLMs. Given the considerable importance of rapid, instance-specific grounding — often without fine-tuning — for users in real-world applications (including robotics, augmented reality assistants, creative tools, etc.), the practical, data-centric methodology provided by this research can facilitate the broader adoption of vision-language foundation models,” states Saurav Jha, a postdoc at the Mila-Quebec Artificial Intelligence Institute, who was not involved in this research.

Additional co-authors include Wei Lin, a research associate at Johannes Kepler University; Eli Schwartz, a research scientist at IBM Research; Hilde Kuehne, a computer science professor at Tuebingen AI Center and an affiliated professor at the MIT-IBM Watson AI Lab; Raja Giryes, an associate professor at Tel Aviv University; Rogerio Feris, a principal scientist and manager at the MIT-IBM Watson AI Lab; Leonid Karlinsky, a principal research scientist at IBM Research; Assaf Arbelle, a senior research scientist at IBM Research; and Shimon Ullman, the Samy and Ruth Cohn Professor of Computer Science at the Weizmann Institute of Science.

This investigation was partially funded by the MIT-IBM Watson AI Lab.

“`