When visual data reaches the brain, it navigates through two pathways that address distinct elements of the input. For many years, researchers have theorized that one of these pathways, the ventral visual stream, is key in object recognition, potentially honed by evolution for that specific purpose.

In line with this notion, over the last ten years, MIT scientists have discovered that when computational frameworks of the ventral stream’s anatomy are fine-tuned for the object recognition task, they serve as impressive predictors of the neural responses in the ventral stream.

Nonetheless, in a recent investigation, MIT scholars have demonstrated that when they adapt these models for spatial tasks instead, the generated models are also strong predictors of the neural activities in the ventral stream. This indicates that the ventral stream may not be solely optimized for object recognition.

“This raises an intriguing question about what the ventral stream is actually optimized for. Many in our field lean towards the belief that the ventral stream is primarily for object recognition, but this study introduces a fresh viewpoint suggesting it could also be tailored for spatial tasks,” remarks MIT graduate student Yudi Xie.

Xie serves as the principal author of the research, which will be showcased at the International Conference on Learning Representations. The paper also includes contributions from Weichen Huang, a visiting student participating in MIT’s Research Summer Institute program; Esther Alter, a software developer at the MIT Quest for Intelligence; Jeremy Schwartz, a technical staff member in sponsored research; Joshua Tenenbaum, a professor in brain and cognitive sciences; and James DiCarlo, the Peter de Florez Professor of Brain and Cognitive Sciences, director of the Quest for Intelligence, and a member of the McGovern Institute for Brain Research at MIT.

Beyond Object Identification

When we observe an object, our visual system can not only recognize it but also discern other characteristics such as its position, its proximity to us, and its orientation in space. Since the early 1980s, neuroscientists have proposed that the primate visual system is partitioned into two pathways: the ventral stream, which handles object identification tasks, and the dorsal stream, which manages features related to spatial location.

Throughout the past decade, scientists have aimed to model the ventral stream utilizing a category of deep learning model referred to as a convolutional neural network (CNN). Researchers are able to instruct these models to execute object identification tasks by inputting datasets filled with thousands of images paired with category labels that describe the images.

The cutting-edge versions of these CNNs exhibit high success rates in categorizing images. Furthermore, researchers have discovered that the internal activations of these models bear striking similarities to the activities of neurons that process visual information within the ventral stream. Additionally, the closer these models resemble the ventral stream, the better their performance in object identification tasks. This has prompted many researchers to theorize that the primary role of the ventral stream is object recognition.

However, experimental research, notably a study conducted by the DiCarlo lab in 2016, revealed that the ventral stream appears to encode spatial attributes as well. These attributes encompass the size of the object, its orientation (how rotated it is), and its position within the visual field. Based on these findings, the MIT team sought to explore whether the ventral stream could have additional roles beyond mere object recognition.

“Our primary question in this project was whether we could conceptualize the ventral stream as being optimized for these spatial tasks rather than solely categorization tasks,” states Xie.

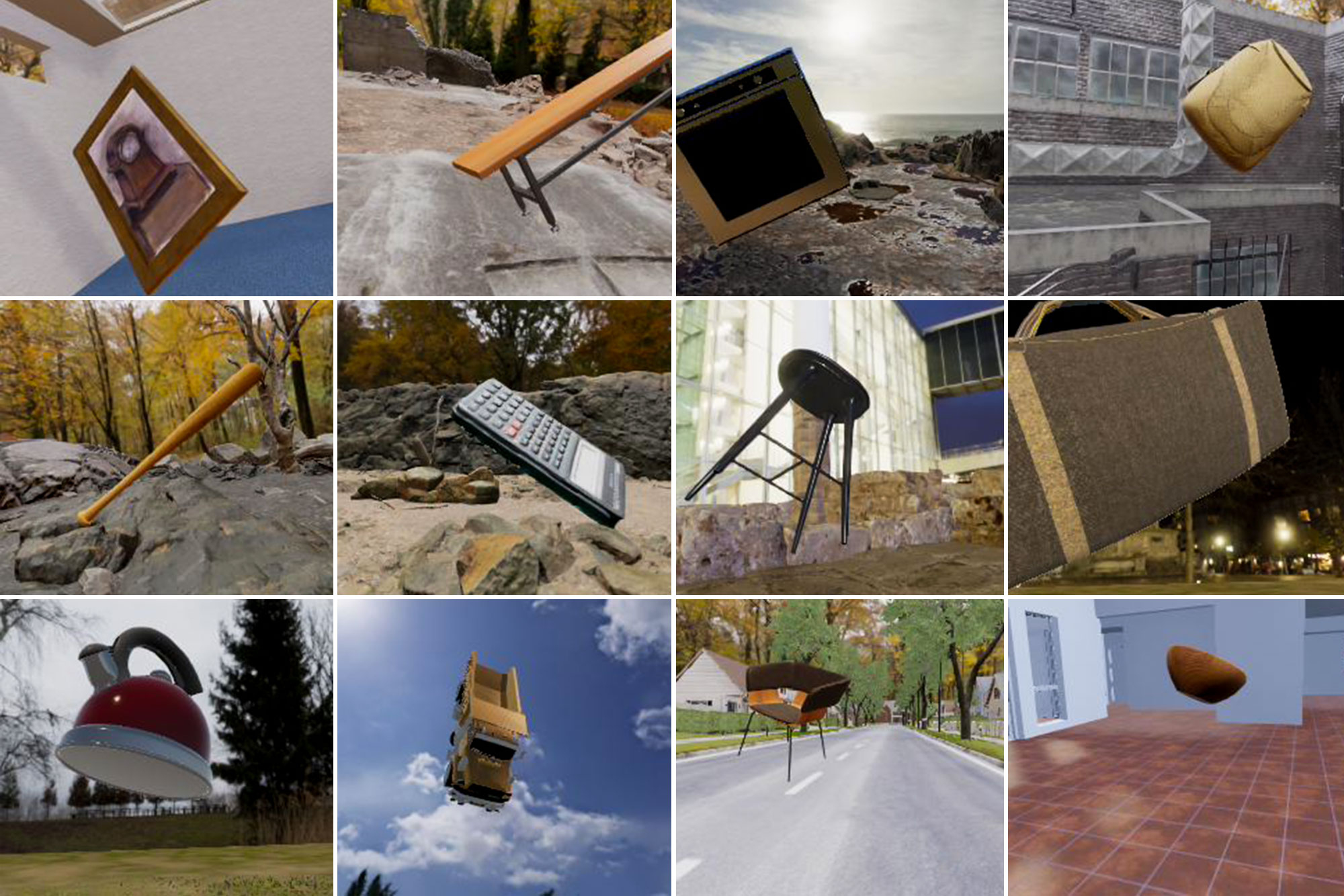

To evaluate this hypothesis, the researchers aimed to train a CNN to identify one or more spatial features of an object, including rotation, location, and distance. To educate the models, they developed a fresh dataset of synthetic images. These images depicted objects such as teapots or calculators overlaid on various backgrounds, placed in designated positions and orientations that were labeled to assist the model in learning.

The researchers found that CNNs trained on only one of these spatial features demonstrated a high level of “neuro-alignment” with the ventral stream — comparable to the levels observed in CNN models educated on object recognition.

The researchers assess neuro-alignment using a technique developed by DiCarlo’s lab, which involves asking the models, once trained, to project the neural activity that a particular image would elicit in the brain. The findings indicated that the more proficient the models were in the spatial tasks on which they had been trained, the higher their neuro-alignment.

“I believe we cannot presume that the ventral stream is engaged solely in object categorization, as many of these additional functions, such as spatial tasks, can also result in a strong correlation between models’ neuro-alignment and their effectiveness,” Xie explains. “Our conclusion indicates that you can optimize either by categorization or by executing these spatial tasks, and both approaches yield a ventral-stream-like model based on our existing metrics for evaluating neuro-alignment.”

Model Comparisons

The researchers subsequently examined why these two strategies — instructing for object recognition and for spatial features — resulted in comparable levels of neuro-alignment. They employed an analysis known as centered kernel alignment (CKA), which enables them to gauge the extent of similarity between representations in distinct CNNs. This analysis revealed that in the initial to mid-layers of the models, the representations acquired are nearly indistinguishable.

“In these early layers, essentially, you cannot differentiate these models simply by observing their representations,” Xie states. “It appears they derive a very similar or unified representation in the early to middle layers, after which they diverge to accommodate various tasks.”

The researchers theorize that even when models are trained to analyze a single feature, they may also account for “non-target” features — those they are not specifically trained on. When objects exhibit greater variability in non-target features, the models tend to develop representations that are more akin to those formed by models trained on other tasks. This suggests that the models are utilizing all the information at their disposal, potentially causing different models to produce similar representations, the researchers imply.

“Greater non-target variability actually assists the model in acquiring a better representation, rather than creating a representation that overlooks them,” Xie suggests. “It’s plausible that the models, even while focused on one target, are concurrently learning other aspects due to the variability of these non-target features.”

In upcoming research, the scholars aspire to devise new methods for comparing various models, aiming to enhance understanding of how each model creates internal representations of objects influenced by variations in training tasks and datasets.

“There may still be subtle distinctions between these models, even though our current approach to measuring how closely these models align with the brain indicates they operate on a similar level. This implies that there is still work needed to refine our comparisons of the model to the brain, allowing us to gain clearer insights into what the ventral stream is truly optimized for,” Xie concludes.

The research received funding from the Semiconductor Research Corporation and the U.S. Defense Advanced Research Projects Agency.