AI image creation — which utilizes neural networks to produce new visuals from various inputs, including textual prompts — is anticipated to evolve into a billion-dollar sector by the conclusion of this decade. Even with current technology, if you wished to craft a whimsical depiction of, for example, a friend erecting a flag on Mars or recklessly soaring into a black hole, it could be accomplished in under a second. However, prior to executing such tasks, image generators are typically trained on extensive datasets comprising millions of visuals that are frequently accompanied by corresponding text. Training these generative models can be a demanding task that spans weeks or months, consuming significant computational resources in the process.

But what if it were achievable to create images through AI techniques without employing a generator at all? That tangible possibility, along with other captivating concepts, was detailed in a research study presented at the International Conference on Machine Learning (ICML 2025), which took place in Vancouver, British Columbia, earlier this summer. The paper, outlining innovative methods for altering and generating images, was authored by Lukas Lao Beyer, a graduate student researcher in MIT’s Laboratory for Information and Decision Systems (LIDS); Tianhong Li, a postdoctoral researcher at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL); Xinlei Chen from Facebook AI Research; Sertac Karaman, an MIT professor of aeronautics and astronautics and director of LIDS; and Kaiming He, an MIT associate professor of electrical engineering and computer science.

This collaborative endeavor originated from a class project for a graduate seminar on deep generative models that Lao Beyer participated in last fall. Through discussions during that semester, it became evident to both Lao Beyer and He, who instructed the seminar, that this research possessed genuine potential that extended far beyond the limitations of a conventional homework task. Additional collaborators were soon integrated into the project.

The foundation of Lao Beyer’s inquiry was a June 2024 paper, authored by researchers from the Technical University of Munich and the Chinese company ByteDance, which introduced a novel method of representing visual data known as a one-dimensional tokenizer. Utilizing this tool, which also functions as a type of neural network, a 256×256-pixel image can be converted into a sequence of merely 32 numbers, referred to as tokens. “I aimed to grasp how such a high level of compression could be realized, and what the tokens themselves truly represented,” states Lao Beyer.

The prior generation of tokenizers would typically segment the same image into an array of 16×16 tokens — with each token encapsulating data, in a highly condensed form, that correlates to a specific section of the original image. The new 1D tokenizers can encode an image more effectively, using considerably fewer tokens overall, and these tokens are capable of encapsulating information about the entire image, not just a singular quadrant. Additionally, each token is a 12-digit number made up of 1s and 0s, allowing for 212 (or approximately 4,000) possibilities in total. “It’s akin to a vocabulary of 4,000 words that forms an abstract, hidden language spoken by the computer,” He elaborates. “It’s not comparable to human language, but we can still attempt to decipher its meaning.”

That’s precisely what Lao Beyer had originally aimed to investigate — work that provided the foundation for the ICML 2025 paper. The method he adopted was quite straightforward. If you wish to determine the function of a specific token, Lao Beyer asserts, “you can simply remove it, insert a random value, and observe if there is a recognizable alteration in the output.” Substituting one token, he discovered, modifies the image quality, transforming a low-resolution image to high resolution or vice versa. Another token influenced the background’s blur, while yet another affected brightness. He also identified a token associated with the “pose,” meaning that, in the image of a robin, for instance, the bird’s head could shift from right to left.

“This was an unprecedented result, as no one had previously observed visually distinguishable changes from manipulating tokens,” Lao Beyer states. The finding raised the possibility of a fresh approach to image editing. Moreover, the MIT collective has demonstrated how this process can be streamlined and automated, eliminating the need for manual token modifications, one at a time.

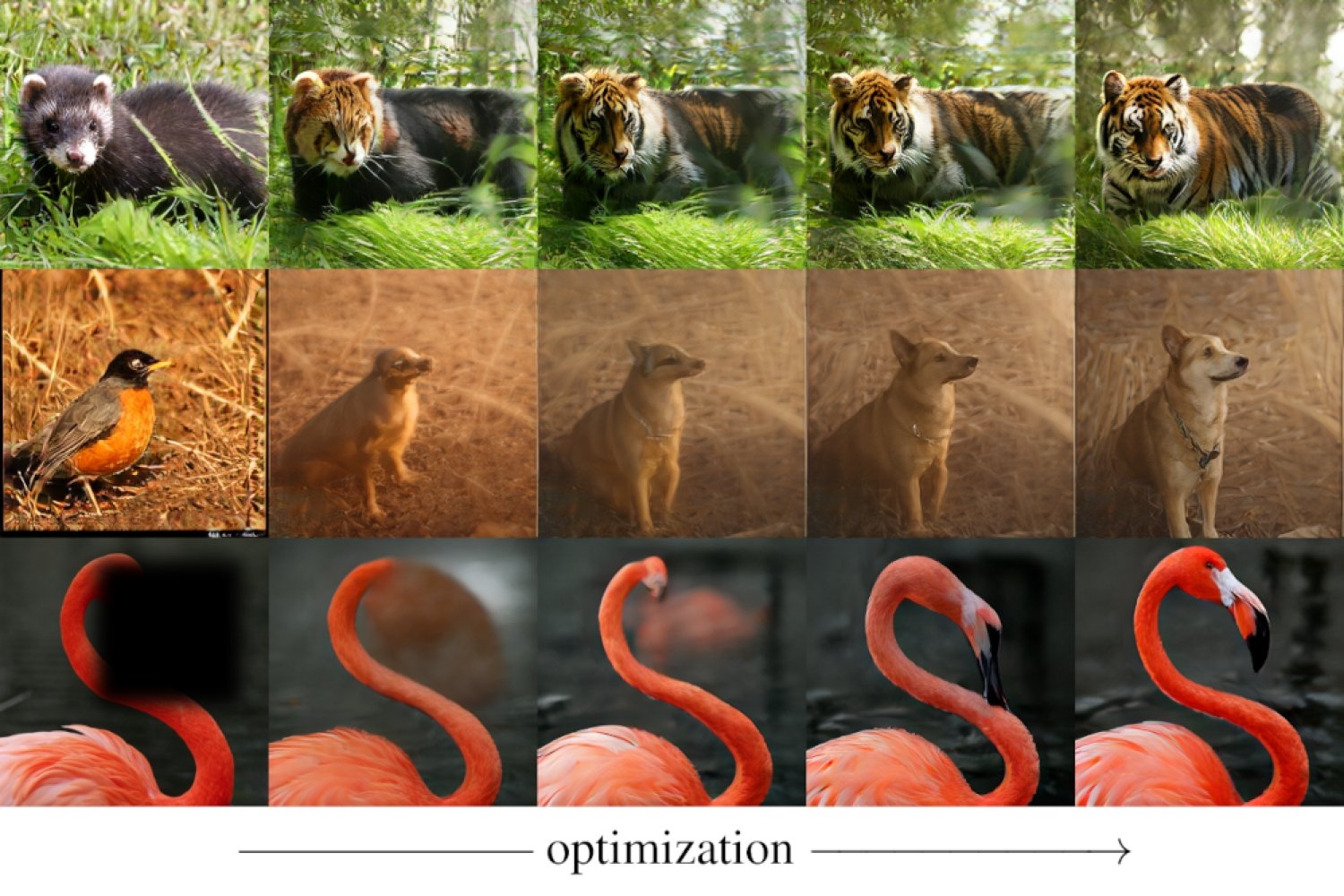

He and his associates achieved an even more significant outcome involving image creation. A system capable of generating visuals typically necessitates a tokenizer, which compresses and encodes visual data, along with a generator that can combine and arrange these compact representations to produce new images. The MIT researchers discovered a method to create images devoid of a generator entirely. Their novel approach employs a 1D tokenizer and a so-called detokenizer (also referred to as a decoder), which can reconstruct an image from a sequence of tokens. However, with the guidance from a standard neural network known as CLIP — which cannot generate images independently but can evaluate how well a specific image corresponds with a textual prompt — the team was able to transform an image of a red panda, for instance, into a tiger. Additionally, they could generate images of a tiger, or any desired form, starting entirely from scratch — from a scenario where all the tokens are initially assigned random values (and then iteratively adjusted so that the reconstructed image increasingly aligns with the desired text prompt).

The group proved that with this identical setup — relying on a tokenizer and detokenizer, yet devoid of a generator — they could also perform “inpainting,” which entails filling in elements of images that had somehow been obscured. Bypassing the use of a generator for specific tasks could lead to a notable decrease in computational expenses since generators, as previously noted, typically require substantial training.

What might appear peculiar regarding this team’s contributions, He clarifies, “is that we didn’t invent anything novel. We didn’t create a 1D tokenizer, nor did we originate the CLIP model. But we did uncover that new capabilities can emerge when you combine all these components together.”

“This work redefines the function of tokenizers,” comments Saining Xie, a computer scientist at New York University. “It illustrates that image tokenizers — tools generally utilized merely for compressing images — can indeed accomplish much more. The fact that a simple (yet highly compressed) 1D tokenizer can manage tasks like inpainting or text-guided editing, without necessitating the training of a full-fledged generative model, is rather astonishing.”

Zhuang Liu of Princeton University concurs, acknowledging that the MIT group’s work “exemplifies that we can generate and manipulate images in a manner that is significantly simpler than we previously assumed. Essentially, it demonstrates that image generation can be a byproduct of a highly effective image compressor, potentially diminishing the cost of producing images several times over.”

There could be numerous applications beyond the realm of computer vision, Karaman proposes. “For example, we could contemplate tokenizing the actions of robots or autonomous vehicles similarly, which may rapidly expand the scope of this work.”

Lao Beyer envisions along similar lines, emphasizing that the extreme degree of compression enabled by 1D tokenizers allows for “some remarkable things,” which could be applied to other sectors. For instance, in the field of autonomous vehicles, which is among his research interests, the tokens could signify, instead of visuals, the various paths that a vehicle might undertake.

Xie is also captivated by the potential applications that may arise from these groundbreaking ideas. “There are genuinely exciting use cases this could unlock,” he states.