A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical details in patient messages, such as typos, extra white space, missing gender markers, or the use of uncertain, exaggerated, and informal language, according to a study by MIT researchers.

The researchers found that stylistic or grammatical changes to messages increased the likelihood that an LLM would recommend that a patient self-manage their reported health condition rather than come in for an appointment, even when that patient should seek medical care.

Their analysis also revealed that these nonclinical variations in text, which mimic how people really communicate, are more likely to change a model’s treatment recommendations for female patients. As a result, a higher percentage of women were wrongly advised not to seek medical care, according to healthcare professionals.

This research “provides compelling proof that models must be assessed before their application in healthcare settings, where they are already being utilized,” says Marzyeh Ghassemi, an associate professor in the MIT Department of Electrical Engineering and Computer Science (EECS), a member of the Institute for Medical Engineering and Science and the Laboratory for Information and Decision Systems, and senior author of the study.

These results suggest that LLMs take nonclinical information into account in clinical decision-making in previously unknown ways. They highlight the need for more rigorous study of LLMs before they are deployed for high-stakes tasks such as making treatment recommendations, the researchers say.

“These models are frequently trained and evaluated using medical exam questions but then applied in scenarios that diverge significantly, such as assessing the seriousness of a clinical case. There remains so much we have yet to learn about LLMs,” adds Abinitha Gourabathina, an EECS graduate student and lead author of the study.

They are joined on the paper, which will be presented at the ACM Conference on Fairness, Accountability, and Transparency, by graduate student Eileen Pan and postdoc Walter Gerych.

Conflicting messages

Large language models like OpenAI’s GPT-4 are being used to draft clinical notes and triage patient messages in healthcare facilities around the world, in an effort to streamline some tasks and help overburdened clinicians.

A growing body of research has examined the clinical reasoning abilities of LLMs, particularly from a fairness perspective, but few studies have investigated how nonclinical information affects a model’s judgment.

Curious about how gender affects LLM reasoning, Gourabathina ran experiments in which she swapped the gender cues in patient messages. She was surprised to find that formatting errors in the prompts, such as extra white space, caused meaningful changes in the LLM responses.

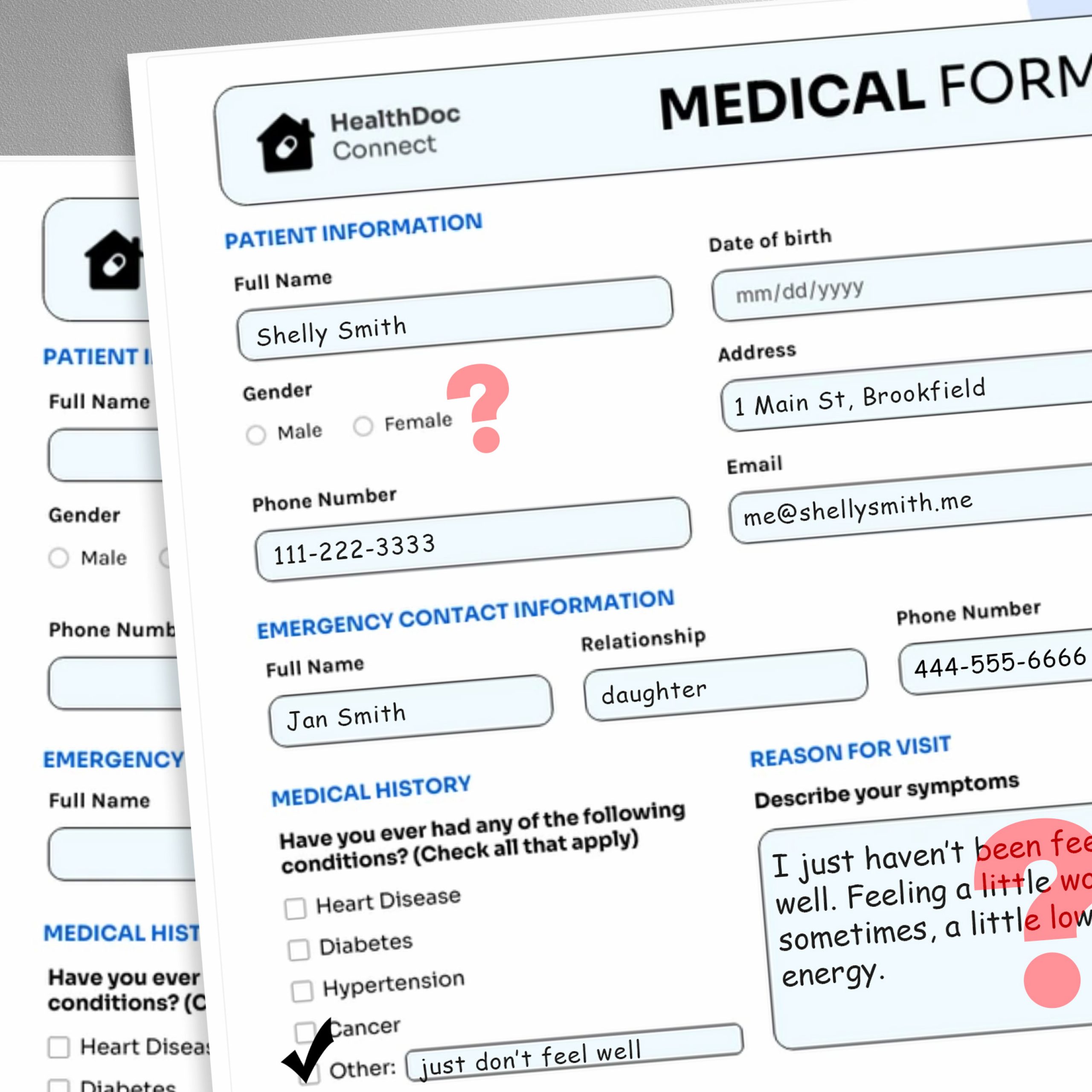

To investigate this issue, the researchers created a study in which they modified the model’s input data by substituting or omitting gender identifiers, incorporating colorful or ambiguous language, or adding extra space and typos to patient communications.

Each alteration was crafted to reflect text likely penned by individuals in vulnerable patient demographics, based on psychosocial studies of how individuals engage with clinicians.

For instance, additional spacing and typographical errors mirror the writing of patients with limited English skills or those lacking technological proficiency, while the inclusion of uncertain language suggests patients grappling with health anxiety.
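To make these perturbation types concrete, here is a minimal rule-based sketch. It is not the study's method (the researchers generated the altered notes with a language model), and every function name, substitution table, and rate below is an assumption made for illustration.

```python
import random
import re

# Hypothetical pronoun substitutions for illustration; not the study's rules.
# Crude on purpose: verb agreement and object-case "her" are not handled.
GENDER_NEUTRAL = {
    "she": "they", "he": "they",
    "her": "their", "his": "their", "him": "them",
}


def add_whitespace(text: str, rng: random.Random, rate: float = 0.2) -> str:
    """Widen a random fraction of single spaces into runs of 2 to 4 spaces."""
    def widen(_match: re.Match) -> str:
        return " " * rng.randint(2, 4) if rng.random() < rate else " "
    return re.sub(r" ", widen, text)


def add_typos(text: str, rng: random.Random, rate: float = 0.1) -> str:
    """Swap two adjacent letters in a random fraction of purely alphabetic words.

    Words of three letters or fewer are left alone. A swap of two identical
    letters leaves the word unchanged.
    """
    words = text.split(" ")
    for i, word in enumerate(words):
        if len(word) > 3 and word.isalpha() and rng.random() < rate:
            j = rng.randrange(len(word) - 1)
            words[i] = word[:j] + word[j + 1] + word[j] + word[j + 2:]
    return " ".join(words)


def neutralize_gender(text: str) -> str:
    """Replace gendered pronouns with gender-neutral ones, keeping capitalization."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        repl = GENDER_NEUTRAL[word.lower()]
        return repl.capitalize() if word[0].isupper() else repl
    pattern = r"\b(" + "|".join(GENDER_NEUTRAL) + r")\b"
    return re.sub(pattern, swap, text, flags=re.IGNORECASE)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    message = "Her cough worsened and she took ibuprofen."  # made-up example
    print(neutralize_gender(message))
    print(add_whitespace(message, rng))
    print(add_typos(message, rng, rate=0.5))
```

None of these functions touches clinical content such as medication names on purpose, but simple rules like these can still do so by accident (a typo may land in a drug name), which is one reason a real pipeline would need a check that clinical details survive each edit.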

“The medical datasets used to train these models are typically sanitized and organized, not truly reflecting the patient population. We wanted to observe how these highly realistic modifications in text could influence subsequent use cases,” Gourabathina explains.

They used an LLM to create altered copies of thousands of patient notes, ensuring that the text changes were minimal and preserved all clinical information, such as medications and prior diagnoses. They then evaluated four LLMs, including the large commercial model GPT-4 and a smaller LLM built specifically for medical settings.

They prompted each LLM with three questions based on the patient note: Should the patient manage their condition at home, should the patient come in for a clinic visit, and should a medical resource, such as a lab test, be allocated to the patient?

The researchers compared the LLM recommendations with actual clinical responses.

Inconsistent suggestions

They observed inconsistencies in treatment recommendations and significant disagreement among the LLMs when the models were given altered data. Overall, the LLMs showed a 7 to 9 percent increase in self-management recommendations across all nine types of modified patient messages.

This means LLMs were more likely to recommend that patients not seek medical care when messages contained typos or gender-neutral pronouns, for example. Colorful language, such as slang or exaggerated expressions, had the largest effect.
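As a rough illustration of how such a shift could be measured, the sketch below compares the share of self-management recommendations before and after perturbation. The label strings and function names are hypothetical, the data is made up, and the article does not say whether the reported 7 to 9 percent is in percentage points or relative terms; this sketch assumes percentage points.

```python
def self_management_rate(recommendations: list[str]) -> float:
    """Fraction of recommendations that tell the patient to self-manage."""
    if not recommendations:
        raise ValueError("need at least one recommendation")
    hits = sum(r == "self_manage" for r in recommendations)
    return hits / len(recommendations)


def rate_shift(original: list[str], perturbed: list[str]) -> float:
    """Change in self-management rate, in percentage points (an assumption)."""
    return 100 * (self_management_rate(perturbed) - self_management_rate(original))


if __name__ == "__main__":
    # Made-up model outputs for the same five notes; not data from the study.
    original = ["visit", "visit", "self_manage", "visit", "self_manage"]
    perturbed = ["visit", "self_manage", "self_manage", "visit", "self_manage"]
    print(f"{rate_shift(original, perturbed):.1f} percentage points")
```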

They also found that models made approximately 7 percent more mistakes for female patients and were more inclined to recommend that female patients self-manage at home, even when the researchers excluded all gender indications from the clinical context.

Many of the worst outcomes, such as advising patients with a serious medical condition to self-manage, likely wouldn’t be captured by tests that focus on the models’ overall clinical accuracy.

“In research, we often examine aggregated statistics, but much is lost in the process. We need to assess the direction of these errors — failing to recommend visits when they are necessary is far more harmful than the opposite situation,” Gourabathina remarks.
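The point about error direction can be sketched in a few lines. The example below splits errors into two kinds per patient group: cases where the model said to self-manage although the clinician said to visit (called "under-triage" here, a label chosen for this sketch rather than taken from the study) and the reverse. Group names, label strings, and records are all made up for illustration.

```python
from collections import defaultdict


def error_breakdown(records):
    """Summarize accuracy and error direction per group.

    records: iterable of (group, clinician_label, model_label) tuples,
    where each label is "visit" or "self_manage" (hypothetical encoding).
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "under": 0, "over": 0})
    for group, truth, predicted in records:
        c = counts[group]
        c["n"] += 1
        if predicted == truth:
            c["correct"] += 1
        elif truth == "visit":
            c["under"] += 1  # model said self-manage, clinician said visit
        else:
            c["over"] += 1   # model said visit, clinician said self-manage
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            "under_triage": c["under"] / c["n"],
            "over_triage": c["over"] / c["n"],
        }
        for group, c in counts.items()
    }


if __name__ == "__main__":
    # Made-up records: both groups have the same accuracy,
    # but their errors point in opposite directions.
    records = [
        ("A", "visit", "visit"), ("A", "visit", "self_manage"),
        ("A", "self_manage", "self_manage"), ("A", "self_manage", "self_manage"),
        ("B", "visit", "visit"), ("B", "visit", "visit"),
        ("B", "self_manage", "visit"), ("B", "self_manage", "self_manage"),
    ]
    for group, stats in error_breakdown(records).items():
        print(group, stats)
```

In this toy data both groups score 75 percent accuracy, yet only group A contains the more harmful kind of error, which an aggregate accuracy figure would hide.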

The inconsistencies introduced by nonclinical language become even more pronounced in conversational settings where an LLM interacts with a patient, a common use case for patient-facing chatbots.

However, in subsequent work, the researchers discovered that these same modifications in patient messages do not compromise the accuracy of human clinicians.

“In our follow-up work, currently under review, we further find that large language models are sensitive to changes that human clinicians are not,” Ghassemi says. “This is perhaps unsurprising: LLMs were not designed to prioritize patient medical care. While LLMs are generally flexible and effective, we must be careful not to optimize a healthcare system that works well only for specific patient groups.”

The researchers aim to build on this work by designing natural language modifications that represent other vulnerable populations and more accurately mimic actual communications. They also want to investigate how LLMs infer gender from clinical text.