
The ocean is teeming with life. But unless you get up close, much of the underwater world stays hidden. That is because water acts as an effective veil: Light entering the ocean bends, scatters, and quickly dims as it travels through the dense medium of water and reflects off the constant haze of suspended particles. This makes it extremely difficult to capture the true colors of objects in the ocean without photographing them at close range.

Now, a team from MIT and the Woods Hole Oceanographic Institution (WHOI) has developed an image-analysis tool that cuts through the ocean’s optical effects and generates images of underwater environments that look as if the water had been removed, revealing an ocean scene’s true colors. The team paired the color-correcting tool with a computational model that converts images of a scene into a three-dimensional underwater “world” that can then be explored virtually.

The researchers have named the new tool “SeaSplat,” a reference both to its underwater application and to a method known as 3D Gaussian splatting (3DGS), which takes images of a scene and stitches them together into a complete, three-dimensional representation that can be viewed in detail from any angle.

“With SeaSplat, it can explicitly model what the water is doing, and as a result, it can in some ways remove the water and produce better 3D models of an underwater scene,” says MIT graduate student Daniel Yang.

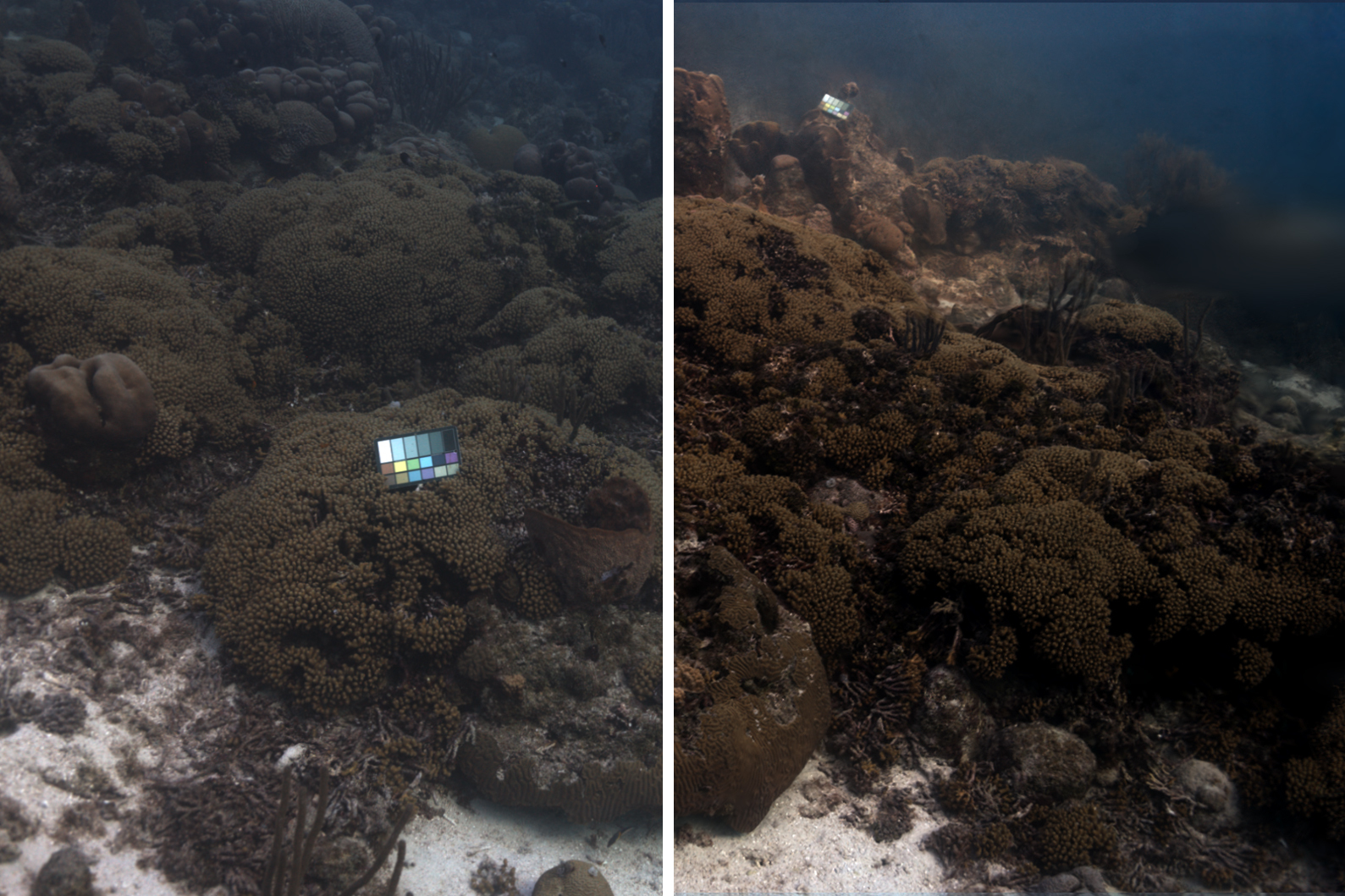

The researchers applied SeaSplat to images of the seafloor taken by divers and underwater vehicles in several locations, including the U.S. Virgin Islands. From these images, the method generated 3D “worlds” that were more accurate, more vivid, and more varied in color than those produced by previous methods.

The team says SeaSplat could help marine biologists monitor the health of certain ocean communities. For instance, as an underwater robot explores and photographs a coral reef, SeaSplat could process the images at the same time and build a true-color 3D representation, which scientists could then virtually “navigate” at their own pace and along their own path to assess the scene, for example by looking for signs of coral bleaching.

“Bleaching looks white up close, but may look blue and hazy from a distance, making it hard to detect,” says Yogesh Girdhar, an associate scientist at WHOI. “Coral bleaching and different coral species could be identified more easily with SeaSplat imagery, which shows the true colors in the ocean.”

Girdhar and Yang will present a paper describing SeaSplat at the IEEE International Conference on Robotics and Automation (ICRA). Their co-author on the study is John Leonard, a professor of mechanical engineering at MIT.

Aquatic Optics

In the ocean, the color and clarity of objects are distorted by the way light travels through water. In recent years, researchers have developed color-correcting tools that aim to reproduce the true colors in the ocean. These efforts involved adapting tools originally developed for environments out of water, for instance to reveal the true color of features in foggy conditions. One recent work accurately reproduces true colors in the ocean with an algorithm named “Sea-Thru,” though the method requires so much computational power that it is difficult to use for producing 3D scene models.

In parallel, others have made advances in 3D Gaussian splatting, with tools that seamlessly stitch images of a scene together and intelligently fill in any gaps to create a complete 3D version of the scene. These 3D worlds enable “novel view synthesis,” meaning that someone can view the generated 3D scene not only from the viewpoints of the original images, but from any angle and distance.
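
At the heart of 3D Gaussian splatting is a blending step: the scene is stored as many small, semi-transparent blobs (Gaussians), and each pixel’s color is built up by layering the blobs that cover it from nearest to farthest. The Python sketch below illustrates that front-to-back alpha blending for a single pixel. It is a simplified illustration, not SeaSplat’s code: it assumes the 3D Gaussians have already been projected onto the image as 2D ellipses with known depths, and the dictionary layout and function names are hypothetical.

```python
import numpy as np


def gaussian_alpha(pixel, mean, cov, opacity):
    """Opacity that one projected 2D Gaussian contributes at a pixel."""
    d = pixel - mean
    # Squared Mahalanobis distance of the pixel from the Gaussian's center
    m = d @ np.linalg.solve(cov, d)
    return opacity * np.exp(-0.5 * m)


def composite_pixel(pixel, gaussians):
    """Front-to-back alpha blending of projected 2D Gaussians at one pixel.

    Each Gaussian is a dict (hypothetical layout) with keys
    "mean" (2,), "cov" (2, 2), "color" (3,), "opacity" and "depth".
    """
    color = np.zeros(3)
    transmittance = 1.0  # share of the pixel not yet covered by nearer Gaussians
    for g in sorted(gaussians, key=lambda s: s["depth"]):  # nearest first
        alpha = gaussian_alpha(pixel, g["mean"], g["cov"], g["opacity"])
        color += transmittance * alpha * np.asarray(g["color"], dtype=float)
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop once the pixel is effectively opaque
            break
    return color


pixel = np.array([10.0, 10.0])
splats = [
    {"mean": np.array([10.0, 10.0]), "cov": np.eye(2) * 4.0,
     "color": [0.0, 0.0, 1.0], "opacity": 1.0, "depth": 3.0},  # blue, behind
    {"mean": np.array([10.0, 10.0]), "cov": np.eye(2) * 4.0,
     "color": [1.0, 0.0, 0.0], "opacity": 0.5, "depth": 1.0},  # red, in front
]
print(composite_pixel(pixel, splats))  # values [0.5, 0.0, 0.5]: half red, half blue
```

Because the blobs live in 3D, re-sorting them for a new camera position and repeating this blend is what makes “novel view synthesis” possible in this kind of sketch.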

However, 3DGS has so far been applied successfully only to environments out of water. Efforts to adapt 3D reconstruction to underwater imagery have been hampered mainly by two underwater optical effects: backscatter and attenuation. Backscatter occurs when light reflects off tiny particles in the ocean, creating a veil-like haze. Attenuation is the phenomenon by which light of certain wavelengths weakens, or fades, with distance. In the ocean, for instance, red objects fade more than blue objects when viewed from farther away.
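
The two effects are often described with a simplified per-channel image formation model, the kind Sea-Thru builds on: the recorded color is the true color dimmed by a factor exp(−β_D·z), plus a haze term B∞·(1 − exp(−β_B·z)) that grows with the distance z. The Python sketch below applies that model to a single surface color. The coefficient values are invented for illustration, and the names are hypothetical rather than taken from SeaSplat.

```python
import numpy as np

# Made-up per-channel (R, G, B) coefficients in 1/m; real values depend on the water.
BETA_DIRECT = np.array([0.40, 0.10, 0.05])       # attenuation: red fades fastest
BETA_BACKSCATTER = np.array([0.30, 0.30, 0.30])  # how quickly the haze builds up
VEILING_LIGHT = np.array([0.05, 0.20, 0.35])     # color of the haze far away


def underwater_color(true_rgb, distance_m):
    """Color a camera would record for a surface of color true_rgb at distance_m."""
    true_rgb = np.asarray(true_rgb, dtype=float)
    direct = true_rgb * np.exp(-BETA_DIRECT * distance_m)                # attenuation
    haze = VEILING_LIGHT * (1.0 - np.exp(-BETA_BACKSCATTER * distance_m))  # backscatter
    return direct + haze


red_coral = [0.9, 0.2, 0.2]
for z in (0.5, 2.0, 8.0):
    print(f"{z:>4} m:", np.round(underwater_color(red_coral, z), 3))
```

With these assumed coefficients, the red surface’s red channel drops below its blue channel by 8 m, and at very large distances every color converges to the haze color, which is the distance-dependent shift that confuses 3D reconstruction.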

Out of water, the colors of objects look more or less the same regardless of the angle or distance from which they are viewed. In water, however, colors can quickly shift and fade depending on the viewpoint. When 3DGS methods try to stitch underwater images into a unified 3D whole, they struggle to resolve objects, because backscatter and attenuation distort the objects’ colors differently from different angles.

“One vision of underwater robotic perception that we have is: Imagine if you could drain all the water from the ocean. What would you see?” Leonard remarks.

A Model Swim

In their new work, Yang and his colleagues developed a color-correcting algorithm that accounts for the optical effects of backscatter and attenuation. The algorithm estimates how much each pixel in an image has been altered by backscatter and attenuation, then effectively removes those aquatic effects to compute what the pixel’s true color must be.
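
Under the common simplified water model, in which each color channel at distance z is recorded as true color × exp(−β_D·z) + B∞·(1 − exp(−β_B·z)), a per-pixel correction amounts to solving for the true color: subtract the haze, then undo the dimming. The Python sketch below does this for a whole image. It assumes the distance to each pixel and the water coefficients are already known (in practice they would have to be estimated), and the coefficient values and function name are hypothetical, not SeaSplat’s.

```python
import numpy as np

# Illustrative per-channel (R, G, B) water coefficients in 1/m (assumed, not measured).
BETA_DIRECT = np.array([0.40, 0.10, 0.05])
BETA_BACKSCATTER = np.array([0.30, 0.30, 0.30])
VEILING_LIGHT = np.array([0.05, 0.20, 0.35])


def remove_water(observed, depth):
    """Estimate true colors from an (H, W, 3) image and an (H, W) depth map in meters."""
    z = depth[..., np.newaxis]  # broadcast each pixel's distance over R, G, B
    haze = VEILING_LIGHT * (1.0 - np.exp(-BETA_BACKSCATTER * z))
    direct = np.clip(observed - haze, 0.0, None)  # 1) subtract the backscatter
    restored = direct * np.exp(BETA_DIRECT * z)   # 2) undo the attenuation
    return np.clip(restored, 0.0, 1.0)            # keep colors in the valid range
```

Undoing attenuation multiplies by exp(β_D·z), so in this sketch noise in distant, dark pixels (especially in the red channel) is amplified; good estimates of distance and water coefficients matter most for far-away parts of a scene.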

Yang then integrated the color-correcting algorithm with a 3D Gaussian splatting model to create SeaSplat, which can quickly analyze underwater images of a scene and produce a true-color, 3D virtual version of the same scene that can be explored in detail from any angle and distance.
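
How the two pieces are wired together is not detailed here. One plausible design, assumed purely for illustration: the splats store water-free colors, the renderer blends both a color and a distance for each pixel, and the water model is then applied so the rendering can be compared with the real underwater photo while the 3D scene is being fitted. The Python sketch below shows that idea along a single camera ray; all names and coefficient values are hypothetical.

```python
import numpy as np

# Illustrative water coefficients (assumed); in practice they would be estimated.
BETA_DIRECT = np.array([0.40, 0.10, 0.05])
BETA_BACKSCATTER = np.array([0.30, 0.30, 0.30])
VEILING_LIGHT = np.array([0.05, 0.20, 0.35])


def render_clear(colors, alphas, depths):
    """Alpha-blend the splats along one ray into a water-free color and a depth."""
    order = np.argsort(depths)  # nearest splat first
    colors, alphas, depths = colors[order], alphas[order], depths[order]
    # Transmittance in front of each splat: product of (1 - alpha) of all nearer splats
    trans = np.concatenate(([1.0], np.cumprod(1.0 - alphas)[:-1]))
    weights = trans * alphas
    clear_color = weights @ colors                       # shape (3,)
    depth = weights @ depths / max(weights.sum(), 1e-8)  # weighted mean distance
    return clear_color, depth


def render_underwater(colors, alphas, depths):
    """Render the water-free color, then add attenuation and backscatter for its depth."""
    clear, z = render_clear(colors, alphas, depths)
    direct = clear * np.exp(-BETA_DIRECT * z)
    haze = VEILING_LIGHT * (1.0 - np.exp(-BETA_BACKSCATTER * z))
    return clear, direct + haze


def photo_loss(colors, alphas, depths, observed_rgb):
    """Mean squared error between the rendered underwater color and the real photo."""
    _, predicted = render_underwater(colors, alphas, depths)
    return float(np.mean((predicted - observed_rgb) ** 2))
```

In this sketch the water is added only at the very end, so the splats themselves hold the true colors; rendering `render_clear` alone from a new viewpoint gives the “drained” view, while `photo_loss` is what a fitting procedure would try to minimize.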

The team tested SeaSplat on several underwater scenes, including images taken in the Red Sea, in the Caribbean off the coast of Curaçao, and in the Pacific Ocean near Panama. These images, which the team drew from an existing dataset, represent a range of ocean locations and water conditions. They also tested SeaSplat on images taken by a remotely operated underwater robot in the U.S. Virgin Islands.

From the images of each ocean scene, SeaSplat generated a true-color 3D world that the researchers could explore digitally, for example by zooming in and out and viewing specific features from different perspectives. They found that objects in every scene kept their true colors even when viewed from different angles and distances, rather than fading as they would when seen through actual ocean water.

“Once it generates a 3D model, a scientist can simply ‘swim’ through the model as if they are scuba-diving and examine things in high detail, with authentic colors,” Yang states.

For now, the method requires substantial computing resources in the form of a desktop computer that would be too bulky to carry aboard an underwater robot. Still, SeaSplat could work for tethered operations, in which a vehicle connected to a ship explores and takes images that are sent up to the ship’s computer.

“This is the first approach that can quickly build high-quality 3D models with accurate colors underwater, generating and rendering them fast,” Girdhar says. “That will help quantify biodiversity and assess the health of coral reefs and other marine communities.”

This research was partially funded by the Investment in Science Fund at WHOI, and by the U.S. National Science Foundation.
