Discovering molecules that have the properties needed to create new medicines and materials is slow and expensive, consuming vast computational resources and months of human labor to narrow down the enormous space of possible candidates.

Large language models (LLMs) such as ChatGPT could streamline this process, but enabling an LLM to understand and reason about the atoms and bonds that form a molecule, the same way it does with the words that form sentences, has been a scientific stumbling block.

Researchers from MIT and the MIT-IBM Watson AI Lab have devised a promising approach that augments an LLM with other machine-learning models known as graph-based models, which are specifically designed for generating and predicting molecular structures.

Their method uses a base LLM to interpret natural language queries that specify desired molecular properties. It automatically switches between the base LLM and graph-based AI modules to design the molecule, explain the rationale, and generate a step-by-step plan to synthesize it. It interleaves text, graph, and synthesis step generation, combining words, graphs, and reactions into a common vocabulary for the LLM to process.

Compared with existing LLM-based approaches, this multimodal technique generated molecules that better matched user specifications and were more likely to have a valid synthesis plan, raising the success rate from 5 percent to 35 percent.

It also outperformed LLMs that are more than 10 times its size and that design molecules and synthesis routes using only text-based representations, suggesting that multimodality is key to the new system’s success.

“This could ideally serve as an end-to-end solution where we could automate the complete process of designing and making a molecule from start to finish. If an LLM could provide an answer within seconds, it would be a significant time-saver for pharmaceutical companies,” says Michael Sun, an MIT graduate student and co-author of a paper on this technique.

Sun’s co-authors include lead author Gang Liu, a graduate student at the University of Notre Dame; Wojciech Matusik, a professor of electrical engineering and computer science at MIT who leads the Computational Design and Fabrication Group within the Computer Science and Artificial Intelligence Laboratory (CSAIL); Meng Jiang, an associate professor at the University of Notre Dame; and senior author Jie Chen, a senior research scientist and manager at the MIT-IBM Watson AI Lab. The research will be presented at the International Conference on Learning Representations.

Best of both worlds

Large language models aren’t designed to grasp the intricacies of chemistry, which is one reason they face challenges with inverse molecular design, a method of identifying molecular structures that exhibit specific functionalities or properties.

LLMs convert text into representations called tokens, which they use to sequentially predict the next word in a sentence. But molecules are “graph structures,” composed of atoms and bonds with no particular ordering, which makes them difficult to encode as sequential text.

Conversely, advanced graph-based AI models depict atoms and molecular bonds as interlinked nodes and edges within a graph. Although these models are favored for inverse molecular design, they necessitate complex inputs, lack natural language comprehension, and produce outputs that can be hard to interpret.
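To make the ordering problem concrete, here is a minimal Python sketch, not taken from the researchers' code. It stores the heavy atoms of ethanol as a small graph and renumbers the atoms. The helper `relabel` and the data layout are assumptions made for illustration; the strings "CCO" and "OCC" follow SMILES notation, which the article does not name.

```python
def relabel(atoms, bonds, perm):
    """Renumber atoms so that old index i becomes perm[i].

    Only the labels change; the molecule itself is the same graph.
    """
    new_atoms = [None] * len(atoms)
    for old, new in enumerate(perm):
        new_atoms[new] = atoms[old]
    # Bonds are undirected, so each one is stored as an unordered pair.
    new_bonds = {frozenset((perm[i], perm[j])) for i, j in bonds}
    return new_atoms, new_bonds


# Ethanol with hydrogens omitted: C-C-O.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

# Reverse the numbering: the same molecule now reads O-C-C.
new_atoms, new_bonds = relabel(atoms, bonds, perm=[2, 1, 0])

# Written out as text, the two numberings give different strings
# for one and the same molecule.
text_a = "".join(atoms)      # "CCO"
text_b = "".join(new_atoms)  # "OCC"
```

A text-only model sees `text_a` and `text_b` as different token sequences, whereas a graph model that works on nodes and edges treats them as the same object.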

The MIT researchers combined an LLM with graph-based AI models into a unified framework that draws on the strengths of both.

Llamole, which stands for large language model for molecular discovery, uses a base LLM as a gatekeeper to interpret a user’s query: a plain-language request for a molecule with certain properties.

For example, a user might be looking for a molecule that can penetrate the blood-brain barrier and inhibit HIV, with a molecular weight of 209 and particular bond characteristics.

As the LLM generates text in response to the query, it switches between graph modules.

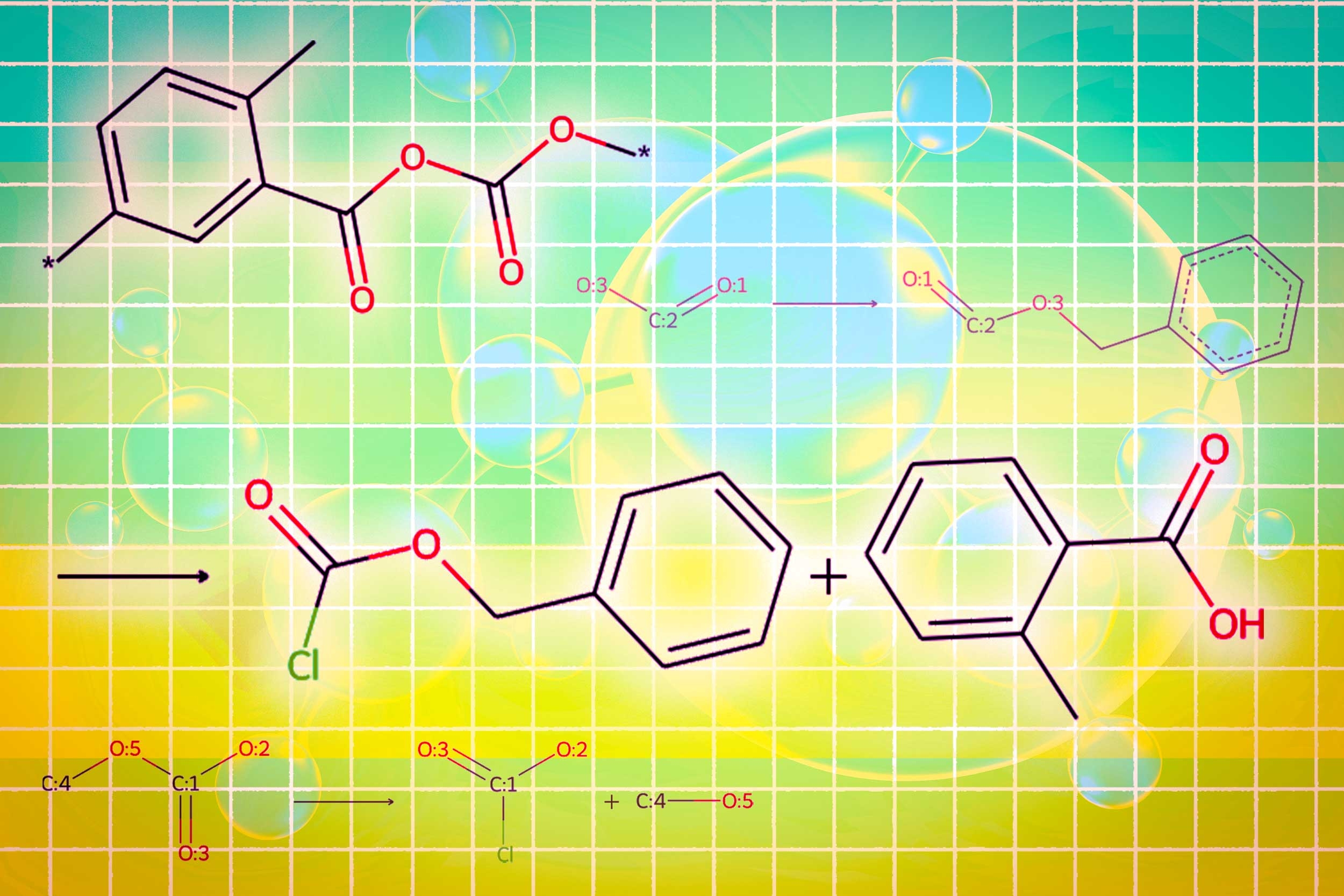

One module uses a graph diffusion model to generate the molecular structure conditioned on the input requirements. A second module uses a graph neural network to encode the generated molecular structure back into tokens for the LLM to process. The final graph module is a graph reaction predictor that takes an intermediate molecular structure as input and predicts a reaction step, searching for the exact sequence of steps needed to make the molecule from basic building blocks.

The researchers created a new type of trigger token that tells the LLM when to activate each module. When the LLM predicts a “design” trigger token, it switches to the module that sketches a molecular structure, and when it predicts a “retro” trigger token, it switches to the retrosynthetic planning module that predicts the next reaction step.

“The elegance of this system is that everything the LLM generates before activating a particular module gets fed into that module itself. The module learns to operate in a way that is consistent with what came before,” says Sun.

In the same way, the output of each module is encoded and fed back into the LLM’s generation process, so the LLM understands what each module did and can continue predicting tokens based on that information.
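The switching behavior described above can be sketched as a simple dispatch loop. This is an illustrative toy, not Llamole's implementation: the token spellings `<design>` and `<retro>`, the three stub functions, and the scripted token list that stands in for a real LLM are all assumptions.

```python
DESIGN, RETRO = "<design>", "<retro>"  # hypothetical trigger-token spellings


def design_module(context):
    """Stand-in for the graph diffusion model; returns a placeholder structure."""
    return "MOL_GRAPH"


def encode_module(item):
    """Stand-in for the graph encoder that turns module output into tokens."""
    return f"[encoded:{item}]"


def retro_module(context, structure):
    """Stand-in for the reaction predictor; returns one placeholder step."""
    return "REACTION_STEP"


def generate(scripted_tokens):
    """Interleave text tokens with module calls, driven by trigger tokens."""
    context, structure = [], None
    for token in scripted_tokens:  # a real LLM would predict each token
        if token == DESIGN:
            # The module receives everything generated so far.
            structure = design_module(context)
            # Its output is encoded and fed back into the running context.
            context.append(encode_module(structure))
        elif token == RETRO:
            step = retro_module(context, structure)
            context.append(encode_module(step))
        else:
            context.append(token)
    return context


output = generate(["Candidate:", DESIGN, "made", "by", RETRO])
```

In this toy run the trigger tokens never appear in `output`; each one is replaced by the encoded result of the module it activated, which mirrors how module outputs re-enter the generation stream.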

Better, simpler molecular structures

In the end, Llamole outputs an image of the molecular structure, a textual description of the molecule, and a step-by-step synthesis plan that details how to make it, down to individual chemical reactions.

In experiments on designing molecules that matched user specifications, Llamole outperformed 10 standard LLMs, four fine-tuned LLMs, and a state-of-the-art domain-specific method. At the same time, it raised the retrosynthetic planning success rate from 5 percent to 35 percent by generating higher-quality molecules, meaning they had simpler structures and lower-cost building blocks.

“On their own, LLMs struggle to figure out how to synthesize molecules because it requires a lot of multistep planning. Our method can generate better molecular structures that are also easier to synthesize,” says Liu.

To train and evaluate Llamole, the researchers built two datasets from scratch, since existing datasets of molecular structures lacked sufficient detail. They augmented hundreds of thousands of patented molecules with AI-generated natural language descriptions and customized description templates.

The dataset they built to fine-tune the LLM includes templates related to 10 molecular properties, so one limitation of Llamole is that it has been trained to design molecules considering only those 10 numerical properties.

In forthcoming work, the scientists aim to generalize Llamole so that it can incorporate any molecular property. Additionally, they plan to upgrade the graph modules to enhance Llamole’s retrosynthesis success rate.

In the long run, they hope to apply this approach beyond molecules, creating multimodal LLMs that can handle other types of graph-based data, such as interconnected sensors in a power grid or transactions in a financial market.

“Llamole illustrates the potential of employing large language models as a bridge to intricate data beyond textual descriptions, and we foresee them as a cornerstone interacting with other AI algorithms to resolve any graph-based challenges,” states Chen.

This research is funded, in part, by the MIT-IBM Watson AI Lab, the National Science Foundation, and the Office of Naval Research.