Have you ever seen a picture of an animal and wondered, “What is that creature?” TaxaBind, a new tool developed by computer scientists at the McKelvey School of Engineering at Washington University in St. Louis, can answer that question and more.

TaxaBind addresses the need for more robust, unified approaches to ecological problems by combining multiple models to perform species classification (What type of bear is that?), distribution mapping (Where can the cardinals be found?), and other ecology-related tasks. The tool can also serve as a starting point for larger ecological modeling studies, which scientists could use to predict shifts in plant and animal populations, the effects of climate change, or the impacts of human activity on ecosystems.

Srikumar Sastry, the lead author of the project, presented TaxaBind on March 2-3 at the IEEE/CVF Winter Conference on Applications of Computer Vision in Tucson, Ariz.

“With TaxaBind, we are unlocking the capabilities of multiple modalities within the ecological sphere,” Sastry remarked.

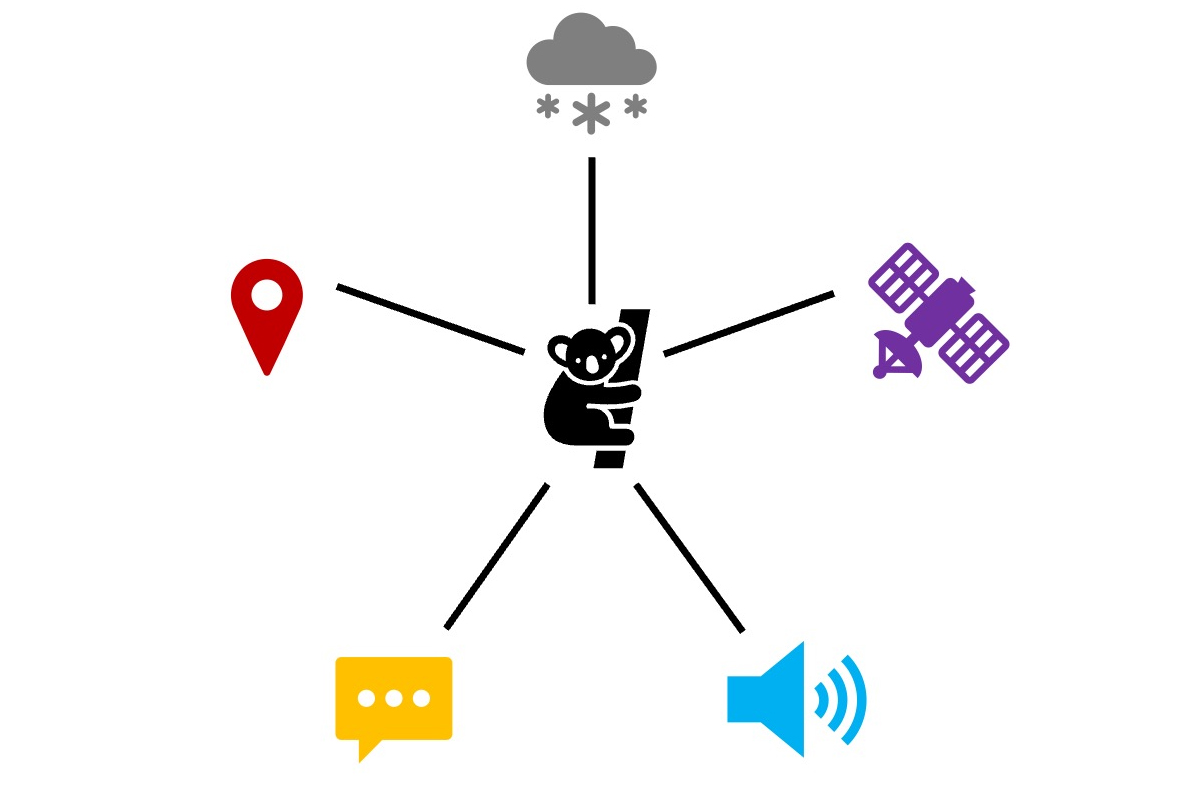

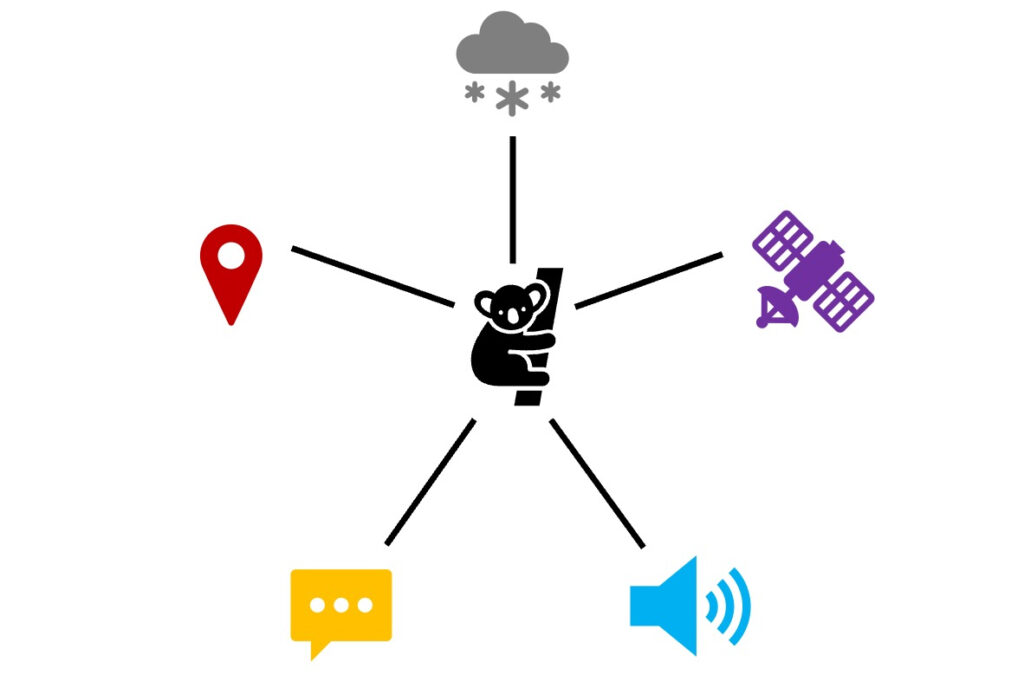

Sastry, a graduate student working with Nathan Jacobs, a professor of computer science and engineering, used a novel technique called multimodal patching to distill information from different modalities into a single unifying modality. Sastry likens this unifying modality to the “mutual friend” that links the other five modalities and sustains the interactions among them.


The article “Multimodal AI tool supports study of ecosystems” first appeared on The Source.