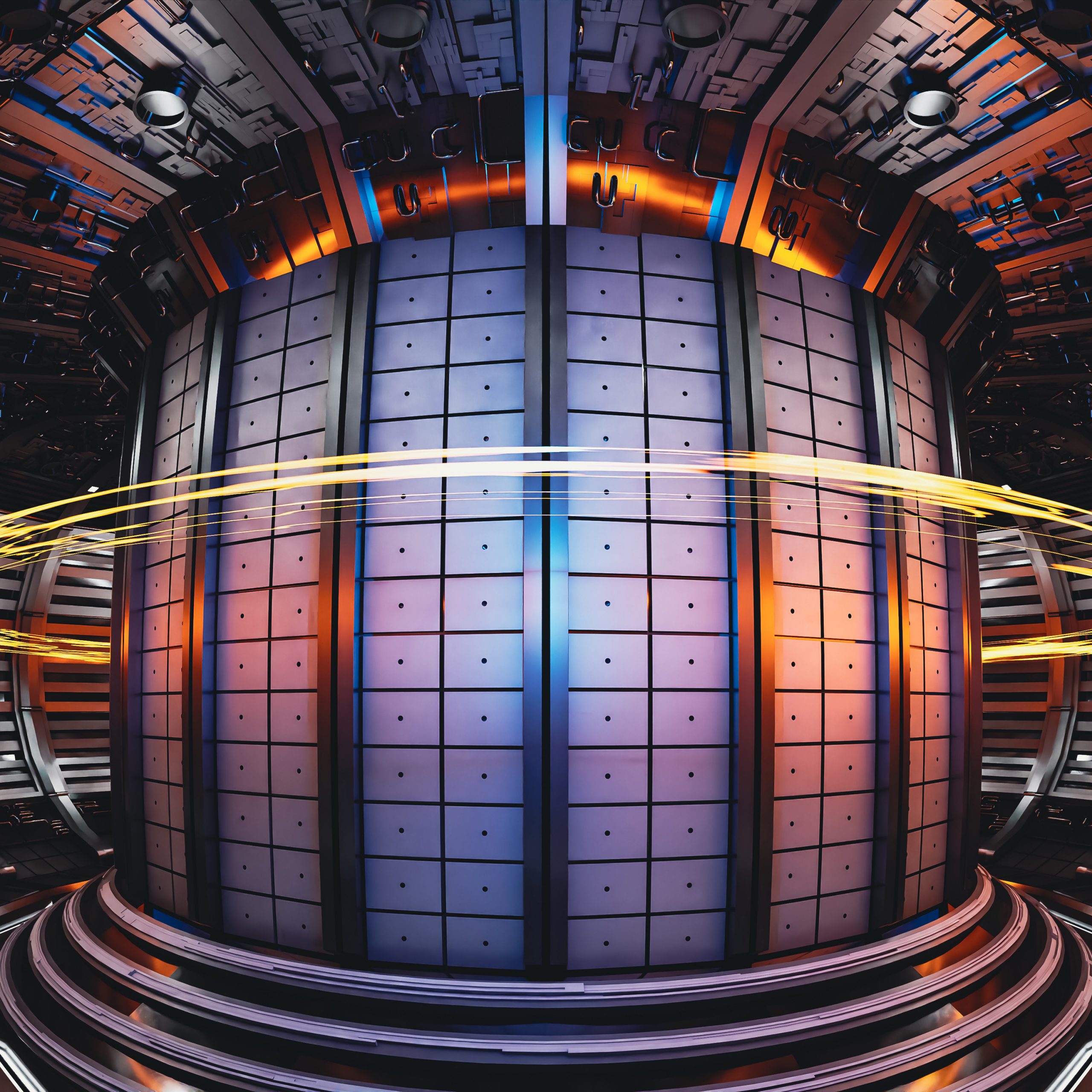

Tokamaks are machines designed to recreate and harness the process that powers the sun. These fusion devices use powerful magnets to confine a plasma that is hotter than the sun’s core, pushing the atoms in the plasma to fuse and release energy. If tokamaks can operate safely and efficiently, they could one day deliver clean and inexhaustible fusion energy.

Currently, several experimental tokamaks are operational worldwide, with additional projects in progress. Most are small-scale experimental machines constructed to explore how the devices can generate plasma and harness its energy. One major hurdle for tokamaks is determining how to safely and consistently shut down a plasma current circulating at velocities up to 100 kilometers per second, with temperatures exceeding 100 million degrees Celsius.

Such “rampdowns” are essential when a plasma starts to lose stability. To avert further disruption and potential damage to the device’s interior, operators ramp down the plasma current. However, the rampdown itself can occasionally destabilize the plasma. In some machines, rampdowns have left scratches and scarring on the tokamak’s interior. This damage is minor, but it still takes significant time and resources to repair.

Researchers at MIT have now developed a method to predict how the plasma in a tokamak will behave during a rampdown. The team combined machine-learning tools with a physics-based model of plasma dynamics to simulate the plasma’s behavior, and any instabilities that could arise, as the plasma is ramped down and turned off. They trained and validated the new model on plasma data from an experimental tokamak in Switzerland. The results indicated that the method quickly learned how the plasma would evolve as it was ramped down in different ways. Moreover, the method reached a high level of accuracy with a relatively small amount of data. This training efficiency is encouraging, because each experimental tokamak run is costly and high-quality data is therefore scarce.

The new model, which the team showcases this week in an open-access Nature Communications paper, holds potential for enhancing the safety and dependability of future fusion power facilities.

“For fusion to become a practical energy source, it must be dependable,” states lead author Allen Wang, a graduate student in aeronautics and astronautics who is part of the Disruption Group at MIT’s Plasma Science and Fusion Center (PSFC). “To ensure reliability, we must excel at managing our plasmas.”

The study’s MIT co-authors include PSFC Principal Research Scientist and Disruption Group leader Cristina Rea, and members of the Laboratory for Information and Decision Systems (LIDS) Oswin So, Charles Dawson, and Professor Chuchu Fan. The other co-authors are Mark (Dan) Boyer of Commonwealth Fusion Systems and collaborators from the Swiss Plasma Center in Switzerland.

“A fragile balance”

Tokamaks are experimental fusion devices that were first built in the Soviet Union in the 1950s. The name comes from a Russian acronym meaning “toroidal chamber with magnetic coils.” As the name suggests, a tokamak is toroidal, or donut-shaped. It uses strong magnets to confine a gas and drive it to temperatures and energies high enough that the atoms in the resulting plasma can fuse and release energy.

At present, tokamak experiments operate at relatively low energies, and few approach the size and output needed to generate safe, reliable, usable energy. Disruptions in experimental, low-energy tokamaks are generally not a problem. However, as fusion devices scale up to grid-scale dimensions, controlling much higher-energy plasmas in all phases will be critical to keeping the machine operating safely and efficiently.

“Uncontrolled plasma terminations, even during rampdown, can produce intense thermal fluxes that harm the internal walls,” Wang observes. “Often, particularly with high-performance plasmas, rampdowns may actually push the plasma closer to certain instability limits. So, it’s a fragile balance. There’s considerable emphasis now on managing instabilities so we can routinely and reliably de-energize these plasmas safely. Yet, there have been relatively few studies on how to do this effectively.”

Reducing the pulse

Wang and his colleagues developed a model to predict how a plasma will behave during a tokamak rampdown. They could have simply applied machine-learning tools such as a neural network to learn the signs of instabilities in plasma data, but “you would need an astounding amount of data” for such tools to detect the subtle and transient changes in extremely high-temperature, high-energy plasmas, Wang explains.

Instead, the researchers paired a neural network with an existing model that simulates plasma dynamics from fundamental physical principles. With this combination of machine learning and physics-based plasma simulation, the team needed only a few hundred pulses at low performance, and a handful of pulses at high performance, to train and validate the new model.
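To illustrate why pairing known physics with a learned component can cut the amount of data required, here is a toy sketch. It is not the model from the study: the "physics" is a simple exponential decay, the "learned" part is a single coefficient fitted by least squares rather than a neural network, and every name and number (`physics_rate`, `true_rate`, `tau`, the quadratic loss term) is a hypothetical chosen for illustration.

```python
# Toy sketch only. Nothing here comes from the paper; all names and
# numbers are illustrative assumptions.

def physics_rate(w, tau=0.5):
    """Known physics (assumed): exponential relaxation, dW/dt = -W / tau."""
    return -w / tau

def true_rate(w):
    """Hidden 'real' dynamics used to make synthetic data:
    the known physics plus an unmodeled quadratic loss term."""
    return physics_rate(w) - 0.3 * w * w

def simulate(rate, w0, dt=0.001, steps=1000):
    """Forward-Euler integration of dW/dt = rate(W); returns all states."""
    traj = [w0]
    for _ in range(steps):
        traj.append(traj[-1] + dt * rate(traj[-1]))
    return traj

def fit_correction(samples):
    """Least-squares fit of c in: observed_rate - physics_rate = c * w**2.
    This one-parameter fit stands in for the neural network."""
    num = sum((r - physics_rate(w)) * w * w for w, r in samples)
    den = sum(w ** 4 for w, _ in samples)
    return num / den

# Only four (state, observed rate) measurements are used for training.
samples = [(w, true_rate(w)) for w in (0.5, 1.0, 1.5, 2.0)]
c = fit_correction(samples)

def hybrid_rate(w):
    """Physics model plus the learned correction."""
    return physics_rate(w) + c * w * w

def max_error(a, b):
    """Largest pointwise gap between two trajectories."""
    return max(abs(x - y) for x, y in zip(a, b))

truth = simulate(true_rate, 2.0)
physics_only = simulate(physics_rate, 2.0)
hybrid = simulate(hybrid_rate, 2.0)

print("physics-only error:", max_error(truth, physics_only))
print("hybrid error:      ", max_error(truth, hybrid))
```

Because the physics term already explains most of the behavior, the learned part only has to capture the leftover discrepancy, which in this toy case takes a handful of samples. A real plasma model would have many coupled quantities and a far more flexible learned component.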

The data for the new study came from the TCV, the Swiss “variable configuration tokamak” operated by the Swiss Plasma Center at EPFL (the Swiss Federal Institute of Technology Lausanne). The TCV is a small experimental fusion device used for research, often as a test bed for next-generation solutions. Wang used data from several hundred TCV plasma pulses, which included properties of the plasma such as its temperature and energies during each pulse’s ramp-up, run, and rampdown. He trained the model on this data, then tested it and found that it could accurately predict the plasma’s evolution from the initial conditions of a particular tokamak run.

The researchers also developed an algorithm to translate the model’s predictions into practical “trajectories,” or plasma-managing instructions that a tokamak controller can carry out automatically, such as adjusting the magnets or temperature to keep the plasma stable. They applied the algorithm to several TCV runs and found that it produced trajectories that safely ramped down a plasma pulse, in some cases faster and without disruptions, compared with runs that did not use the new method.
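The idea of turning predictions into a trajectory can be sketched in a few lines. The example below is a toy under stated assumptions, not the algorithm from the study: the predictor is a made-up one-variable model of a hypothetical "instability proxy", the trajectory is reduced to a single constant ramp rate, and the search is a plain scan over candidate rates. All names and constants (`predict_peak_proxy`, `fastest_safe_rate`, `k`, `tau`, `limit`) are illustrative.

```python
# Toy sketch only. The predictor below is a made-up stand-in, not the
# model or the trajectory algorithm described in the study.

def predict_peak_proxy(ramp_rate, i0=1.0, k=0.5, tau=0.2, dt=0.001):
    """Simulate a linear current ramp from i0 down to zero and return the
    peak of a hypothetical instability proxy s, which is driven by the
    ramp speed and relaxes over time: ds/dt = k * ramp_rate - s / tau."""
    current, s, peak = i0, 0.0, 0.0
    while current > 0.0:
        s += dt * (k * ramp_rate - s / tau)
        peak = max(peak, s)
        current -= dt * ramp_rate
    return peak

def fastest_safe_rate(candidates, limit):
    """Return the largest candidate ramp rate whose predicted peak proxy
    stays below the limit, or None if no candidate is predicted safe."""
    safe = [r for r in candidates if predict_peak_proxy(r) < limit]
    return max(safe) if safe else None

candidates = [0.5, 1.0, 2.0, 4.0, 8.0]
best = fastest_safe_rate(candidates, limit=0.33)
print("fastest ramp rate predicted to be safe:", best)
```

In this toy setup, faster ramps finish sooner but push the proxy higher, so the search settles on the quickest rate that the predictor still considers safe. A real controller would optimize a full time-varying trajectory over many actuators rather than a single rate.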

“At some stage, the plasma will inevitably dissipate, but we call it a disruption when the plasma vanishes at high energy. In this case, we gradually reduced the energy to zero,” Wang notes. “We performed this numerous times. And we achieved better results across the board. Thus, we have statistical confidence that we improved the process.”

This work was supported in part by Commonwealth Fusion Systems (CFS), an MIT spin-off that aims to build the world’s first compact, grid-scale fusion power plant. The company is developing a demonstration tokamak, SPARC, designed to produce net-energy plasma, meaning that it should generate more energy than it takes to heat the plasma. Wang and his colleagues are working with CFS on ways that the new predictive model and similar tools can better forecast plasma behavior and prevent costly disruptions, to enable safe and reliable fusion energy.

“We’re striving to address the scientific questions to make fusion consistently practical,” Wang states. “What we have accomplished here marks the beginning of what remains a long journey. Yet, I believe we have made significant strides.”

The research was also supported within the framework of the EUROfusion Consortium, via the Euratom Research and Training Program, and was funded by the Swiss State Secretariat for Education, Research, and Innovation.